Data centers now power the digital economy, and their success depends on balancing scale, performance, cost, energy, sustainability, and resilience through intelligent capacity planning, advanced cooling, grid-aware power use, and adaptive infrastructure.

Data centers have evolved from peripheral IT facilities into critical enablers of the global digital economy, underpinning cloud computing, artificial intelligence, digital platforms, financial systems, and emerging intelligent services. As demand accelerates and workloads become more complex, the challenge faced by enterprises and operators is no longer simple capacity expansion, but achieving balance across performance, cost, energy, sustainability, resilience, and regulatory compliance. The concept of the data center balance reflects the need to harmonize competing priorities in an environment of rapid technological and economic change.

Executive Summary

In the evolving digital economy landscape, data centers have transitioned from ancillary IT facilities to pivotal components that support cloud computing, artificial intelligence (AI), digital platforms, and advanced financial systems. As the demand for data storage and processing continues to rise, enterprises face multifaceted challenges that extend beyond mere capacity expansion. Today's focus is on achieving a strategic balance among several critical factors: performance, cost, energy consumption, sustainability, resilience, and adherence to regulatory compliance.

This balance is essential as organizations navigate an increasingly complex environment marked by rapid technological advancements and shifting economic conditions. By prioritizing and harmonizing these competing interests, data center operators can enhance their operational efficiency and contribute to a more sustainable and resilient infrastructure. These considerations will ultimately determine the effectiveness and longevity of data center operations in the face of growing demands and expectations.

Balancing Explosive Demand with Intelligent Capacity

Exploding data volumes, accelerating cloud adoption, and the rapid growth of AI workloads have put unprecedented pressure on data center capacity worldwide. Digital platforms, real-time analytics, and compute-intensive applications make demand both fast-growing and highly unpredictable. As a result, capacity planning is no longer a static, long-term exercise but an ongoing strategic discipline.

Over-provisioning infrastructure ahead of demand often wastes capital through stranded assets, under-utilization, and avoidable energy consumption. Under-provisioning, on the other hand, exposes organizations to performance bottlenecks, service degradation, and lost revenue, especially for mission-critical digital services. The cost of either oversupply or undersupply is now too high to ignore.

To address this, contemporary data center strategies emphasize modular, scalable designs in which capacity can be added in small, predictable increments. Standardized modules, software-defined infrastructure, and automation help organizations keep expansion closely aligned with actual demand signals rather than speculative forecasts. This approach preserves operational readiness while retaining financial flexibility.

Notably, the balance between demand and capacity is not a fixed target but a moving one. Sustaining it requires continuous forecasting, real-time workload profiling, and infrastructure plans that adapt as usage patterns change. By treating capacity as a dynamic resource rather than a fixed asset, data center operators can support long-term growth, efficient use of investment, and reliable performance in an increasingly data-intensive world.
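As a rough illustration of incremental, demand-aligned expansion, the sketch below sizes a modular build-out against a demand forecast. The forecast values, the 2 MW module size, and the 15% headroom are hypothetical assumptions chosen for the example, not figures from this article.

```python
import math

def modules_needed(forecast_mw, module_mw, headroom=0.15):
    """Return the installed module count per period.

    Capacity must cover the forecast plus a headroom margin.
    Modules are only ever added, never removed.
    """
    plan = []
    installed = 0
    for demand in forecast_mw:
        required = math.ceil(demand * (1 + headroom) / module_mw)
        installed = max(installed, required)
        plan.append(installed)
    return plan

# Hypothetical quarterly demand forecast in MW (illustrative only).
forecast = [4.0, 5.5, 5.2, 8.1]
plan = modules_needed(forecast, module_mw=2.0)
print(plan)
```

Re-running the function whenever the forecast is updated keeps each expansion step tied to the latest demand signal instead of a one-off long-range estimate.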

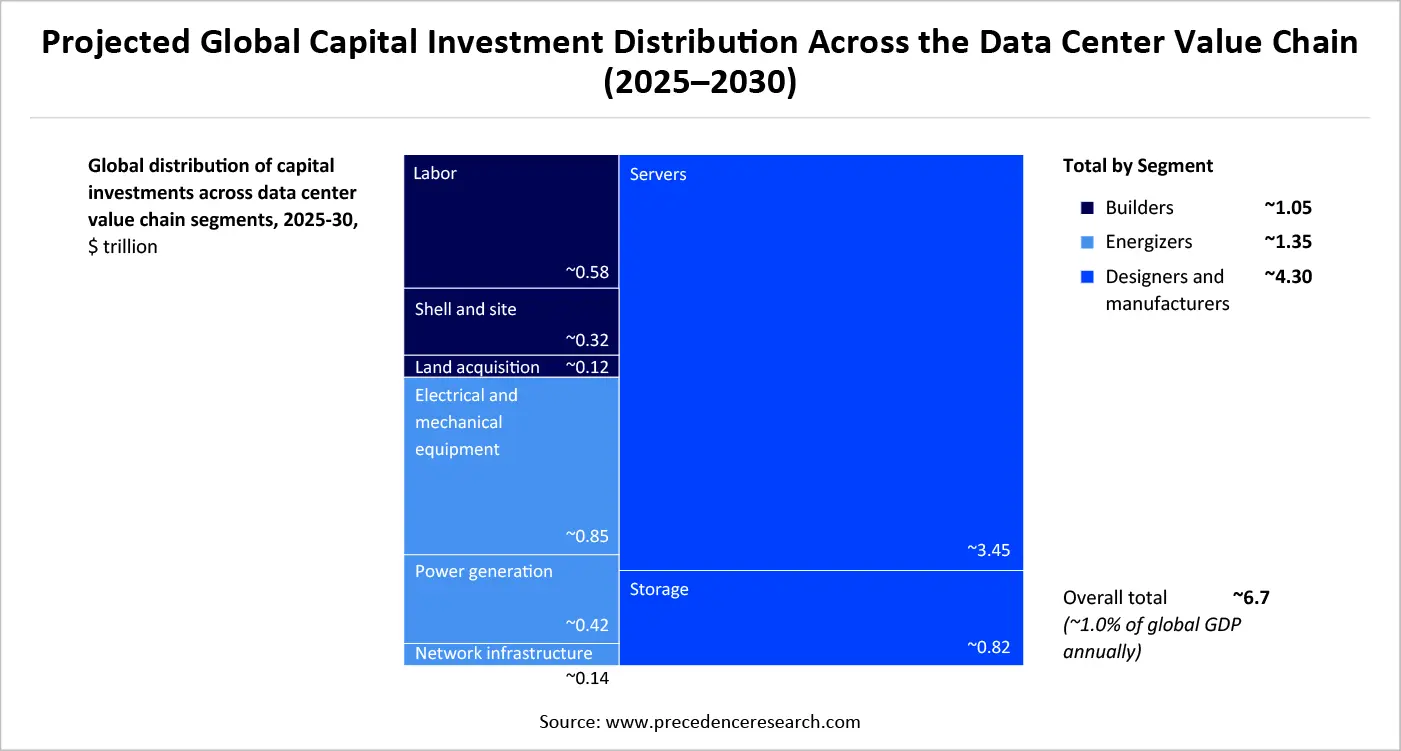

Projected Global Capital Investment Distribution Across the Data Center Value Chain (2025–2030)

Cooling Intelligence: Data Center Cooling Technology

Cooling has become a key determinant of performance, reliability, and sustainability as data centers move to high-density, compute-intensive environments. The rapid adoption of AI and high-performance computing workloads has sharply increased heat output, straining conventional cooling methods. Effective cooling is no longer an auxiliary service; it is a strategic capability that enables scale and resilience.

Traditional air-cooled systems were adequate for racks of low to medium density, but they are under pressure at today's power densities. Hot-aisle and cold-aisle containment, better airflow management, and intelligent temperature controls have extended the useful life of air cooling, but they are not enough to meet the needs of AI and high-performance computing applications. Consequently, data centers are shifting to more advanced, purpose-built cooling approaches.

Liquid cooling is becoming a cornerstone of next-generation data center design. Direct-to-chip liquid cooling removes heat at the point of generation, enabling much greater compute density and lowering total energy use. Immersion cooling, in which whole servers are submerged in thermally conductive fluids, provides even more efficient and uniform cooling. Such solutions not only enhance thermal performance but also reduce the physical footprint of cooling infrastructure.

At the facility level, alternative cooling methods, including evaporative cooling, free-air cooling, and geothermal-assisted systems, are gaining traction. By exploiting ambient environmental conditions, these techniques reduce reliance on energy-intensive mechanical cooling. Combined with improved monitoring and control systems, they allow data centers to tune cooling strategies dynamically according to workload intensity and external climate conditions.

AI itself is now being applied to optimize cooling. AI-based thermal management systems process sensor data in real time to forecast heat patterns, optimize airflow, and adjust cooling equipment. Such intelligent control improves energy efficiency and equipment performance while lowering operating costs, reinforcing the symbiotic relationship between AI workloads and AI-enabled infrastructure management.

To conclude, the future of data centers revolves around cooling technologies. As compute density and sustainability demands increase, the balance between performance, energy efficiency, and environmental impact depends heavily on advanced cooling solutions. Data centers that invest in adaptable, intelligent, purpose-built cooling will be well placed to handle the coming surge of digital and AI-driven expansion.

Turning Power Consumers into Grid Partners: Incentives for Data Center Grid Flexibility

Driven by cloud services, AI workloads, and 24/7 digital services, data centers are rapidly becoming some of the largest consumers of electricity. As their energy requirements grow, they are emerging not only as major power consumers but also as a potential stabilizing element of electrical grids. Incentivizing data centers to provide grid flexibility is becoming essential to balancing the reliability, cost efficiency, and sustainability of power systems in times of stress.

Grid flexibility is the ability of the electricity system to adjust to changes in supply and demand. Data centers are uniquely positioned to contribute to this flexibility because of their predictable loads, sophisticated energy management systems, and growing use of on-site generation and storage. With suitable incentives, data centers can shift workloads, adjust power demand, and support grid stability without affecting service performance.

Demand response programs are one of the main incentive mechanisms: data centers are paid to reduce or shift power usage during peak hours. By temporarily limiting non-critical workloads, rescheduling batch processing, or drawing on energy storage, data centers can relieve grid stress in exchange for reduced energy prices or direct compensation. Advanced workload orchestration and AI-based energy management are making such flexibility increasingly practical.

Another critical lever is incentives for on-site and contracted renewable energy generation. Policies that favor long-term power purchase agreements, tax breaks, or renewable energy credits can encourage data centers to match their energy use to the supply of clean energy. When combined with battery storage or backup generation, data centers can also feed power to the grid or operate independently during periods of instability, improving the resilience of the entire system.

Time-of-use pricing and dynamic tariffs provide further incentives for data centers to change their consumption patterns. Real-time electricity prices allow operators to optimize the time and location of compute-intensive tasks. This economic signal turns energy flexibility into a competitive advantage and rewards data centers that invest in smart energy optimization and distributed infrastructure.
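To make the idea of optimizing both the time and the location of a task concrete, the following minimal sketch searches hourly price curves for the cheapest contiguous window across sites. The site names and prices are invented for illustration, and a real scheduler would also weigh latency, data locality, and capacity limits.

```python
def cheapest_slot(prices, duration_h):
    """Find the cheapest contiguous window across all sites.

    prices: dict mapping site name -> list of hourly prices.
    Returns (site, start_hour, summed_price_over_window).
    """
    best = None
    for site, hourly in prices.items():
        for start in range(len(hourly) - duration_h + 1):
            cost = sum(hourly[start:start + duration_h])
            if best is None or cost < best[2]:
                best = (site, start, cost)
    return best

# Hypothetical hourly prices per site (illustrative only).
prices = {
    "site_a": [90, 70, 40, 35, 60, 95],
    "site_b": [80, 75, 50, 30, 28, 85],
}
best = cheapest_slot(prices, duration_h=2)
print(best)
```

An exhaustive search is adequate here because the number of sites and hourly slots is small; larger problems would typically be handed to an optimization solver.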

In addition, regulatory frameworks can recognize grid-supportive data centers as critical infrastructure. Commitments to load flexibility, energy efficiency, and grid participation can be tied to fast-track permitting, grid connection priority, and infrastructure subsidies. This kind of policy alignment promotes cooperation among utilities, regulators, and data center operators in place of adversarial capacity negotiations.

Finally, incentivizing data centers to provide grid flexibility marks a shift from a consumption-focused relationship to a partnership-based energy ecosystem. By aligning economic incentives with technological capability, data centers can help stabilize grids, integrate renewable energy, and reduce system-wide costs. Such a balanced approach means that the growth of digital infrastructure will strengthen, rather than weaken, the energy infrastructure it relies on.

What is the Data Center Market Size?

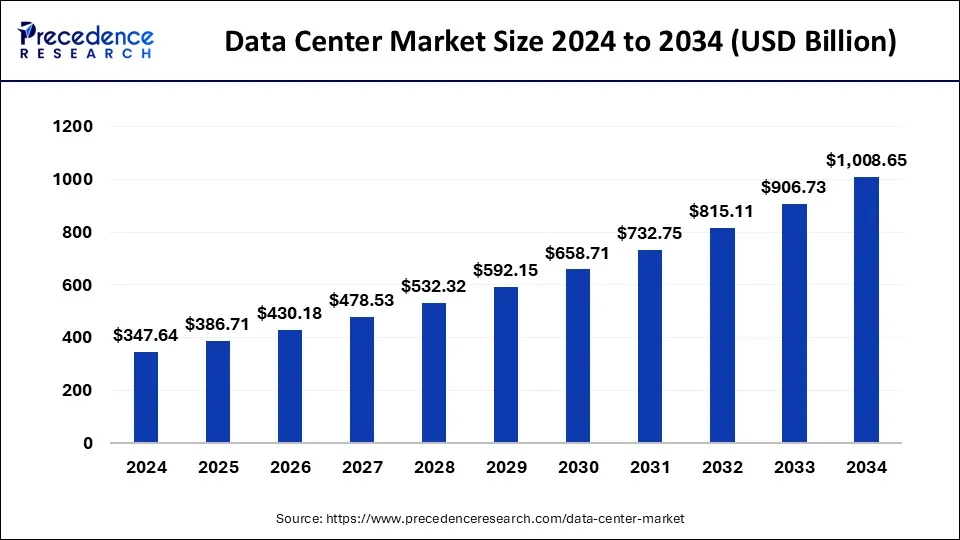

The global data center market size is estimated at USD 386.71 billion in 2025 and is predicted to increase from USD 430.18 billion in 2026 to approximately USD 1,103.70 billion by 2035, expanding at a CAGR of 11.06% from 2026 to 2035.
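As a quick consistency check on these figures, the compound annual growth rate can be recomputed from the 2026 and 2035 values quoted above. The helper name `cagr` is introduced here only for illustration.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1

# Figures quoted in the text, in USD billions.
size_2026 = 430.18
size_2035 = 1103.70
years = 2035 - 2026  # nine compounding periods

implied_rate = cagr(size_2026, size_2035, years)
projected_2035 = size_2026 * (1 + 0.1106) ** years

print(f"Implied CAGR: {implied_rate:.2%}")
print(f"2035 value at 11.06%: {projected_2035:.1f}")
```

The implied rate comes out at about 11.0%, which agrees with the stated 11.06% to within rounding of the endpoint values.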

Market Highlights

- North America dominated the global market with the largest market share of 41% in 2025.

- Asia Pacific is estimated to expand at the fastest CAGR between 2026 and 2035.

Mechanisms of Data Center Flexibility: Responsive and Grid-Aware Operations

As data centers grow in scale and energy intensity, flexibility mechanisms have become necessary to balance performance needs against power system constraints. These mechanisms let data centers dynamically change consumption patterns, support grid stability, and lower operational costs without affecting service reliability. They are turning data centers from static energy consumers into flexible, intelligent infrastructure assets.

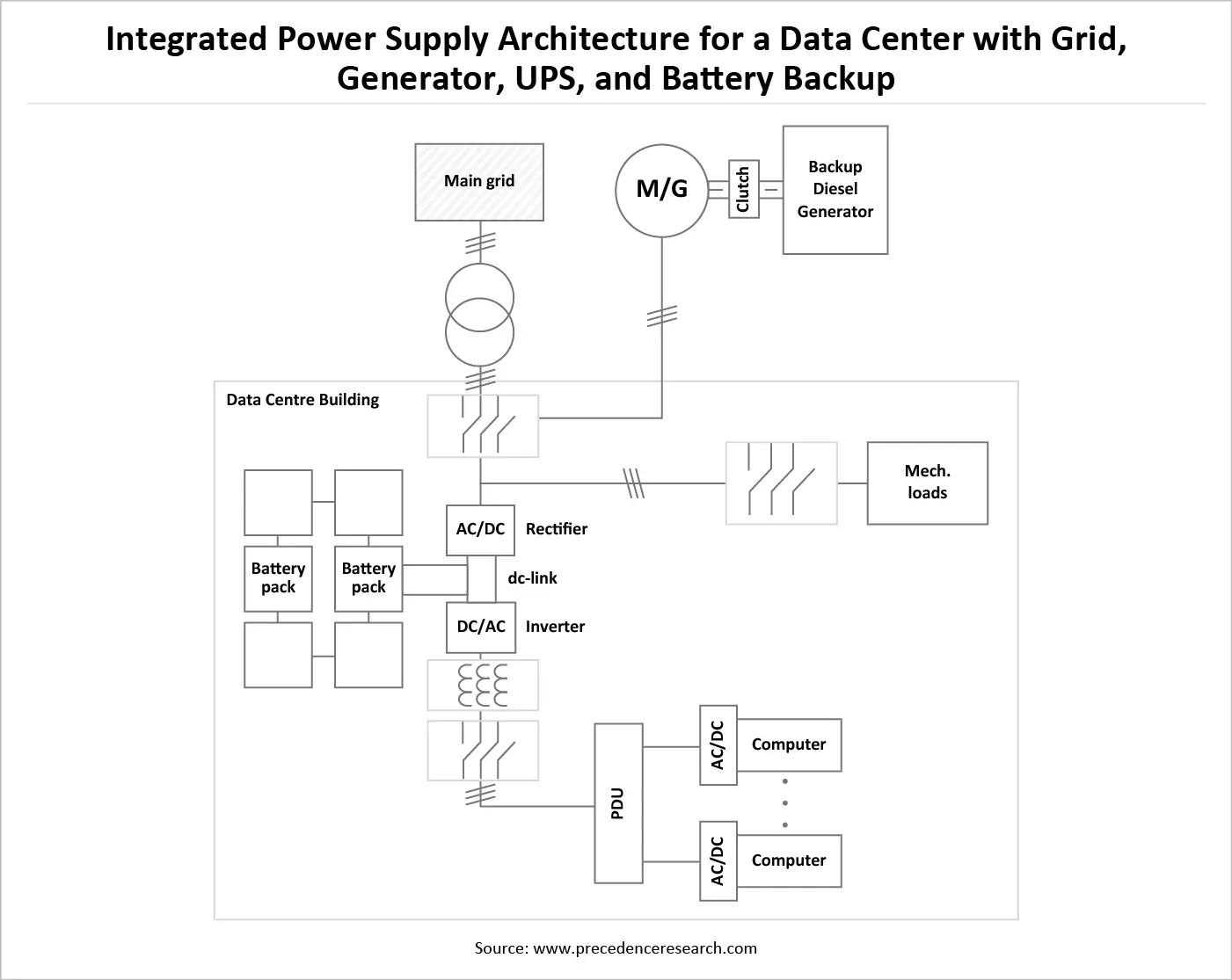

Uninterruptible power supply (UPS) systems are a foundation of data center flexibility. Traditionally designed to provide continuity during outages, modern UPS systems with battery storage can also provide short-term load shifting and grid services. When grid demand is high or disturbances occur, UPS batteries can temporarily power critical loads and ease the instantaneous load on the grid. This capability offers fast-response flexibility and supports grid resilience while still meeting high uptime requirements.
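The sketch below shows one simple way such UPS-based peak shaving could work: the battery discharges only when load exceeds a grid-draw limit, and never below a reserve held back for outages. All numbers and parameter names are hypothetical, and the model ignores recharge, conversion losses, and battery wear.

```python
def peak_shave(load_kw, grid_limit_kw, battery_kwh, reserve_kwh, step_h=1.0):
    """Cap grid draw at a limit by discharging the UPS battery.

    The battery is never drawn below reserve_kwh, which stays
    available for outage ride-through.
    Returns (grid draw per step, remaining battery energy).
    """
    grid_draw = []
    for load in load_kw:
        excess = max(0.0, load - grid_limit_kw)
        available_kw = max(0.0, battery_kwh - reserve_kwh) / step_h
        discharge = min(excess, available_kw)
        battery_kwh -= discharge * step_h
        grid_draw.append(load - discharge)
    return grid_draw, battery_kwh

# Hypothetical hourly load profile in kW (illustrative only).
load = [800, 950, 1100, 900]
grid_draw, remaining = peak_shave(
    load, grid_limit_kw=900, battery_kwh=400, reserve_kwh=250
)
print(grid_draw, remaining)
```

In this example the third hour cannot be shaved fully because the usable energy above the reserve runs out, which illustrates the trade-off between grid services and uptime protection.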

Workload deferral is the practice of postponing or rescheduling non-time-sensitive jobs, such as data backups, analytics recalculations, rendering, or batch processing. These jobs can be postponed or moved to times when electricity demand is lower or renewable energy is more abundant. Data centers thereby gain temporal flexibility, decoupling such workloads from fixed schedules so they run when energy conditions are most favorable.
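A minimal sketch of deadline-aware deferral follows. It assumes each job runs for one hour and can start anywhere between an earliest hour and a deadline; the job names and the grid demand curve are made up for the example.

```python
def defer_jobs(jobs, grid_demand):
    """Assign each one-hour job to the lowest-demand hour in its window.

    jobs: list of (name, earliest_hour, deadline_hour), bounds inclusive.
    grid_demand: list of relative grid demand values indexed by hour.
    Ties go to the earliest qualifying hour.
    """
    schedule = {}
    for name, earliest, deadline in jobs:
        window = range(earliest, deadline + 1)
        schedule[name] = min(window, key=lambda h: grid_demand[h])
    return schedule

# Hypothetical relative grid demand for hours 0..7 (illustrative only).
grid_demand = [60, 55, 50, 70, 90, 95, 80, 65]
jobs = [
    ("backup", 3, 7),
    ("render", 0, 4),
    ("analytics", 4, 5),
]
schedule = defer_jobs(jobs, grid_demand)
print(schedule)
```

The same structure works if `grid_demand` is replaced by an hourly carbon-intensity or price signal, since the function only needs a value to minimize per hour.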

Smart scheduling is the main enabler of flexibility in contemporary data centers. Intelligent orchestration platforms distribute workloads dynamically across time, location, and infrastructure according to energy prices, grid conditions, and performance requirements. Compute-intensive tasks can run when power is cheaper or cleaner, while latency-sensitive services keep priority. This turns energy awareness into an effective optimization lever.

Clock rate modulation, also known as dynamic frequency scaling, enables servers to adjust processor speeds based on power or thermal limits. By briefly lowering CPU clock speeds during a grid stress event, data centers can achieve significant power savings with little loss of service quality. This fine-grained control offers rapid, reversible flexibility that is most useful for short-term grid balancing.
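To give a feel for why modest frequency reductions can save substantial power, the sketch below uses a common first-order approximation: dynamic power scales with voltage squared times frequency, so if voltage is lowered roughly in proportion to frequency, dynamic power falls with about the cube of the frequency ratio. The cubic exponent and the wattages are assumptions for illustration; real processors deviate from this model.

```python
def cpu_power_w(freq_ratio, dynamic_w_at_full, static_w, exponent=3.0):
    """Estimate CPU power at a fraction of full clock speed.

    Assumes dynamic power scales as freq_ratio ** exponent
    (about 3 when voltage tracks frequency) and static power
    is unaffected. This is an approximation, not a vendor model.
    """
    return static_w + dynamic_w_at_full * freq_ratio ** exponent

# Hypothetical server CPU: 150 W dynamic and 50 W static at full speed.
full = cpu_power_w(1.0, dynamic_w_at_full=150, static_w=50)
throttled = cpu_power_w(0.8, dynamic_w_at_full=150, static_w=50)
saving_fraction = (full - throttled) / full

print(f"Full: {full:.1f} W, at 80% clock: {throttled:.1f} W")
print(f"Saving: {saving_fraction:.1%}")
```

Under these assumptions a 20% clock reduction cuts power by more than a third, which is why frequency scaling is attractive for short grid stress events.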

Pre-cooling is a thermal flexibility technique that takes advantage of the thermal inertia of data center environments. Facilities are cooled below nominal operating temperatures ahead of expected peak-demand periods. Cooling systems can then be throttled during the peak without exceeding safe operating temperatures. Pre-cooling is a practical way to shift energy consumption in time without affecting compute availability.

Flexible job characteristics describe workloads that tolerate variability in performance, location, or timing. Flexibility strategies are especially suited to jobs that have scheduling slack, can run in parallel, or can be moved geographically. By categorizing workloads according to these flexibility properties, data centers can systematically identify load shifting, throttling, or migration opportunities that maximize responsiveness with minimal operational risk.
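One way to picture this categorization is a small record per job that captures its tolerance for delay, throttling, and relocation, mapped to the flexibility actions it permits. The field names and example jobs below are hypothetical and chosen only to illustrate the idea.

```python
from dataclasses import dataclass

@dataclass
class Job:
    name: str
    slack_h: float     # hours the job can wait before it must start
    throttle_ok: bool  # tolerates reduced performance
    movable: bool      # can run at another site

def flexibility_options(job):
    """List the flexibility actions a job's properties allow."""
    options = []
    if job.slack_h > 0:
        options.append("shift")
    if job.throttle_ok:
        options.append("throttle")
    if job.movable:
        options.append("migrate")
    return options

# Hypothetical workloads (illustrative only).
web = Job("web_frontend", slack_h=0, throttle_ok=False, movable=False)
training = Job("batch_training", slack_h=12, throttle_ok=True, movable=True)

print(web.name, flexibility_options(web))
print(training.name, flexibility_options(training))
```

Jobs that return an empty list are treated as inflexible and left untouched, which keeps latency-sensitive services out of any grid-response action.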

Cryptocurrency mining is an extreme example of flexible computing. Mining workloads are highly energy-intensive but largely insensitive to latency and scheduling delays. Consequently, they can be scaled up and down easily in response to grid conditions or energy prices. Although it is not a core activity for most enterprise data centers, cryptocurrency mining illustrates how compute loads can act as highly elastic demand resources in power systems.

Collectively, these flexibility mechanisms allow data centers to participate actively in energy markets, contribute to grid stability, and improve sustainability outcomes. Combining power-aware technology with smart workload management can bring a new equilibrium to data centers, ensuring continuity of digital services while making the energy infrastructure more flexible and resilient.

Data Center Tier Levels: Structuring Reliability, Availability, and Resilience

Data center tier levels provide a standardized framework for classifying facilities based on their infrastructure robustness, fault tolerance, and uptime performance. Tier classification helps organizations align data center design and investment with business criticality, risk tolerance, and service availability requirements. As digital services become increasingly mission-critical, tier levels play a central role in balancing cost, reliability, and operational resilience.

Tier I: Basic Capacity

Tier I data centers represent the most fundamental level of infrastructure. They typically include a single path for power and cooling and minimal redundancy. Planned maintenance or unexpected failures can result in downtime, as systems must be shut down for repairs. Tier I facilities are suitable for non-critical workloads, development environments, or small organizations with limited availability requirements and cost constraints.

Tier II: Redundant Capacity Components

Tier II facilities introduce redundancy at the component level, such as backup power generators, UPS systems, and cooling units. While still relying on a single distribution path, these data centers offer improved reliability and reduced risk of disruption compared to Tier I. However, maintenance activities can still cause service interruptions. Tier II is often appropriate for businesses requiring moderate availability without the complexity and cost of higher-tier designs.

Tier III: Concurrently Maintainable Infrastructure

Tier III data centers are designed for high availability and operational continuity. They feature multiple power and cooling paths, with at least one path active at all times. This allows maintenance and equipment replacement to occur without shutting down operations. Tier III facilities are widely adopted by enterprises, cloud providers, and colocation operators supporting mission-critical applications that require strong uptime guarantees and operational flexibility.

Tier IV: Fault-Tolerant Infrastructure

Tier IV represents the highest level of data center resilience. These facilities are fully fault-tolerant, with redundant systems and distribution paths capable of sustaining operations even during unplanned failures. Any single component failure does not impact service availability. Tier IV data centers are designed for environments where downtime is unacceptable, such as financial services, national infrastructure, and large-scale cloud platforms supporting critical digital services.

Choosing the appropriate tier level is a strategic decision that balances availability requirements, risk exposure, and investment cost. Higher tiers deliver greater resilience but require significantly higher capital and operational expenditure. Increasingly, organizations adopt hybrid strategies, deploying Tier III or Tier IV infrastructure for critical workloads while using lower-tier facilities for less sensitive applications.
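To put the tier trade-off in concrete terms, the sketch below converts availability percentages into expected downtime per year. The percentages are the figures commonly cited for the four tiers in industry literature; they do not come from this article and should be treated as an assumption rather than a guarantee for any given facility.

```python
HOURS_PER_YEAR = 8760

# Commonly cited availability targets per tier (assumed, not from this article).
availability_pct = {
    "Tier I": 99.671,
    "Tier II": 99.741,
    "Tier III": 99.982,
    "Tier IV": 99.995,
}

def annual_downtime_hours(pct):
    """Expected downtime per year implied by an availability percentage."""
    return (1 - pct / 100) * HOURS_PER_YEAR

downtime = {tier: annual_downtime_hours(p) for tier, p in availability_pct.items()}
for tier, hours in downtime.items():
    print(f"{tier}: {hours:.2f} hours per year")
```

Seen this way, the gap between tiers is a matter of hours versus minutes of downtime per year, which helps frame whether the extra capital cost of a higher tier is justified for a given workload.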

In the era of cloud computing, AI, and distributed architectures, tier levels remain relevant but are complemented by software-based resilience, geographic redundancy, and workload portability. Together, these approaches redefine reliability as a system-level outcome rather than a property of a single facility. In essence, data center tier levels provide a structured foundation for reliability, enabling organizations to align infrastructure design with business priorities and the evolving demands of the digital economy.

Integrated Power Supply Architecture for a Data Center with Grid, Generator, UPS, and Battery Backup

Future of the Industry

The outlook for the data center sector is promising, driven by a surge in AI-focused data centers designed to overcome the limitations of conventional infrastructure. Demand is rising with the extensive use of data, computing, and digitalization, along with the scaling of new technologies. Capital allocation and closer links with the power sector present future opportunities for data centers. Continuous advances in generative AI are expected to produce large volumes of data, increasing the need for data centers that can manage complex data.

Enterprises and investors favor modular construction, which can speed up the build-out of data centers and promote sustainable construction practices. By building data centers in new and atypical locations, enterprises can benefit from cheaper, available power and carbon-free infrastructure. Hyperscalers are focusing on building data centers in tier-2 and tier-3 cities of emerging economies, driving the future of the digital ecosystem. This is supported by a growing metropolitan population, maturing fiber infrastructure and network connectivity, abundant utility access, and supportive regulatory environments.

Demand for clean power is also expected to rise gradually in the near future as operators work toward sustainability targets. Over the longer term, data centers may meet their energy requirements through offshore wind, nuclear fission, fusion, and geothermal sources; in the nearer term, new clean generation is more likely to come from solar and onshore wind. This may help sustain and control high power purchase agreement (PPA) rates for renewable-energy providers.

Conclusion

In conclusion, the evolution of data centers from auxiliary IT facilities to central players in the digital economy signifies a paradigm shift that demands a nuanced approach to capacity management, performance, and sustainability. As data volumes soar and workloads become increasingly complex, the challenge for enterprises lies in achieving a delicate balance among performance, energy efficiency, cost, sustainability, resilience, and regulatory compliance. This balance is not a static goal but a continuous endeavor that requires adaptive strategies, ongoing forecasting, and innovative technologies.

By integrating intelligent capacity planning and advanced cooling technologies, data centers can better meet the demands of today’s environment while minimizing wasted resources and maximizing operational efficiency. Embracing modular designs and liquid cooling solutions will not only enhance performance but also contribute to long-term sustainability goals.

Ultimately, the future of data centers will be defined by their ability to adapt to rapid technological changes while maintaining efficiency and resilience. As organizations navigate these challenges, a proactive and intelligent approach to data center operations will be paramount in fostering a sustainable digital infrastructure that can support the burgeoning demands of the digital age.

About the Authors

Aditi Shivarkar

Aditi, Vice President at Precedence Research, brings over 15 years of expertise at the intersection of technology, innovation, and strategic market intelligence. A visionary leader, she excels in transforming complex data into actionable insights that empower businesses to thrive in dynamic markets. Her leadership combines analytical precision with forward-thinking strategy, driving measurable growth, competitive advantage, and lasting impact across industries.

Aman Singh

With over 13 years of progressive expertise at the intersection of technology, innovation, and strategic market intelligence, Aman Singh stands as a leading authority in global research and consulting. Renowned for his ability to decode complex technological transformations, he provides forward-looking insights that drive strategic decision-making. At Precedence Research, Aman leads a global team of analysts, fostering a culture of research excellence, analytical precision, and visionary thinking.

Piyush Pawar

Piyush Pawar brings over a decade of experience as Senior Manager, Sales & Business Growth, acting as the essential liaison between clients and our research authors. He translates sophisticated insights into practical strategies, ensuring client objectives are met with precision. Piyush’s expertise in market dynamics, relationship management, and strategic execution enables organizations to leverage intelligence effectively, achieving operational excellence, innovation, and sustained growth.
