Shared Perceptions: How Multimodal LLMs and the Human Brain Represent Objects Similarly

A recent study by researchers at the Chinese Academy of Sciences examined how multimodal LLMs represent objects. It also asked whether those representations follow a pattern similar to the one used by the regions of the human sensory cortex that represent objects in the outside world. Their results are published in Nature Machine Intelligence.

Whether technology can resemble the workings of the human brain has long been debated by science fiction readers and writers, scientists, and thinkers. Humans sit at the center of this question as both the creators and the observers of these systems. For that reason, understanding the relationship between the two has significant implications for fields such as neuroscience, computer science, and psychology. Text and images produced by multimodal large language models such as ChatGPT can closely resemble human-created content, which has sparked intense curiosity about how these models work internally.

Changde Du and Kaicheng Fu wrote in their paper: “Understanding how humans conceptualize and categorize natural objects offers critical insights into perception and cognition. With the advent of large language models, a key question arises: can these models develop human-like object representations from linguistic and multimodal data?”

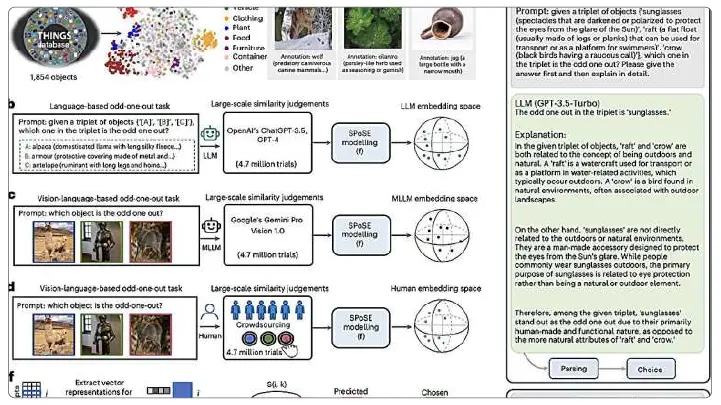

To explore this question, the researchers combined behavioral and neuroimaging analyses of object concepts in LLMs and the human brain. They used object representations from the LLM ChatGPT 3.5 from OpenAI and the multimodal LLM Gemini Pro Vision 1.0 from Google DeepMind. The models performed a simple task known as triplet judgment. In each trial, a model was shown three objects and asked to pick the two that are most similar, which is equivalent to naming the odd one out.
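To make the triplet task concrete, the sketch below shows how an odd-one-out decision can be read off from a similarity function. It is an illustration only. The object names, the three invented feature dimensions, and the toy vectors are hypothetical. In the study, the judgments came from prompting the models, not from code like this.

```python
from itertools import combinations

# Hypothetical toy vectors along three invented dimensions
# ("animal-related", "tool-related", "food-related"); not data from the study.
TOY_EMBEDDINGS = {
    "dog": [0.9, 0.0, 0.1],
    "cat": [0.8, 0.0, 0.2],
    "hammer": [0.0, 0.9, 0.0],
}


def similarity(a, b):
    """Dot-product similarity between two vectors."""
    return sum(x * y for x, y in zip(a, b))


def odd_one_out(triplet, embeddings):
    """Return (most_similar_pair, odd_item) for a triplet of object names."""
    # Score all three pairs and keep the most similar one.
    best_pair = max(
        combinations(triplet, 2),
        key=lambda pair: similarity(embeddings[pair[0]], embeddings[pair[1]]),
    )
    # The item that is not in the most similar pair is the odd one out.
    odd = next(item for item in triplet if item not in best_pair)
    return best_pair, odd


pair, odd = odd_one_out(("dog", "hammer", "cat"), TOY_EMBEDDINGS)
print(pair, odd)  # ('dog', 'cat') hammer
```

Choosing the most similar pair and choosing the odd one out are the same decision, because each of the three pairs leaves exactly one item out.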

Using this task, the researchers collected 4.7 million triplet judgments from the LLMs. From these judgments, they derived low-dimensional embeddings that capture the similarity structure among objects. Embeddings are mathematical representations that describe each object along a set of dimensions. Objects judged similar end up close to each other in this abstract space.
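The idea of turning triplet choices into embeddings can be sketched as follows. This is a minimal, hypothetical illustration, not the authors' pipeline. Each object gets a vector, and the similarity of two objects is the dot product of their vectors. The probability that a pair is chosen as most similar is a softmax over the three pair similarities in the triplet, and gradient descent adjusts the vectors to make the observed choices more likely. Methods applied to human triplet data, such as SPoSE (sparse positive similarity embedding), also keep the vectors nonnegative and sparse so that the dimensions are interpretable. That constraint is omitted here for brevity. The toy data (six objects in two invented categories) and all function names are assumptions.

```python
import math
import random
from itertools import combinations


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def pair_probs(X, i, j, k):
    """Softmax over the pair similarities s_ij, s_ik, s_jk of triplet (i, j, k).

    The three values are the probabilities that k, j or i, respectively,
    is chosen as the odd one out.
    """
    s = [dot(X[i], X[j]), dot(X[i], X[k]), dot(X[j], X[k])]
    top = max(s)  # subtract the max for numerical stability
    e = [math.exp(v - top) for v in s]
    z = sum(e)
    return [v / z for v in e]


def mean_nll(X, triplets):
    """Average negative log-likelihood; each triplet is (i, j, odd) with (i, j) chosen."""
    return -sum(math.log(pair_probs(X, i, j, k)[0]) for i, j, k in triplets) / len(triplets)


def accuracy(X, triplets):
    """Fraction of triplets where the chosen pair gets the highest probability."""
    hits = 0
    for i, j, k in triplets:
        p = pair_probs(X, i, j, k)
        if p[0] == max(p):
            hits += 1
    return hits / len(triplets)


def fit_embeddings(triplets, n_objects, dim=3, lr=0.05, epochs=200, seed=0):
    """Fit unconstrained dot-product embeddings to triplet choices with plain SGD."""
    rng = random.Random(seed)
    X = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(n_objects)]
    for _ in range(epochs):
        order = list(triplets)
        rng.shuffle(order)
        for i, j, k in order:
            p_ij, p_ik, p_jk = pair_probs(X, i, j, k)
            xi, xj, xk = X[i][:], X[j][:], X[k][:]  # copy before updating
            for d in range(dim):
                # Gradients of -log p_ij with respect to each object's vector.
                gi = (p_ij - 1) * xj[d] + p_ik * xk[d]
                gj = (p_ij - 1) * xi[d] + p_jk * xk[d]
                gk = p_ik * xi[d] + p_jk * xj[d]
                X[i][d] -= lr * gi
                X[j][d] -= lr * gj
                X[k][d] -= lr * gk
    return X


def make_toy_triplets(groups):
    """Hypothetical judgments: in mixed triplets the same-category pair is chosen."""
    triplets = []
    for trio in combinations(range(len(groups)), 3):
        for i, j in combinations(trio, 2):
            k = next(x for x in trio if x not in (i, j))
            if groups[i] == groups[j] != groups[k]:
                triplets.append((i, j, k))
    return triplets


groups = [0, 0, 0, 1, 1, 1]  # objects 0-2: "animals", 3-5: "tools" (invented)
triplets = make_toy_triplets(groups)
X_init = fit_embeddings(triplets, len(groups), epochs=0)
X = fit_embeddings(triplets, len(groups))
print(len(triplets), round(mean_nll(X_init, triplets), 3), round(mean_nll(X, triplets), 3))
print(accuracy(X, triplets))
```

On this toy data, the fitted embeddings should separate the two categories, so the same-category pair receives the highest probability in each triplet. A real analysis would hold out some triplets to measure how well the embeddings predict judgments they were not trained on.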

Surprisingly, the resulting 66-dimensional embeddings were highly stable and predictive, and they showed semantic clustering similar to that of human mental representations. The team also reported in their paper: “Further analysis showed strong alignment between model embeddings and neural activity patterns in brain regions such as the extra-striate body area, parahippocampal place area, retro-splenial cortex, and fusiform face area.” This supports the idea that the way LLMs represent objects shares key features with human conceptual knowledge. The underlying processes are not identical, but they are similar. In the future, these findings could inform technological advances such as brain-inspired AI systems that assist humans more effectively.
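One common way to quantify this kind of model-brain alignment is representational similarity analysis (RSA). It works in three steps: compute how dissimilar every pair of objects is in the model, do the same for the brain responses, and correlate the two sets of dissimilarities. The sketch below assumes that RSA approach, using Euclidean distances and a Spearman rank correlation. It is not necessarily the exact analysis used in the paper. The vectors are hypothetical stand-ins for model embeddings and fMRI response patterns from one brain region.

```python
import math
from itertools import combinations


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rdm_upper(vectors):
    """Upper triangle of a representational dissimilarity matrix, as a flat list."""
    return [euclidean(vectors[i], vectors[j]) for i, j in combinations(range(len(vectors)), 2)]


def ranks(values):
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        average = (start + end) / 2 + 1
        for idx in order[start:end + 1]:
            result[idx] = average
        start = end + 1
    return result


def pearson(x, y):
    """Pearson correlation; raises ValueError if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


def rsa_score(model_vectors, brain_vectors):
    """Spearman correlation (Pearson on ranks) between model and brain RDMs."""
    return pearson(ranks(rdm_upper(model_vectors)), ranks(rdm_upper(brain_vectors)))


# Hypothetical data: row n in both lists describes the same object.
model = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.1, 0.9, 0.3], [0.0, 0.2, 0.9]]
brain = [[2 * v for v in row] for row in model]  # stand-in "voxel" patterns
print(rsa_score(model, brain))
```

A score near 1 means the model and the brain region order object pairs by dissimilarity in nearly the same way. A score near 0 means there is no such relationship. Rank correlation is used so that only the ordering of dissimilarities matters, not their scale. This is why the doubled "brain" patterns in the example still match the model.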