Data Center GPU Market Size and Growth 2025 to 2034

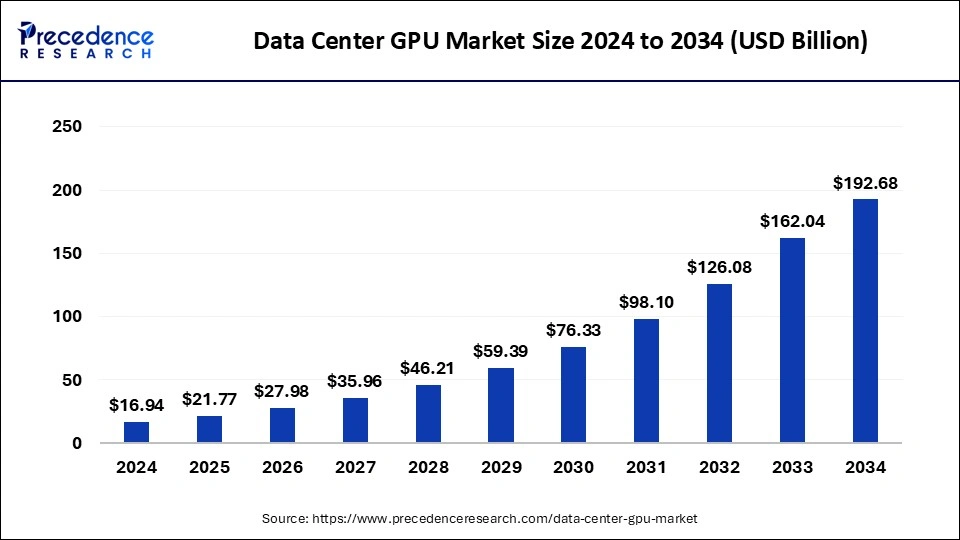

The global data center GPU market size was estimated at USD 16.94 billion in 2024 and is predicted to increase from USD 21.77 billion in 2025 to approximately USD 192.68 billion by 2034, expanding at a CAGR of 27.52% from 2025 to 2034. Increasing demand for artificial intelligence and the growing PC gaming and gaming console industries are the key drivers of the data center GPU market's growth.
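The headline growth rate follows the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. The short Python sketch below applies it to the report's own figures. The function name `cagr` is our own illustration and is not part of the report's stated methodology.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Report figures, in USD billion
size_2024 = 16.94
size_2025 = 21.77
size_2034 = 192.68

# Ten compounding periods from the 2024 base year
print(f"2024 -> 2034: {cagr(size_2024, size_2034, 10):.2%}")
# Nine compounding periods from the 2025 estimate
print(f"2025 -> 2034: {cagr(size_2025, size_2034, 9):.2%}")
```

Run as written, the 2024-based calculation reproduces the stated 27.52%, while compounding the 2025 estimate over nine years gives roughly 27.4%. This suggests the quoted rate is measured from the 2024 base year, although the report does not spell out its calculation.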

Data Center GPU Market Key Takeaways

- In terms of revenue, the global data center GPU market was valued at USD 16.94 billion in 2024.

- It is projected to reach USD 192.68 billion by 2034.

- The market is expected to grow at a CAGR of 27.52% from 2025 to 2034.

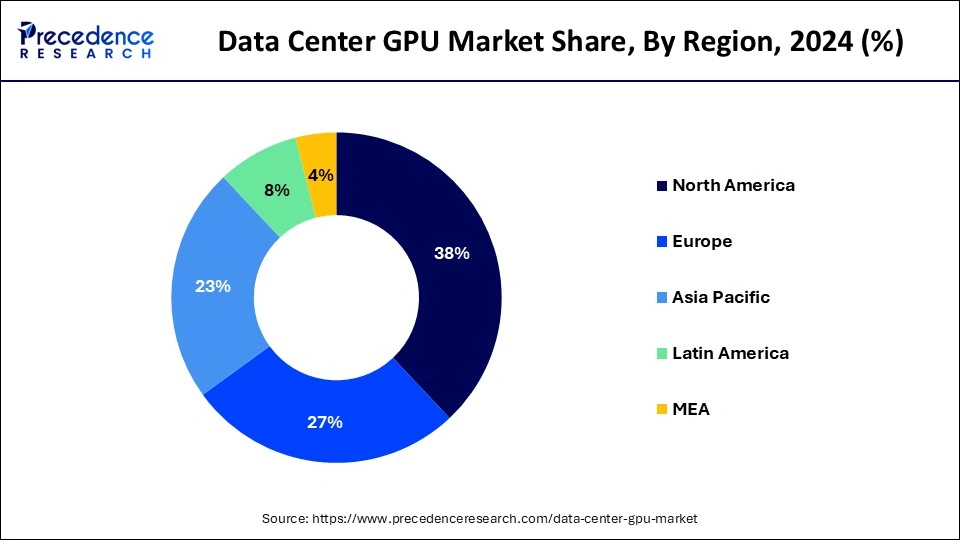

- North America dominated the market with the largest revenue share of 38% in 2024.

- Asia Pacific is expected to witness the fastest growth in the market over the forecast period.

- By deployment model, the on-premises segment held a major revenue share of 59% in 2024.

- By deployment model, the cloud segment is projected to grow at the fastest rate over the forecast period.

- By function, the training segment accounted for more than 66% of revenue share in 2024.

- By function, the inference segment is projected to gain a significant share of the market in the upcoming years.

U.S. Data Center GPU Market Size and Growth 2025 to 2034

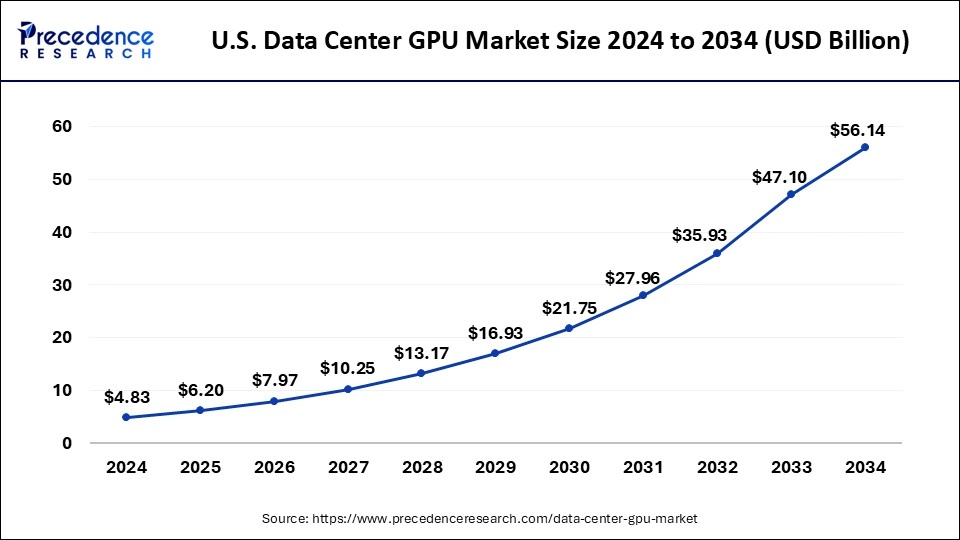

The U.S. data center GPU market size reached USD 4.83 billion in 2024 and is predicted to attain around USD 56.14 billion by 2034, at a CAGR of 27.80% from 2025 to 2034.
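Compounding also works in the forward direction: starting from the 2024 U.S. estimate and applying the stated 27.80% rate for ten years should land near the 2034 figure. This Python sketch assumes a constant annual rate, which is a simplification; actual year-to-year growth will vary, and the intermediate values are not published figures.

```python
def project(start_value: float, annual_rate: float, years: int) -> list[float]:
    """Return [start, year 1, ..., year N] assuming constant compound growth."""
    values = [start_value]
    for _ in range(years):
        values.append(values[-1] * (1 + annual_rate))
    return values


# U.S. market in USD billion: 2024 base and stated CAGR from the report
us_values = project(4.83, 0.2780, 10)
for year, value in zip(range(2024, 2035), us_values):
    print(f"{year}: USD {value:.2f} billion")
```

The last line printed is approximately USD 56.14 billion, consistent with the report's 2034 projection.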

North America dominated the data center GPU market in 2024. The regional market is thriving due to technological advancements and advanced research built on high-end GPUs. Key factors include a strong base of high-tech industries, extensive research and development efforts, and rising investments in artificial intelligence and machine learning applications. Growth is driven in particular by the increasing adoption of cloud services by businesses across sectors, especially in the United States and Canada.

- In May 2024, Nscale, in collaboration with AMD, launched its high-performance GPU cloud, marking a significant advancement in cloud computing services. Originally spun out from Arkon Energy, a leading 300MW data center and hosting business in North America, Nscale is now setting a new standard for AMD technology-focused cloud solutions.

- In September 2023, Advantech announced the acquisition of a 100% ownership position in BitFlow, Inc., a North American company specializing in AI machine vision and high-end image acquisition. Advantech plans to build on BitFlow's core high-speed image acquisition technology, its broad product line, and its market base.

Asia Pacific is expected to witness the fastest growth in the data center GPU market over the forecast period. The regional market is driven mainly by the rapidly expanding IT and telecommunications sector, the growing e-commerce industry, and an increased focus on AI and ML applications. Advancements in data center GPUs are also expected to propel market growth, and together these factors are raising demand for data center GPUs. The increasing adoption of cloud computing by businesses in Asia Pacific will further boost demand in the region.

- In January 2024, Singapore Telecommunications announced a tie-up with Nvidia to deploy artificial intelligence capabilities in its data centers across Southeast Asia as data demand in the region booms on the back of the growing digital economy.

Market Overview

Graphics processing units (GPUs) are widely used in data centers because of their powerful parallel processing capabilities, which make them well suited to applications such as scientific computing, machine learning, and big data processing. Unlike central processing units (CPUs), which have a relatively small number of powerful cores optimized for sequential tasks, GPUs contain thousands of simpler cores that apply the same operation to many pieces of data simultaneously. Discrete GPUs also have their own dedicated memory to store the data they process.
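To make the data-parallel model concrete, the Python sketch below serially emulates how a GPU kernel assigns one array element to each thread. The `launch` helper and the grid and block sizes are hypothetical illustrations; the indexing mirrors CUDA's `blockIdx.x * blockDim.x + threadIdx.x` convention, but on real hardware the threads run concurrently rather than in a loop.

```python
from typing import Callable


def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    """Serially emulate a 1-D kernel launch of grid_dim blocks x block_dim threads.

    A real GPU runs these (block, thread) pairs concurrently; the loops here
    only illustrate how the work is divided.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def vector_add(block_idx: int, block_dim: int, thread_idx: int,
               a: list[float], b: list[float], out: list[float]) -> None:
    # Each thread derives one global index, like blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # the last block may contain spare threads
        out[i] = a[i] + b[i]


n = 10
a = [float(x) for x in range(n)]
b = [2.0 * x for x in range(n)]
out = [0.0] * n

block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # ceiling division: 3 blocks, 12 threads
launch(vector_add, grid_dim, block_dim, a, b, out)
print(out)
```

Every thread executes the same instructions on a different element, which is the sense in which GPU cores handle the same task simultaneously; the bounds check is needed because the thread count is rounded up to whole blocks.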

A crucial aspect of AI and machine learning (ML) is the training of advanced neural networks, which is significantly accelerated by GPU servers. Companies in the data center GPU market have seen increased demand for their GPU products, such as the Nvidia A100 Tensor Core GPU, specifically designed for AI tasks. The global data center GPU market is expanding as various industries, including healthcare, finance, and autonomous vehicles, adopt GPU servers to manage large datasets and improve the accuracy of AI models.

Data Center GPU Market Growth Factors

- Rising consumer demand and shifting consumer preferences are expected to drive the data center GPU market growth.

- The emergence of more efficient products in the industry can fuel the data center GPU market growth.

- Investments from key industry players will likely help the market grow during the forecast period.

Market Scope

| Report Coverage | Details |
| --- | --- |
| Market Size by 2034 | USD 192.68 Billion |
| Market Size in 2025 | USD 21.77 Billion |
| Growth Rate from 2025 to 2034 | CAGR of 27.52% |
| Largest Market | North America |
| Base Year | 2024 |
| Forecast Period | 2025 to 2034 |
| Segments Covered | Deployment Model, Function, End-user, and Regions |
| Regions Covered | North America, Europe, Asia-Pacific, Latin America, and Middle East & Africa |

Market Dynamics

Driver

Rising adoption of multi-cloud strategies

The increasing adoption of multi-cloud strategies and network upgrades to support 5G are significantly driving the data center GPU market's growth. A multi-cloud strategy uses two or more cloud computing services to deploy specific applications. Businesses are adopting multi-cloud architectures to prevent data loss or downtime from localized failures, comply with security regulations, and meet workload requirements. This approach also avoids dependence on a single cloud service provider. Meanwhile, investments in communication network infrastructure are rising to support the transition from 3G and 4G to 5G.

- In October 2023, IBM introduced the new IBM Storage Scale System 6000, a cloud-scale global data platform designed to meet the demands of today's data-intensive and AI workloads. It is the latest offering in the IBM Storage for Data and AI portfolio.
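The failover rationale behind multi-cloud adoption can be sketched in a few lines of Python. The provider names, the `submit` callables, and the `ProviderUnavailable` exception are hypothetical stand-ins for real cloud SDK calls; the sketch only shows a workload being routed to a second provider when the first one suffers a localized failure.

```python
from dataclasses import dataclass
from typing import Callable


class ProviderUnavailable(Exception):
    """Raised by a (hypothetical) provider client when its region is down."""


@dataclass
class CloudProvider:
    name: str
    submit: Callable[[str], str]  # hypothetical client call: job spec -> job ID


def submit_with_failover(providers: list[CloudProvider], job: str) -> dict[str, str]:
    """Try each provider in priority order and return the first successful submission."""
    errors = []
    for provider in providers:
        try:
            return {"provider": provider.name, "job_id": provider.submit(job)}
        except ProviderUnavailable as exc:
            errors.append(f"{provider.name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Stub clients standing in for real SDK calls
def outage(job: str) -> str:
    raise ProviderUnavailable("regional outage")


def healthy(job: str) -> str:
    return f"job-{len(job)}"


result = submit_with_failover(
    [CloudProvider("cloud-a", outage), CloudProvider("cloud-b", healthy)],
    "train-model",
)
print(result)
```

Because the first provider raises an outage error, the job lands on the second provider; a real deployment would also need to replicate data across providers for this to prevent data loss.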

Restraint

Economic barriers

The economies of scale achieved by GPU manufacturers create a substantial barrier for data center GPU server manufacturers considering backward integration. A server manufacturer attempting to produce its own GPUs would struggle to match these economies of scale, which would weaken its ability to invest in research and development, offer competitive pricing, and remain effective in the highly competitive data center GPU market.
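The scale advantage can be illustrated with the average-cost formula, average cost = fixed cost / volume + variable cost per unit. The figures in this Python sketch are purely hypothetical and are not taken from the report; they only show why a new entrant producing small volumes faces a much higher cost per GPU than an established manufacturer.

```python
def average_unit_cost(fixed_cost: float, variable_cost: float, volume: int) -> float:
    """Average cost per unit: fixed cost spread over volume plus per-unit variable cost."""
    if volume <= 0:
        raise ValueError("volume must be positive")
    return fixed_cost / volume + variable_cost


# Hypothetical figures: USD 500 million of design and tooling cost,
# USD 2,000 of manufacturing cost per GPU
FIXED_COST = 500_000_000
VARIABLE_COST = 2_000

for volume in (10_000, 100_000, 1_000_000):
    cost = average_unit_cost(FIXED_COST, VARIABLE_COST, volume)
    print(f"{volume:>9,} units -> USD {cost:,.0f} per unit")
```

Under these assumptions, the per-unit cost falls from USD 52,000 at 10,000 units to USD 2,500 at one million units, because the fixed cost is spread over far more units.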

Opportunity

Advancements in server technology

Advancements in server technology to support machine learning (ML) and deep learning (DL) are emerging trends that are driving market growth. Data is crucial for an enterprise's decision-making process, and success depends on effective data analysis. Companies are increasingly using advanced technologies like big data, ML, and DL to analyze large datasets. To meet the computing demands of high-performance computing (HPC) workloads, data center GPU market companies are introducing servers with field-programmable gate arrays (FPGAs), application-specific integrated circuits (ASICs), and GPUs.

- In February 2024, Cisco and NVIDIA announced plans to deliver AI infrastructure solutions for the data center that are easy to deploy and manage, enabling the massive computing power that enterprises need to succeed in the AI era.

GPU Type Insights

The discrete GPUs segment held the largest share of 67.40% of the data center GPU market in 2024. A discrete GPU is a standalone graphics processor, separate from the CPU, that delivers high performance for demanding workloads such as cloud gaming, high-performance computing, and AI training. Because it relies on its own dedicated memory (VRAM), it offers substantial memory capacity and processing power, helping large companies store and process huge amounts of data.
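Why dedicated VRAM matters can be estimated with simple arithmetic: the memory needed to hold a model's weights is roughly the parameter count times the bytes per parameter. The Python sketch below assumes illustrative model sizes, an 80 GB card, and a 20% overhead margin; it ignores optimizer state and other training memory, so real requirements, especially for training, are higher.

```python
# Bytes needed to store one parameter at common numeric precisions
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weights_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_in_vram(num_params: float, dtype: str, vram_gb: float, overhead: float = 1.2) -> bool:
    """Rough check; `overhead` is an assumed 20% margin for activations and buffers."""
    return weights_memory_gb(num_params, dtype) * overhead <= vram_gb


print(weights_memory_gb(7e9, "fp16"))   # 14.0 (7B-parameter model in FP16)
print(fits_in_vram(7e9, "fp16", 80))    # True  (single 80 GB GPU)
print(fits_in_vram(70e9, "fp16", 80))   # False (needs multiple GPUs)
```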

The hybrid GPUs segment is expected to grow at a CAGR of 9.30% during the forecast period. Hybrid systems offer flexibility across many workload types. By combining GPU and CPU resources, they can handle very large workloads, particularly those that require both specialized parallel computing and general-purpose processing. Drawing on two processor types at once, a hybrid system can efficiently accomplish computational, technical, and general-purpose tasks, and vendors are enhancing its integration capabilities to compete in the data center GPU industry.

Application Insights

The AI and machine learning (ML) segment held the largest share of 38.90% of the data center GPU market in 2024. AI and ML have expanded significantly across many markets and are a major driver of growth. They are also transforming data center operations by improving efficiency and security: predictive maintenance analysis and other tools embedded in these applications help optimize performance. AI tools are meeting the needs of the global data center GPU industry to an unprecedented degree.
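As one simple illustration of maintenance analysis of this kind, the Python sketch below flags GPU temperature readings that deviate sharply from a trailing window using a z-score. The readings, window size, and 3-sigma threshold are hypothetical, and production monitoring systems may use considerably more sophisticated models.

```python
from statistics import mean, pstdev


def flag_anomalies(readings: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices whose reading lies more than `threshold` standard
    deviations from the mean of the preceding `window` readings."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), pstdev(history)
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical GPU temperature samples in degrees Celsius
temps = [65, 66, 64, 65, 66, 65, 64, 66, 88, 65]
print(flag_anomalies(temps))  # [8]
```

The `sigma > 0` guard skips perfectly constant windows, where a z-score is undefined; a real system would need a separate rule for that case.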

The blockchain and cryptocurrency mining segment is expected to grow at a CAGR of 10.10% during the forecast period. Cryptocurrency mining has gone through several transitions, and data centers that identified major issues with traditional mining setups have moved into crypto mining themselves. Blockchain offers benefits in security and fast data retrieval, and the steady progress of these applications is paving the way for data centers to develop and integrate them.
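Cryptocurrency mining suits GPUs because proof-of-work reduces to trying enormous numbers of independent hash inputs. The Python sketch below shows a simplified version of the technique using `hashlib.sha256` and a leading-zeros difficulty target. Bitcoin, for instance, actually applies double SHA-256 to a structured block header and compares the result against a numeric target, so treat this as a conceptual model only; the block string is a hypothetical placeholder.

```python
import hashlib


def mine(block_data: str, difficulty: int, max_nonce: int = 10_000_000) -> tuple[int, str]:
    """Find a nonce whose SHA-256 digest of block_data + nonce starts with `difficulty` hex zeros."""
    target_prefix = "0" * difficulty
    for nonce in range(max_nonce):
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest
    raise RuntimeError("no valid nonce found within max_nonce attempts")


nonce, digest = mine("block-1", difficulty=4)
print(nonce, digest)
```

Each nonce check is independent of the others, which is why a GPU can test thousands of candidates in parallel; raising `difficulty` by one hex digit multiplies the expected work by 16.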

End-user Insights

The cloud service providers (CSPs) segment held the largest share of 45.60% of the data center GPU market in 2024. The global cloud market is largely dominated by prominent players such as Microsoft Azure, Google Cloud Platform (GCP), and Amazon Web Services (AWS). CSPs provide real-time access to computing resources over the internet, so customers do not need to procure and maintain physical hardware themselves. This model lets customers focus on complex tasks and has kept CSPs in the leading position.

The financial services segment is expected to grow at a CAGR of 8.80% during the forecast period. Financial services firms are steadily becoming key users of data centers as they confront fraud and other challenges across vast volumes of data. Strengthening risk management and ensuring accuracy are essential goals for their data center operations.

Data Center Type Insights

The hyperscale data center segment held the largest share of 53.10% of the data center GPU market in 2024. Hyperscale data centers are very large facilities built to manage and process huge volumes of data, and they underpin large-scale online services and cloud computing. They typically house at least 5,000 servers, and their efficiency and reliability make them a preferred choice for large companies and businesses.

The colocation data centers segment is expected to grow at a CAGR of 8.90% during the forecast period. In a colocation arrangement, businesses do not build their own facilities; instead, they house their IT infrastructure, such as servers and networking equipment, in a third-party data center. This model is drawing growing attention in the data center GPU industry.

GPU Architecture Insights

The CUDA (NVIDIA's GPU architecture) segment held the largest share of 70.30% of the data center GPU market in 2024. CUDA is NVIDIA's parallel computing platform and programming model, which makes the performance of NVIDIA's GPUs available for general-purpose computing. Each NVIDIA GPU architecture is identified by a compute capability, which describes the hardware features and instructions it supports. This ecosystem has been a major force propelling the global data center GPU industry.
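Compute capability is written as a major.minor version. The Python sketch below maps a few well-known values to NVIDIA architecture names and checks a minimum requirement. The table is a partial, illustrative selection; in practice, the value for an installed GPU can be read with tools such as PyTorch's `torch.cuda.get_device_capability()`, which returns a `(major, minor)` tuple.

```python
# Compute capability (major, minor) -> architecture name, for a few
# data-center-relevant examples (not an exhaustive table)
ARCHITECTURES = {
    (6, 0): "Pascal",        # e.g. Tesla P100
    (7, 0): "Volta",         # e.g. Tesla V100
    (7, 5): "Turing",        # e.g. T4
    (8, 0): "Ampere",        # e.g. A100
    (8, 6): "Ampere",        # e.g. A10
    (8, 9): "Ada Lovelace",  # e.g. L4
    (9, 0): "Hopper",        # e.g. H100
}


def parse_capability(cc: str) -> tuple[int, int]:
    """Parse a string such as "8.6" into a comparable (major, minor) tuple."""
    major, minor = cc.split(".")
    return int(major), int(minor)


def architecture(cc: str) -> str:
    return ARCHITECTURES.get(parse_capability(cc), "unknown")


def meets_minimum(cc: str, minimum: str) -> bool:
    """Compare component-wise; a hypothetical "8.10" ranks above "8.9", unlike as a float."""
    return parse_capability(cc) >= parse_capability(minimum)


print(architecture("8.0"))          # Ampere
print(meets_minimum("7.5", "8.0"))  # False
```

Comparing tuples rather than floats keeps the check correct even if a minor version ever reaches two digits.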

The Xe architecture segment is expected to grow at a CAGR of 10.70% during the forecast period. Intel's Xe GPU architecture spans a family of designs for different market segments, which eases its integration into data centers, and it introduces new instructions to make fuller use of the GPU's compute resources. These developments and advancements are gradually helping Xe build a presence in the data center GPU industry worldwide.

Data Center GPU Market Companies

- NVIDIA Corporation

- Intel Corporation

- Advanced Micro Devices, Inc.

- Samsung Electronics Co., Ltd.

- Micron Technology, Inc.

- Advantech Co. Ltd.

- Alphabet Inc.

- Broadcom Inc.

- Fujitsu Ltd.

- Gigabyte Technology Co. Ltd.

Recent Developments

- In November 2023, AMD unveiled the Ryzen Embedded 7000 Series processor family, optimized for the high-performance demands of industrial markets, at Smart Production Solutions 2023. By combining integrated Radeon graphics with the "Zen 4" architecture, Ryzen Embedded 7000 Series processors offer performance and functionality not previously available in the embedded market.

- In November 2023, Imagination Technologies introduced IMG DXD, the first model in a new range of DirectX-compatible high-performance GPU IP. The new IMG DXD has the API coverage to run well-known PC games in addition to other Windows-based apps and mobile games, starting with a hardware-based version of DirectX 11.

- In September 2023, Cloudflare announced the deployment of Nvidia GPUs at the edge for generative AI inference in up to 300 data centers. With this deployment, Cloudflare aims to improve speed and performance while ensuring the delivery of high-quality services to its customers.

- In June 2023, Intel introduced its latest data center GPU, the Max-1550, designed specifically for AI applications. The GPU offers strong scalability, power efficiency, and performance, particularly in deep learning and inference tasks. It supports PCIe 5.0 host connectivity and Intel's Xe Link interconnect for GPU-to-GPU communication, providing flexibility for different system configurations.

Segments Covered in the Report

By GPU Type

- Discrete GPUs

- Integrated GPUs

- Hybrid GPUs

By Application

- AI and Machine Learning (ML)

- High-Performance Computing (HPC)

- Graphics Rendering

- Cloud Gaming

- Data Analytics and Big Data

- Virtualization

- Blockchain and Cryptocurrency Mining

- Enterprise Applications

By End-User

- Cloud Service Providers (CSPs)

- Telecommunications

- Financial Services

- Healthcare

- Retail

- Manufacturing

- Media & Entertainment

- Education & Research

By Data Center Type

- Hyperscale Data Centers

- Enterprise Data Centers

- Colocation Data Centers

By GPU Architecture

- CUDA (NVIDIA's GPU Architecture)

- RDNA and CDNA (AMD's GPU Architecture)

- Xe Architecture

By Geography

- North America

- Asia Pacific

- Europe

- Latin America

- Middle East & Africa

For inquiries regarding discounts, bulk purchases, or customization requests, please contact us at sales@precedenceresearch.com
