What Is the Data Center AI Chips Market Size?

This report covers the global data center AI chips market, including industry growth projections, key chip types, the competitive landscape, and the role of cutting-edge processors in powering AI-driven digital transformation. The market is growing strongly, driven by the rapid adoption of generative AI workloads, rising cloud investment, and increasing demand for high-performance, energy-efficient processing.

Market Highlights

- North America led the data center AI chips market with approximately 35% of the global market share in 2025.

- Asia Pacific is estimated to expand at the fastest CAGR between 2026 and 2035.

- By chip/product type, the CPUs segment contributed the largest market share in 2025.

- By chip/product type, the GPUs segment is growing at the highest CAGR between 2026 and 2035.

- By data center type/size, the hyperscale data centers segment accounted for the largest market share in 2025.

- By end-user/industry vertical, the IT and telecom segment held approximately 35% of the market share in 2025.

- By end-user/industry vertical, the healthcare and automotive segments are expected to grow at the fastest CAGR between 2026 and 2035.
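The highlights above rank regions and segments by compound annual growth rate (CAGR) over the 2026 to 2035 forecast period. For reference, the standard CAGR definition is shown below; the symbols $V_{\text{start}}$, $V_{\text{end}}$, and $n$ are generic notation introduced here for illustration, and the report does not state which start and end values it uses in its own calculations.

$$
\mathrm{CAGR} = \left(\frac{V_{\text{end}}}{V_{\text{start}}}\right)^{1/n} - 1
$$

Here $V_{\text{start}}$ and $V_{\text{end}}$ are the market values at the beginning and end of the period and $n$ is the number of years between them. As a purely hypothetical example, a segment that doubles in value over 10 years has $\mathrm{CAGR} = 2^{1/10} - 1 \approx 7.2\%$.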

What are the Main Factors Contributing to the Current Development of AI Chips?

A data center AI chip is a specialized processor that accelerates AI training and inference workloads, enabling large-scale computing environments to perform the complex computations required to train and run AI models faster and more efficiently.

Hyperscalers are transitioning to higher-performing, more efficient architectures that can accommodate the rapid growth of generative AI and large language models (LLMs) as well as their expanding cloud-based automation needs. In response, the industry is accelerating the introduction of new GPUs, ASICs, and other AI accelerators that improve both performance and efficiency, in terms of energy consumption as well as chip density. The continued growth of liquid cooling, chiplet-based designs, and advanced packaging technologies is driving the next generation of AI infrastructure.

As governments increase investment in semiconductor manufacturing facilities and stronger security measures are developed for processing sensitive data, the continued adoption of on-premises AI training clusters, edge-to-core integration, and higher computing density will support the long-term scalability of the data center AI chips market.

The Data Center AI Chips Market Is Influenced by a Variety of Factors

- Domain-Specific Accelerators: The increasing use of AI chips that are designed for specific application workloads, such as large language model training, inference, and recommendation processing, will improve compute efficiency, reduce latency, and enable hyperscale operators to better handle the complexity of rapidly evolving AI models within their data centers.

- Energy-Efficient Chip Architectures: As data center power requirements rise, there is growing interest in ultra-energy-efficient AI chip designs that significantly reduce energy consumption, improve thermal stability, and support sustainability goals without sacrificing performance in ongoing, large-scale AI deployments.

- Chiplet and 3D Packaging Designs: The transition to chiplet-based designs and the growing adoption of advanced 3D packaging have increased bandwidth, compute density, and scalability, allowing data centers to process AI workloads faster and to integrate flexible, modular high-performance architectures.

- Liquid-Cooling-Compatible Chips: As the amount of heat produced by AI workloads increases, demand is growing for chips that are optimized for liquid and immersion cooling, providing optimal performance, minimizing the risk of overheating, and allowing for reliable completion of demanding AI training tasks.

What Role Do Ground-Breaking Chip Designs Play in the Evolution of the Data Center AI Chips Market?

The ever-increasing demand for computational speed and efficiency, combined with the mounting complexity of artificial intelligence models, has driven the rapid proliferation of next-generation architectures built on heterogeneous computing, such as combined CPU/GPU designs, specialized processors, and memory-centric designs, which process parallelized workloads with minimal latency.

- In October 2025, Qualcomm was among the leaders of this technological expansion, introducing the AI200 and AI250, two AI accelerators designed for large-scale AI inference workloads in data centres.

On the infrastructure side, power delivery to data centres has also undergone a significant upgrade. In May 2025, Infineon Technologies announced a collaboration with NVIDIA on an 800V high-voltage direct current (HVDC) architecture that replaces today's 54V and 48V data centre power systems with centralised, high-efficiency power distribution delivered directly to GPUs. This upgrade allows data centres to run significantly denser AI workloads, reduces energy loss, and helps ensure a reliable supply to server racks drawing several hundred kilowatts or more.

Together, these advances in next-generation AI accelerators and power infrastructure will allow data centres to run ever-larger models, deliver high-performance AI inference, and scale quickly and sustainably to meet future AI demand.
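The efficiency case for moving from 54V to 800V distribution follows from basic circuit arithmetic: for a fixed power P, current falls as I = P / V, and resistive (I²R) loss in a conductor of fixed resistance falls with the square of that current. The Python sketch below illustrates this for a hypothetical 500 kW rack and an assumed conductor resistance. Both figures are illustrative assumptions, not Infineon or NVIDIA specifications, and the sketch ignores power-conversion losses entirely.

```python
"""Back-of-the-envelope comparison of distribution current and resistive loss
for a hypothetical rack fed at 54 V DC versus 800 V DC. Illustrative only."""


def current_amps(power_watts: float, voltage_volts: float) -> float:
    """Current drawn for a given DC power at a given voltage (I = P / V)."""
    return power_watts / voltage_volts


def resistive_loss_watts(power_watts: float, voltage_volts: float,
                         resistance_ohms: float) -> float:
    """Conduction loss in a conductor of fixed resistance (P_loss = I^2 * R)."""
    i = current_amps(power_watts, voltage_volts)
    return i * i * resistance_ohms


RACK_POWER_W = 500_000      # assumed rack load of 500 kW (hypothetical)
CONDUCTOR_R_OHMS = 0.0001   # assumed distribution-path resistance (hypothetical)

for volts in (54, 800):
    amps = current_amps(RACK_POWER_W, volts)
    loss = resistive_loss_watts(RACK_POWER_W, volts, CONDUCTOR_R_OHMS)
    print(f"{volts:>4} V: {amps:8.0f} A, conduction loss {loss / 1000:6.2f} kW")

# Same power and same conductor, so the ratio reduces to (800 / 54) ** 2.
ratio = (resistive_loss_watts(RACK_POWER_W, 54, CONDUCTOR_R_OHMS)
         / resistive_loss_watts(RACK_POWER_W, 800, CONDUCTOR_R_OHMS))
print(f"Loss ratio, 54 V vs 800 V: {ratio:.0f}x")
```

Because both the load and the resistance cancel out, the roughly 219x loss ratio holds for any values under this same-conductor assumption; real-world savings depend on how conductors are resized and on converter efficiency.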

Data Center AI Chips Market Outlook

Countries and regions such as the U.S., the EU, Japan, and South Korea are expanding their data centre capacity to support the growing use of optimized chips within their borders.

Rising data centre electricity demand has led to the adoption of liquid cooling, upgraded substations, and higher-density racks, which in turn require more energy-efficient AI accelerators.

Rapidly increasing demand for training and inference of state-of-the-art AI models is driving data centres to use specialized AI chips such as GPUs, TPUs, and custom ASICs to remain efficient.

U.S. and EU energy regulators are warning of severe pressure on the grid from growing computational loads, which is driving the adoption of energy-optimized AI chips that reduce per-workload energy consumption and improve data centre sustainability.

Market Scope

| Report Coverage | Details |

| Dominating Region | North America |

| Fastest Growing Region | Asia Pacific |

| Base Year | 2025 |

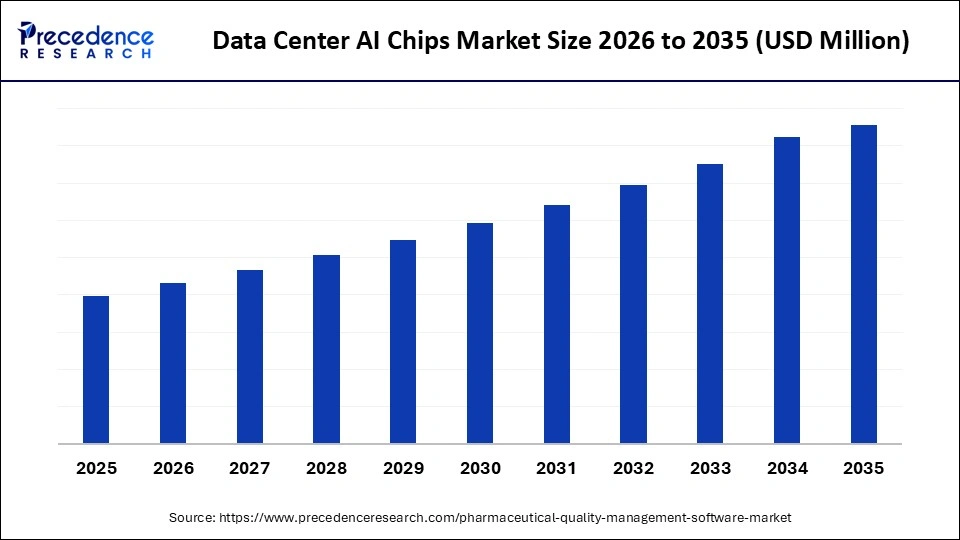

| Forecast Period | 2026 to 2035 |

| Segments Covered | Service Type, Building Type/End-User, Building Systems Focus, and Region |

| Regions Covered | North America, Europe, Asia-Pacific, Latin America, and Middle East & Africa |

Data Center AI Chips Market Segment Insights

Chip / Product Type Insights

CPUs: This segment remains dominant because CPUs are foundational to all existing data center architectures and have the largest installed base globally. CPUs can also run a wide range of workloads, orchestrate data center operations, and handle distributed processing tasks. As a result, they remain indispensable to enterprises even as highly specialized AI chips rapidly augment them. Enterprises continue to rely on x86-based CPUs to manage systems, create virtual machines, allocate memory, and perform the other basic computing functions that make AI accelerators usable.

GPUs: This segment is growing the fastest in the data center AI chips market, driven by the rapidly increasing number and size of models and the workloads needed to train them and run inference. Thanks to massive parallel processing, GPUs significantly outperform CPUs when training large language models (LLMs), running generative AI workloads, and performing deep learning operations. Rapid advances in high-bandwidth memory (HBM) and interconnect technologies have led AWS and other large-scale cloud providers to deploy more GPUs than any other chip type, making GPUs the fastest-growing chip category.

Data Center Type / Size Insights

Hyperscale Data Centers: This segment dominated all other data center types in 2025, as hyperscale facilities have the largest infrastructure footprint and increasingly global coverage through multi-site deployments. They can also support AI workloads across clouds at a scale not previously possible. Additionally, hyperscale data centers use high-density racks, advanced cooling technologies, and proprietary chip designs, making them the leading consumers of AI chips.

As the cloud marketplace continues to develop methods for delivering and processing data at scale, hyperscale facilities have seen the steepest growth in AI-related workloads. With the rapid growth of AI, demand for hyperscale data centers has outpaced every other segment, and the need for ever more GPU nodes, high-speed networks, and custom chips (such as TPUs and NPUs) makes hyperscale the fastest-growing segment of the cloud marketplace.

Colocation Data Centers: The colocation data centers segment holds a substantial and accelerating share of the data center AI chips market as enterprises shift away from high-cost on-premises deployments toward shared, professionally managed, and AI-ready digital infrastructure. In practice, colocation operators have begun designing purpose-built high-density halls that support GPU clusters with power densities of 40 to 80 kW per rack, a requirement now common for workloads involving LLM training, inference at scale, and multimodal analytics. Operators in the United States, Germany, Singapore, and Japan have already announced expansions of liquid cooling corridors and power-dense zones to host NVIDIA H100/H200, AMD MI300X, and emerging custom ASIC-based clusters, reflecting a strong supply-side commitment to AI-specific colocation demand.

Growth has further accelerated because colocation providers are becoming strategic partners in enterprise AI adoption. Many enterprises adopt AI chips first through colocation environments because they gain proximity to cloud on-ramps, dark-fiber interconnections, and edge facilities without major capital expenditure. In 2025, several colocation operators in North America and Europe integrated direct-to-chip liquid cooling and high-voltage DC power architectures to accommodate AI chip clusters.

This trend aligns with the rising thermal and electrical demands of modern accelerators. Taken together, the segment is evolving from simple space-and-power leasing to becoming a core infrastructure layer that enables enterprises to operationalize AI models, manage higher compute density, and scale workloads at predictable cost.
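To put the 40 to 80 kW per-rack densities cited for colocation halls into perspective, the Python sketch below estimates the IT load and total facility load of a hypothetical 100-rack hall. The hall size and the power usage effectiveness (PUE) of 1.2 are illustrative assumptions, not figures from any operator; PUE is used in its standard sense of total facility power divided by IT equipment power.

```python
"""Rough sizing of the power draw of a hypothetical AI colocation hall
at 40 and 80 kW per rack. All inputs are illustrative assumptions."""


def hall_it_load_mw(racks: int, kw_per_rack: float) -> float:
    """Total IT load in megawatts for `racks` racks at `kw_per_rack` each."""
    return racks * kw_per_rack / 1000


def facility_load_mw(it_load_mw: float, pue: float) -> float:
    """Facility power including cooling and overhead (PUE = facility / IT)."""
    return it_load_mw * pue


RACKS = 100          # hypothetical hall size
ASSUMED_PUE = 1.2    # hypothetical PUE for a liquid-cooled hall

for density_kw in (40, 80):
    it_mw = hall_it_load_mw(RACKS, density_kw)
    total_mw = facility_load_mw(it_mw, ASSUMED_PUE)
    print(f"{density_kw} kW/rack: IT load {it_mw:.1f} MW, "
          f"facility load ~{total_mw:.1f} MW")
```

Under these assumptions, doubling rack density from 40 kW to 80 kW doubles the hall's IT load from 4 MW to 8 MW without adding floor space, which is why dense halls are paired with upgraded power delivery and liquid cooling.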

End-User / Industry Vertical Insights

IT & Telecom: IT and telecommunications leads the data center AI chips market because of its close ties to cloud infrastructure, data center ownership, and the need to scale compute resources. As a result, the sector is paving the way for mass adoption of AI in areas such as workload orchestration, network automation, traffic forecasting, and cloud-based service delivery. With large numbers of GPUs, CPUs, and accelerators deployed across global data centres, the IT and telecommunications industries are the largest consumers of AI chips.

Healthcare & Automotive: The healthcare and automotive industries are growing rapidly as they adopt AI workloads, including AI-based medical imaging, diagnostic automation, AI-enabled drug modeling, autonomous-driving compute architectures, and vehicle-to-cloud processing. Increased investment in high-performance computing clusters, edge inference systems, and real-time AI decision-support frameworks is further accelerating the adoption of advanced AI chip solutions in both sectors.

Data Center AI Chips Market Regional Insights

The North American region continues to dominate the data centre AI chip market. Thanks to an abundance of hyperscale companies and advanced chip designers, as well as a robust ecosystem of cloud infrastructure operators, North America has become home to the most mature AI computing ecosystem in the world. Rapid innovation in AI accelerator technology has been accompanied by large-scale adoption across major data centre regions like Northern Virginia, Oregon, and California's Silicon Valley.

Strong access to capital, robust power-access planning, and close integration of software and hardware development have enabled rapid adoption of next-generation GPUs, TPUs, and custom accelerators. At the same time, North America plays a leading role in the regulations governing advanced chip exports, further solidifying its position as the predominant site for initial AI model training and the expansion of high-density computing resources.

The United States dominates this region due to its large hyperscale cloud footprint, the depth of its semiconductor R&D capabilities, and the consistent rollout of AI-dense server clusters. U.S. data centre hubs combine high-capacity fibre-optic networks, abundant power, and a skilled engineering workforce, making the country the central hub for developing and scaling frontier AI systems.

Asia Pacific is the fastest-growing region for data center AI chips, driven by expanding digital programs, cloud investment, and a large number of businesses adopting artificial intelligence. Countries across the region have created large-scale compute hubs, upgraded power availability, and modernized their network backbones to support large deployments of GPUs and other accelerators. Government programs, semiconductor technology development, and growing local AI ecosystems have encouraged operators to build large hyperscale campuses in countries such as India, China, Japan, Singapore, and Australia. This coordination between government programs and cloud demand makes Asia Pacific the fastest-growing region for new AI computing builds.

China Data Centre AI Chips Market Trends

China leads the Asia-Pacific region owing to its vast government-sponsored digital infrastructure, rapid data center construction, and growing demand from local cloud providers and AI companies. China has steadily increased its GPU capacity by building hyperscale campuses, expanding domestic chip development, and investing heavily in national compute programs. As a result, China is the largest, most resource-rich, and most influential market in the Asia-Pacific region.

- In October 2025, China Unicom launched a US$390 million AI data centre powered by 23,000 domestically manufactured processors. The company develops custom chips for cloud computing and AI applications.

The European data center AI chip market is growing steadily and strategically as countries continue to invest heavily in high-density compute infrastructure while balancing energy, regulatory, and sustainability concerns. Key hubs include Frankfurt, Paris, Amsterdam, and Dublin, which are working to expand power availability, fiber routes, and AI-capable colocation capacity.

The EU's various digital programs and national initiatives are providing investment funding to European countries for sovereign compute and semiconductors, as well as efficient, high-performance data centres. The region is working to build reliable, low-latency infrastructure that can accommodate rapidly growing enterprise artificial intelligence workloads in sectors like manufacturing, automotive, financial services, and public research, while adhering to strict environmental and data protection rules.

Germany Data Centre AI Chips Market Trends

Germany remains the largest market for data centres and AI infrastructure in Europe, supported by one of the continent's most concentrated and mature data-centre clusters in the Frankfurt metropolitan area. The Frankfurt Rhine-Main region hosts hyperscale campuses operated by global providers such as Equinix, Digital Realty, and CyrusOne, making it the core interconnection hub of continental Europe and a central landing point for major fibre routes. Germany's deep engineering culture and strong industrial ecosystem, particularly in automotive, manufacturing automation, and enterprise software, are accelerating demand for AI-optimised chips to support computer vision, predictive maintenance, digital twins, and other industrial AI workloads.

The country is actively transitioning toward high-density, grid-efficient data centre designs that can support large clusters of GPU- and accelerator-based compute. Federal and state-level initiatives promoting energy-efficient digital infrastructure, combined with ongoing research investments from institutions such as Fraunhofer and leading universities, are strengthening Germany's capacity to develop next-generation AI compute capabilities.

The Middle East & Africa region is emerging quickly in the data centre AI chips market. Public- and private-sector investment in AI-capable infrastructure has surged to support the deployment of AI workloads across a wide range of industries, and government leaders and agencies across the region are directing greater capital investment into AI infrastructure.

Many of these developments are occurring in prime locations and are also benefiting from favourable government policy and support from major global hyperscale companies, including Microsoft, IBM, and Amazon. This region's growth is largely driven by new development projects and a greater number of AI-based workloads deployed to the cloud via edge computing solutions.

The UAE leads this region, with ambitious state-supported initiatives, a strong focus on very large GPU campus developments, and close partnerships with global cloud and semiconductor providers. Thanks to substantial investment by both Abu Dhabi and Dubai in sovereign computing capacity, very large-scale GPU infrastructure, and new data centre developments in the Digital Innovation Zone, the UAE has emerged as the primary hub for advanced AI deployments in the MEA region.

Latin America continues to strengthen as a growth market, driven by rising cloud adoption and the expansion of digital platforms to support next-generation AI workloads. Telecom providers and governments in the region may invest in rapidly modernizing data centers, integrating renewable power, and building out network infrastructure to support high-density GPU and accelerator deployments. Multinational cloud providers are creating availability zones in key markets, reducing latency and making it possible to train AI models locally. While growth will vary by country, demand for AI in financial services, e-commerce, smart cities, and digital public services will remain steady across Latin America.

Brazil Data Centre AI Chips Market Trends

Brazil has emerged as the leading market in Latin America for AI-ready data center infrastructure, driven by sustained government incentives, a maturing cloud ecosystem, and rapid enterprise digital transformation. The country hosts the region's highest concentration of colocation facilities and cyber-task architectures, supported by expanding hyperscale footprints from AWS, Microsoft Azure, and Google Cloud in São Paulo and other metropolitan hubs. These deployments are reinforced by Brazil's ongoing national digitalization agenda, which prioritizes AI adoption across public services, finance, agriculture, and industrial automation.

Brazil's fiber-optic backbone development and the increasing deployment of FEMS (Facilities Energy Management Systems) have strengthened its ability to support dense AI compute clusters that require consistent power delivery and high-bandwidth connectivity. As enterprises adopt cloud-native operations, generative AI tools, and high-performance analytics, demand for advanced AI chips continues to rise.

Data Center AI Chips Market Companies

- H100 and Blackwell B200 GPUs powering large-scale AI training.

- Instinct MI300X accelerators optimized for HPC and generative AI.

- Gaudi 3 accelerators offering efficient training and inference performance.

- TPU v5p processors enabling high-performance cloud-scale AI workloads.

- Trainium2 and Inferentia2 chips built for scalable generative AI in the cloud.

- Maia 100 data-center AI chip designed for large model training.

- Manufactures advanced AI chips for global leaders such as NVIDIA and AMD.

- Tomahawk and Jericho networking ASICs supporting large AI cluster connectivity.

- High-bandwidth memory (HBM) solutions powering next-generation AI accelerators.

- Focuses on consumer SoCs; limited presence in data-center AI chips.

Recent Developments and Breakthroughs in the Data Center AI Chips Market

- In November 2025, Microsoft launched its Arm-based Cobalt 200 CPU for Azure, which delivers a 50% performance boost over its predecessor and is optimized for cloud workloads, data-center efficiency, and security. (Source: https://www.datacenterknowledge.com)

- In November 2025, Qualcomm entered the data-center AI chip market with its new AI200 and AI250 accelerator cards and rack systems, aiming to compete with NVIDIA in inference workloads and offering lower power consumption plus high memory bandwidth. (Source: https://www.cxodigitalpulse.com)

- In October 2025, Broadcom unveiled its new networking chip, Thor Ultra, designed to interconnect massive AI compute clusters, challenging NVIDIA's dominance in data-center networking for large-scale AI deployments. (Source: https://www.thehindu.com)

- In October 2025, Arm joined the Open Compute Project (OCP) board and introduced a vendor-neutral chiplet standard (FCSA) to enable open, modular AI data centers and reduce dependency on proprietary server designs. (Source: https://www.techzine.eu)

Data Center AI Chips Market Segments Covered in the Report

By Chip / Product Type

- CPU

- GPU

- ASIC

- FPGA

- AI Accelerators (NPUs, TPUs, custom accelerators)

By Data Center Type / Size

- Hyperscale Data Centers

- Enterprise / Large Data Centers

- Edge / Micro Data Centers

- Colocation Data Centers

By End-Use / Industry Vertical

- IT & Telecom

- BFSI

- Healthcare

- Retail & E-commerce

- Manufacturing

- Government & Defense

- Automotive

- Energy & Utilities

By Region

- North America

- Europe

- Asia-Pacific

- Latin America

- Middle East & Africa

For inquiries regarding discounts, bulk purchases, or customization requests, please contact us at sales@precedenceresearch.com
