What is the Advanced AI Processors Market Size?

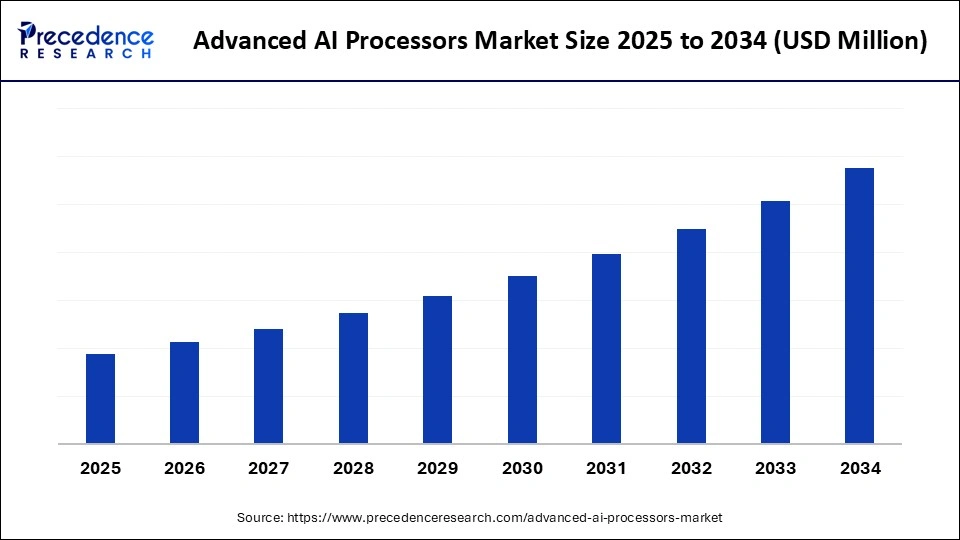

The global advanced AI processors market is projected to experience robust growth as demand mounts for next-generation compute solutions across cloud, edge, and enterprise systems. The market is driven by increasing demand for high-performance computing, AI-powered applications, and energy-efficient data processing across sectors such as automotive, healthcare, and cloud computing.

Market Highlights

- By region, North America dominated the market with the largest share of 40% in 2024.

- By region, Asia Pacific is expected to grow at the fastest rate over the forecast period.

- By processor type, the graphics processing units (GPUs) segment led the market, holding a 60% share in 2024.

- By processor type, the application-specific integrated circuits (ASICs) segment is expected to grow at the fastest CAGR over the forecast period.

- By application, the deep learning segment captured a 45% revenue share in 2024.

- By application, the natural language processing segment is expected to grow at the fastest rate in the market over the forecast period.

- By end-user industry, the information technology & telecommunications segment led the market while holding a 35% share in 2024.

- By end-user industry, the healthcare segment is expected to show considerable growth in the market over the forecast period.

- By deployment type, the cloud-based AI processors segment held a 50% share in the market in 2024.

- By deployment type, the edge-based AI processors segment is expected to show the fastest growth in the market over the forecast period.

What are Advanced AI Processors?

The growth of AI applications across industries has intensified demand for high-performance, energy-efficient computing systems that can handle real-time analytics, predictive modeling, and the other complex computations required by neural networks. Unlike conventional processors, advanced AI processors are designed specifically for the workloads associated with deep learning, neural network training, natural language processing, and computer vision. The main categories are Graphics Processing Units (GPUs), Application-Specific Integrated Circuits (ASICs), Field-Programmable Gate Arrays (FPGAs), and AI-optimized Central Processing Units (CPUs).

These processors offer high processing speed, energy efficiency, and parallel computation, which allows organizations to process large amounts of data in real time. As AI-driven applications in fields such as healthcare, autonomous vehicles, robotics, fintech, and cloud computing continue to multiply, demand for highly efficient AI processors has surged. The build-out of digital infrastructure, government initiatives, and the growing importance of edge computing also drive market growth.

Advanced AI Processors Market Outlook

- Market Growth Overview: The advanced AI processors market is poised for rapid growth between 2025 and 2034, driven by widespread AI adoption and the growing need for high-performance computing. Continuous advancements in GPUs, ASICs, and FPGAs are enhancing the efficiency of deep learning, cloud, and edge AI applications.

- Global Expansion: Expansion in emerging regions supports the growth of the market by opening up new demand centers driven by digital transformation, smart infrastructure, and government-backed AI initiatives. As industries in these regions adopt AI technologies across various sectors, including healthcare, agriculture, and manufacturing, the demand for cost-effective, scalable AI processors increases significantly, driving market growth.

- Key Investors: Corporate R&D, venture capital firms, and government-backed AI initiatives are heavily investing in processor innovation. Major players, including NVIDIA, Intel, AMD, Huawei, and Google, are focusing on developing AI chips for edge computing and cloud-based solutions, driving significant investments.

- Startup Ecosystem: A vibrant startup landscape is fueling innovation in the AI processor space. Companies like Cerebras, Graphcore, and Groq are developing specialized solutions in deep learning and edge AI, supported by substantial funding and strategic partnerships.

What Factors are Boosting the Growth of the Advanced AI Processors Market?

- Technological Advancements: Continuous innovation in GPU, ASIC, and FPGA architectures is improving processing speed and parallel computation while reducing power consumption, which enables AI processors to support more advanced applications and process rapidly growing datasets.

- IoT Growth and Edge Computing: The growth of edge AI and IoT devices requires low-latency, energy-efficient AI processors that can process data and make decisions in real time at the device level, reducing reliance on centralized computing resources.

- Cloud Investments: Investments in cloud computing and data center infrastructure are increasing demand for advanced AI processors, which enable providers to serve large-scale AI workloads, train models significantly faster, and execute real-time analytics with greater performance and scalability.

Key Technological Shifts in the Advanced AI Processors Market

The rapid integration of artificial intelligence across industries is transforming the advanced AI processors market, creating a growing demand for specialized computing solutions. The rise of edge AI, particularly in autonomous vehicles, smart devices, and industrial automation, has underscored the need for energy-efficient, low-latency processors that enable real-time decision-making. AI accelerators are also becoming integral to cloud platforms, supporting the growth of AI-as-a-Service (AIaaS) and enabling scalable adoption for businesses. Additionally, the expansion of government initiatives, increased R&D investments, and competitive innovation among major semiconductor firms are accelerating the development of next-generation AI chips.

Market Scope

| Report Coverage | Details |
| --- | --- |
| Dominating Region | North America |
| Fastest Growing Region | Asia Pacific |
| Base Year | 2025 |
| Forecast Period | 2025 to 2034 |
| Segments Covered | Processor Type, Application, End-User Industry, Deployment Type, and Region |
| Regions Covered | North America, Europe, Asia-Pacific, Latin America, and Middle East & Africa |

Market Dynamics

Drivers

Rising Demand for AI Applications Across Industries

The increasing use of artificial intelligence across industries is a significant driver of the advanced AI processors market. The automotive, healthcare, financial, and retail industries are embracing AI to improve efficiency, decision-making, and customer experience. In the automotive industry, AI processors enable real-time processing of data in autonomous vehicles, supporting navigation, safety control, and predictive maintenance.

In healthcare, advanced AI processors support robotic surgery, medical imaging, and drug discovery, where complex datasets must be processed precisely and with minimal delay. Financial institutions use AI processors for fraud detection, risk management, and algorithmic trading, while retail companies use them to provide personalized suggestions, optimize inventory, and forecast demand.

Restraint

Supply Chain Disruptions and Chip Shortages

Geopolitical tension, trade limitations, and import and export restrictions affect the timely delivery of high-demand inputs, particularly silicon wafers and other scarce chip materials. Manufacturing delays caused by natural disasters, factory closures, or global events such as lockdowns further intensify production constraints. The extreme complexity of fabricating AI processors, combined with limited capacity to produce advanced nodes at volume, creates bottlenecks in market development. Moreover, fluctuating raw material prices and competition among technology industries for a limited semiconductor supply raise production costs and delay product availability. These issues affect the timely delivery of AI processors and also influence pricing, R&D deployment, and adoption rates.

Opportunity

Expansion of Edge AI and Low-Power Processors

The major opportunity in the advanced AI processors market lies in the rapid growth of edge AI computing, in which data processing moves closer to the source of the data instead of relying on centralized cloud computing. Edge AI can reduce latency by processing data locally, making real-time decisions feasible for autonomous vehicles, robotics, smart cameras, and industrial automation. This localized approach also reduces bandwidth consumption and the need for high-speed internet connections, making AI solutions more efficient and resilient. Furthermore, edge computing enhances data privacy and security, as sensitive information does not need to be sent to a remote server for processing. As additional sectors implement edge AI within their businesses, it represents a significant growth prospect for AI processor vendors globally.

Segment Insights

Processor Type Insights

What Made GPUs the Dominant Segment in the Advanced AI Processors Market?

The graphics processing units (GPUs) segment dominated the market with a 60% share in 2024 due to their high performance and computational power. GPUs are especially suitable for training and inference of deep learning models, allowing large datasets to be processed faster than on traditional CPUs. They are also versatile and can handle various types of AI workloads, including natural language processing, computer vision, and autonomous systems. In addition, key technology suppliers like NVIDIA and AMD have been steadily improving GPU architectures to achieve better energy efficiency, memory bandwidth, and AI-optimized performance. The increased use of AI in cloud computing, self-driving cars, healthcare, and financial applications has further contributed to the demand for GPUs.

The application-specific integrated circuits (ASICs) segment is expected to grow at the highest CAGR over the forecast period due to their ability to deliver highly optimized, application-specific performance for AI workloads. ASICs are designed to execute particular applications, such as neural network inference, cryptocurrency mining, or edge AI, and for those tasks they are more energy-efficient and faster than general-purpose GPUs. The growing use of AI in data centers, self-driving cars, and IoT devices is driving the need for specialized, low-latency ASIC solutions. ASIC adoption is accelerating further with technological advancements and growing investment in custom chips by major semiconductor companies.

Application Insights

Why Did the Deep Learning Segment Lead the Market in 2024?

The deep learning segment led the advanced AI processors market with a 45% share in 2024. This is primarily due to its high computational intensity and broad industrial adoption. Deep learning models, such as those used in image recognition, autonomous driving, predictive analytics, and recommendation systems, require massive parallel processing capabilities and the ability to handle large-scale data. Advanced processors, such as GPUs, TPUs, and specialized AI accelerators, best meet these needs.

Additionally, continuous advancements in neural network architectures (e.g., convolutional and recurrent neural networks) have significantly improved model accuracy and efficiency, further accelerating adoption. The surge in AI-driven automation across sectors such as healthcare, finance, retail, and autonomous systems, alongside strong investments in AI R&D and cloud-based AI platforms, has reinforced the demand for high-performance processors tailored to deep learning workloads.

The natural language processing segment is expected to grow at the fastest rate in the upcoming period due to the surging demand for real-time, AI-driven language understanding and communication technologies. NLP applications, such as chatbots, virtual assistants, sentiment analysis, machine translation, and voice recognition, require large-scale processing of complex language models like transformers (e.g., GPT, BERT), which are highly computationally intensive.

The segment growth is further driven by the widespread integration of NLP tools across customer service, healthcare documentation, financial services, and content moderation, all of which rely on high-performance AI processors to deliver accurate, real-time responses. Moreover, advancements in multilingual models and the adoption of edge and cloud-based NLP solutions have contributed to the segment's rapid expansion, enabling scalable, low-latency deployment across diverse industries.

End-User Industry Insights

Why Did the Information Technology & Telecommunications Segment Dominate the Market in 2024?

The information technology & telecommunications segment dominated the market with a 35% share in 2024. FPGAs, ASICs, and GPUs are fundamental building blocks of cloud computing, data centers, and telecommunication networks, all of which must handle vast amounts of data in real time. Applications such as predictive analytics, network optimization, cybersecurity, and AI-based virtual assistants depend on high-speed, energy-efficient processors because they require low-latency, accurate output.

Demand has also increased with the development of 5G networks, cloud-based solutions, and enterprise AI solutions. Large IT and telecommunications companies are also spending heavily on AI infrastructure to expand their computational capacity and improve the efficiency of machine learning workloads.

The healthcare segment is expected to grow at a significant CAGR over the forecast period. This is primarily due to the growing adoption of AI technologies in the healthcare industry, particularly in applications such as diagnostics, medical imaging, robotic surgery, and personalized medicine. Advanced AI processors can be used to analyze large clinical datasets, genome sequencing, predictive models, and patient risk analysis in real-time, thereby enhancing the speed and accuracy of diagnosis and treatment planning.

The increase in investment in AI-based healthcare applications, the growing demand for efficient medical services among patients, and the implementation of AI in telemedicine applications and hospital administration systems are the major growth factors. Adoption is further supported by the need for high-performance processors that consume less energy when running large-scale neural networks and deep learning models in healthcare research and drug discovery.

Deployment Type Insights

Why Did the Cloud-Based AI Processors Segment Hold the Largest Market Share in 2024?

The cloud-based AI processors segment held a 50% share of the advanced AI processors market in 2024, as cloud-based AI processors enable companies to scale their data processing operations, train more complex deep learning models, and deploy AI applications with minimal physical infrastructure. These processors offer scalable computing, high performance, parallel processing, and centralized control, enabling organizations to process large datasets efficiently. Sectors adopting cloud AI include the IT, finance, healthcare, and retail industries, which use it for predictive analytics, virtual assistants, fraud prevention, and personalized suggestions. The ease of use, affordability, and availability of cloud-based AI processors have sustained their popularity with businesses worldwide and kept the segment in the lead.

The edge-based AI processors segment is expected to grow at a rapid pace in the coming years because of the growing demand for real-time, low-latency AI processing close to where data is generated. Edge AI processors are used in autonomous vehicles, drones, smart cameras, Internet of Things (IoT) devices, and industrial robots. They can make decisions in real time without relying on a centralized cloud server. They are energy-efficient, small-form-factor, high-performance processors that compute data locally, minimizing bandwidth use and latency.

The adoption of edge AI processors is gaining momentum due to the emergence of IoT environments, smart cities, smart medical devices, and automation of industrial environments. Additionally, there is a growing focus on data privacy, security, and regulatory compliance, which promotes the local processing of data at the edge.

Regional Insights

Why Did North America Dominate the Global Advanced AI Processors Market?

North America dominated the global market with the highest market share of 40% in 2024. This dominance is driven by a mature technology ecosystem, widespread adoption of AI solutions, and the strong presence of leading semiconductor and tech companies such as NVIDIA, AMD, Intel, and Google. These players are at the forefront of AI processor innovation, particularly in GPUs, ASICs, and AI accelerators.

The region's growth is further supported by significant R&D investments, robust government backing for AI initiatives, and widespread use of high-performance computing (HPC) across industries. A well-developed IT infrastructure, extensive cloud computing capabilities, and strong enterprise demand for AI across sectors like healthcare, automotive, finance, and telecommunications also contribute to market strength.

The U.S. is a major contributor to the North American advanced AI processors market due to a high concentration of venture capital in AI startups, strong university-industry partnerships, and advanced chip manufacturing capabilities. Additionally, supportive government policies, such as investments in AI R&D, infrastructure, and defense-related AI applications, further bolster the region's leadership in AI processor development and commercialization.

Why is Asia Pacific Considered the Fastest-Growing Region in the Advanced AI Processors Market?

Asia Pacific is expected to experience the fastest growth throughout the forecast period. The regional market growth is driven by rapid technology adoption, a growing digital ecosystem, and substantial investments in AI research and development. Countries like China, Japan, South Korea, and India are expanding the use of AI-driven applications across healthcare, automotive, finance, and intelligent manufacturing sectors. The rising deployment of cloud computing, edge AI, and IoT-based systems is fueling demand for high-performance processors such as GPUs, ASICs, and FPGAs. Market expansion is further supported by favorable government policies, national AI strategies, and growing private sector investments aimed at enhancing AI capabilities.

China is the leading contributor within the region, backed by its strong focus on AI infrastructure, HPC capabilities, and dedicated AI chip research institutions. Major Chinese tech giants like Huawei, Baidu, Alibaba, and Tencent are actively developing and deploying custom AI chipsets for cloud services, autonomous driving, and industrial automation. With expanding applications in smart cities, consumer electronics, and enterprise solutions, China continues to be a key growth engine in the Asia Pacific AI processors market.

Country-Level Investments & Funding Trends

- U.S.: The U.S. is the largest investor in AI processors, with substantial private and government investment in companies such as NVIDIA, Intel, and AMD. Initiatives in cloud AI, autonomous vehicles, and defense applications have driven growth, alongside venture capital support for AI startups and the development of advanced GPUs, ASICs, and edge AI processors.

- China: China is rapidly investing in AI processors, primarily focusing on local innovation through companies such as Huawei, Alibaba, and Baidu. The government has used programs and strategic alliances to support AI chip design, cloud AI infrastructure, and edge computing, enabling the development of high-performance AI processors and accelerating their implementation in healthcare, smart cities, and autonomous systems.

- South Korea: South Korea invests heavily in AI processors through major companies such as Samsung and SK Hynix. Funded activities focus on AI-optimized memory, edge computing chips, and high-performance GPUs. AI adoption has accelerated with government support, public-private partnerships, and smart city initiatives, which are strengthening the country's semiconductor and AI technology ecosystem.

Recent Developments

- In October 2024, AMD announced that it would begin mass-producing its MI325X AI chip, which is expected to be available in Q1 2025, to compete in the Nvidia-dominated AI processor market. AMD also announced the MI350 series, scheduled for release at the end of 2025, which will feature improved memory and architecture to support AI. (Source: https://www.cnbc.com)

- In September 2024, Qualcomm introduced its Snapdragon X Plus 8-core processor for AI-powered PCs, competing with Intel and AMD in that market. The chip, which Microsoft supports in its Copilot+ PC lineup, is designed to run AI workloads power-efficiently in PCs priced at approximately $700. (Source: https://www.cnbc.com)

Top Companies in the Advanced AI Processors Market & Their Offerings

Tier I: Market Leaders (40–50% cumulative share)

These companies dominate the advanced AI processors market through industry-leading hardware for AI training/inference, significant market penetration, strategic partnerships, and robust R&D driving cutting-edge innovation.

| Company | Contribution & Role |
| --- | --- |
| NVIDIA Corporation | The undisputed leader in AI processors, especially in data center training workloads; holds the largest share of the AI accelerator market with GPUs like A100, H100, and upcoming B100. |
| Advanced Micro Devices, Inc. (AMD) | Significant player growing fast with MI300 series GPUs targeting AI workloads with strategic wins across data centers. |
| Intel Corporation | Major contributor through a combination of CPUs used in AI inference and dedicated AI accelerators (e.g., Gaudi, Habana). |

Tier II: Established Players (25–35% cumulative share)

These companies have solidified their roles with focused AI chip development, enterprise/cloud partnerships, and growing deployments across inference, edge, and specialized applications.

| Company | Contribution & Role |
| --- | --- |
| Google (Alphabet Inc.) | Designs and deploys TPU (Tensor Processing Units) across its own cloud infrastructure; significant internal usage and growing influence via Google Cloud AI services. |
| Amazon Web Services (AWS) | Develops Trainium and Inferentia chips to power its AI/ML services; mostly used internally but growing in external cloud offerings. |
| Qualcomm Technologies Inc. | Provides AI chips for edge devices and smartphones; strong play in on-device AI, AI inference, and 5G AI integration. |
| Apple Inc. | Designs AI-focused silicon (Neural Engine) for mobile and laptop devices; while not sold externally, Apple silicon is among the most deployed edge AI processors. |
| Broadcom Inc. | Supplies custom AI ASICs for hyperscalers and networking, contributing to AI-driven data center infrastructure. |

Tier III: Emerging and Niche Players (10–15% cumulative share)

These players focus on custom architectures, edge AI, low-power processing, or regional deployments, gradually building revenue and niche share in the broader AI processor ecosystem.

| Company | Contribution & Role |
| --- | --- |
| Tenstorrent Inc. | AI startup led by former AMD execs, designing RISC-V-based AI chips focused on inference workloads. |
| Mythic AI | Develops analog compute-based edge AI processors targeting ultra-low power applications. |
| Graphcore Ltd. | UK-based company offering IPU (Intelligence Processing Unit); struggled with recent financial issues but known for innovation in AI compute. |
| SambaNova Systems | AI system provider offering full-stack AI hardware and software, targeting enterprise and government use cases. |
| Huawei Technologies Co., Ltd. | Develops Ascend series AI processors, mostly used in domestic Chinese deployments due to export restrictions. |

Expert Analysis on the Advanced AI Processors Market

The global advanced AI processors market is undergoing a paradigm-defining transformation, catalyzed by the exponential acceleration in AI model complexity, proliferating enterprise adoption of generative AI, and an irreversible pivot toward intelligent edge and data-centric architectures. As organizations recalibrate their digital strategies around AI-native infrastructure, the market is witnessing a structural expansion across compute layers, from hyperscale data centers to power-optimized edge deployments.

From a value creation perspective, the market is transitioning from general-purpose compute to highly specialized silicon architectures, characterized by domain-specific acceleration (e.g., GPUs, NPUs, TPUs, custom ASICs). This hardware-software co-design is becoming foundational to AI model efficiency, especially as models such as LLMs (Large Language Models) and multimodal systems increasingly challenge traditional compute scalability.

NVIDIA's asymmetric dominance, particularly in training workloads, continues to dictate industry direction, yet this concentration also underscores an emerging opportunity space, one ripe for disintermediation by challengers leveraging open standards (e.g., RISC-V), heterogeneous compute, or vertical integration strategies (e.g., AWS Trainium). Meanwhile, AMD and Intel are recalibrating portfolios toward AI-dedicated silicon, with traction in inference markets and ecosystem partnerships signaling an inflection in competitive dynamics.

The edge AI processor segment is evolving into a high-growth adjacency, buoyed by autonomous systems, AIoT, smart vision, and mobile AI workloads. Here, vendors such as Qualcomm, Apple, and a cohort of fabless innovators are unlocking latent demand for low-latency, energy-efficient AI inference at the periphery of the network. Further amplifying the market's upside is the emergence of sovereign AI strategies. As nations and hyperscalers seek greater control over AI compute pipelines, the demand for in-house or localized AI silicon is set to escalate, reinforcing both supply chain resilience and architectural diversification.

Segments Covered in the Report

By Processor Type

- Graphics Processing Units (GPUs)

- Application-Specific Integrated Circuits (ASICs)

- Field-Programmable Gate Arrays (FPGAs)

- Central Processing Units (CPUs)

- Neuromorphic Processors

- Quantum Processors

By Application

- Deep Learning

- Natural Language Processing

- Computer Vision

- Robotics

- Predictive Analytics

- Autonomous Vehicles

- Edge Computing

- Others (e.g., Healthcare Diagnostics, Financial Modeling)

By End-User Industry

- Information Technology & Telecommunications

- Healthcare

- Automotive

- Retail

- Financial Services

- Media & Entertainment

- Manufacturing

- Government & Defense

- Others

By Deployment Type

- Cloud-Based AI Processors

- Edge-Based AI Processors

- Hybrid Deployment

By Region

- North America

- Europe

- Asia-Pacific

- Latin America

- Middle East & Africa

For inquiries regarding discounts, bulk purchases, or customization requests, please contact us at sales@precedenceresearch.com
