What is the Vision-Language Models Market Size?

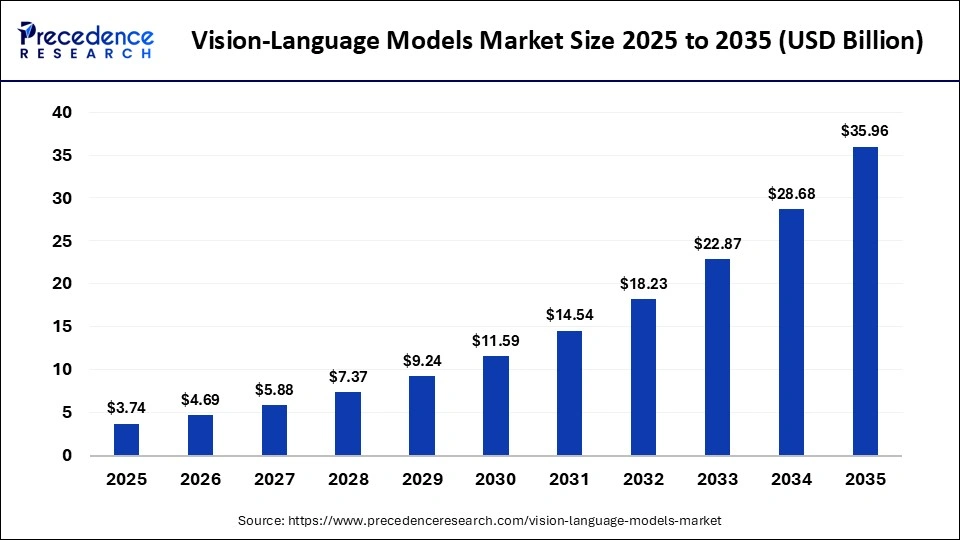

The global vision-language models market size was calculated at USD 3.74 billion in 2025 and is predicted to increase from USD 4.69 billion in 2026 to approximately USD 35.96 billion by 2035, expanding at a CAGR of 25.41% from 2026 to 2035. The market is witnessing robust growth, driven by the rapid adoption of generative AI, the growing need for enterprise automation, and increasing multimodal AI integration across various industries.
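The growth rate quoted above can be sanity-checked with the standard compound annual growth rate formula. The following is a minimal sketch, not the report's own methodology: the helper name `cagr` is hypothetical, and the sketch assumes that 2026 to 2035 counts as nine compounding periods.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Return the compound annual growth rate as a fraction (0.25 means 25%)."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Market sizes taken from the report, in USD billion.
size_2026 = 4.69
size_2035 = 35.96

# Assumption of this sketch: 2026 -> 2035 is nine compounding periods.
rate = cagr(size_2026, size_2035, periods=2035 - 2026)
print(f"Implied CAGR 2026-2035: {rate:.2%}")
```

Under that assumption, the implied rate lands within rounding distance of the 25.41% stated in the report.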

Market Highlights

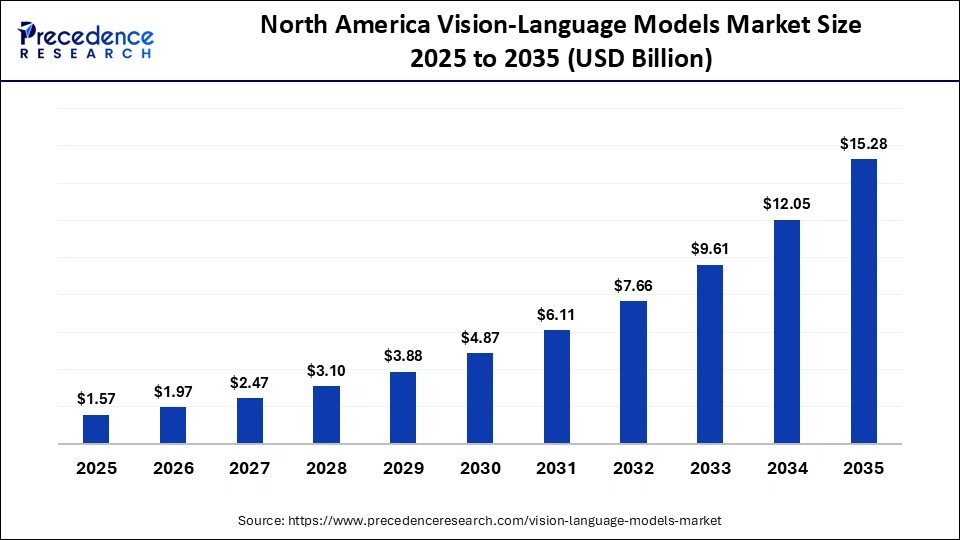

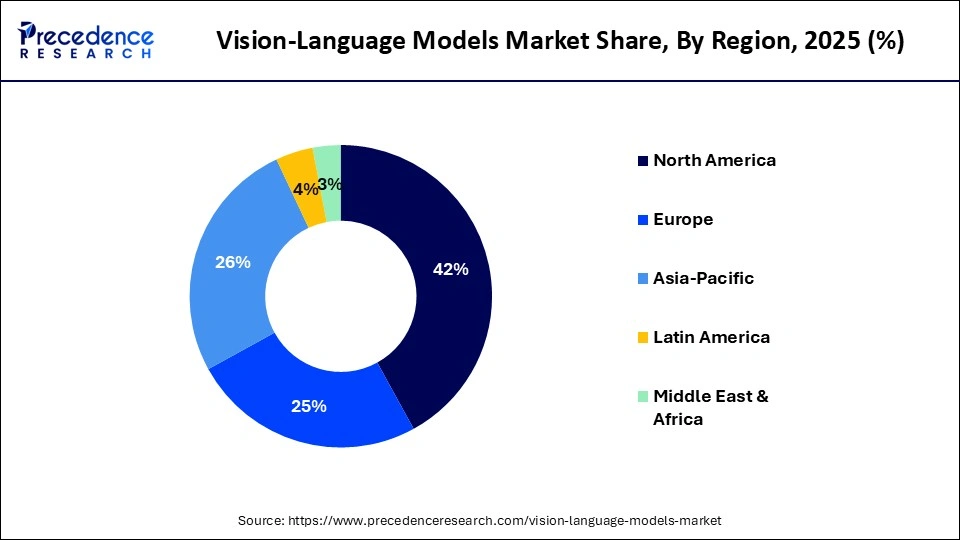

- North America dominated the vision-language models market, holding the largest share of 42% in 2025.

- Asia Pacific is expected to expand at the fastest CAGR between 2026 and 2035.

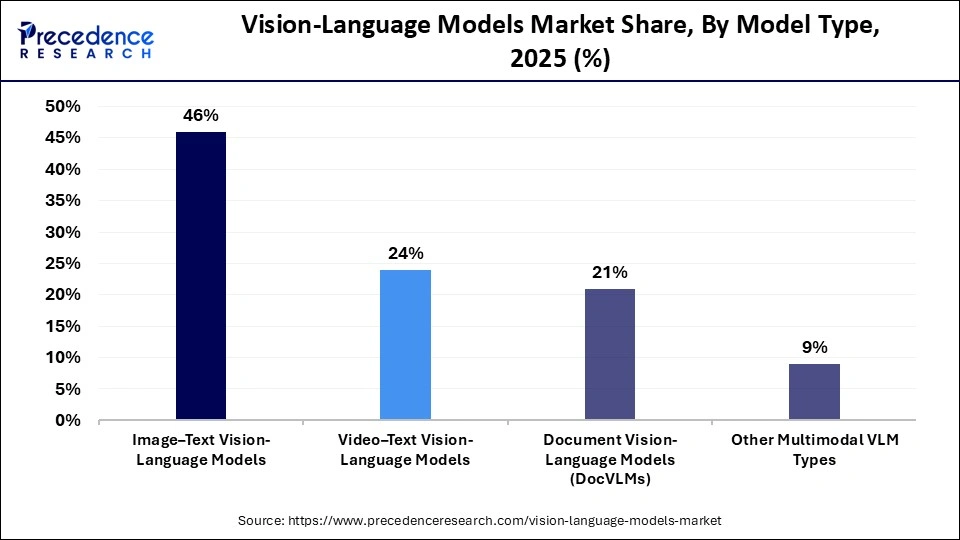

- By model type, the image-text vision-language models segment led the market, holding the largest share of approximately 46% in 2025.

- By model type, the video-text vision-language models segment is expected to grow at the fastest rate between 2026 and 2035.

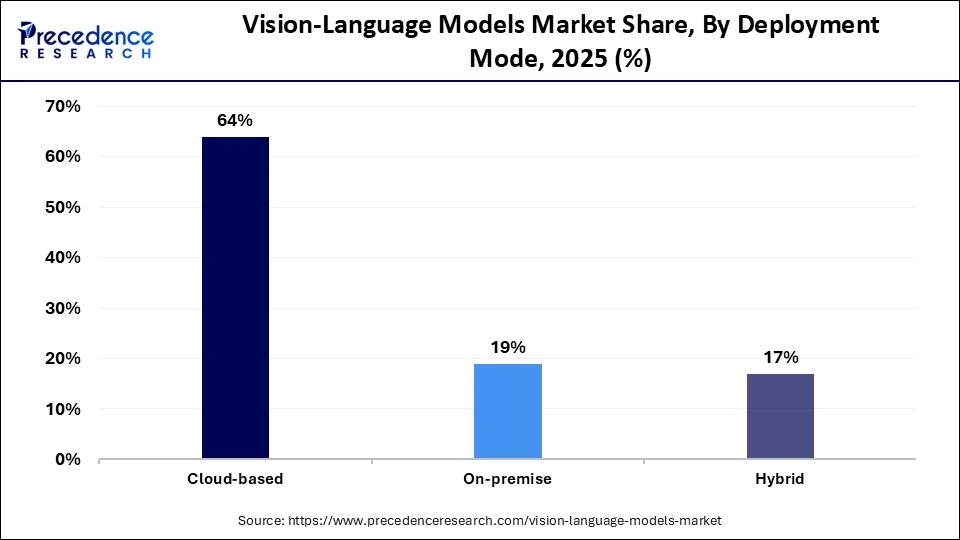

- By deployment mode, the cloud-based segment contributed the largest market share of nearly 64% in 2025.

- By deployment mode, the hybrid segment is expected to grow at a significant CAGR from 2026 to 2035.

- By industry vertical, the IT & Telecom segment held approximately 16% share of the market in 2025.

- By industry vertical, the retail & e-commerce segment is expected to grow at a significant CAGR from 2026 to 2035.

Market Overview

The global vision-language models market includes AI foundation models and multimodal model platforms capable of jointly processing and reasoning across visual inputs (images/videos) and natural language. These models enable applications such as image captioning, visual Q&A, document understanding, visual search, multimodal chatbots, content moderation, and vision-driven automation in enterprise workflows. The market spans model development platforms, training/inference infrastructure, APIs, and deployment services across cloud and edge environments.

How is AI Influencing the Vision-Language Models Market?

Advances in artificial intelligence are shaping the vision-language models market by enabling models to understand and generate meaningful insights from both visual and textual data. Deep learning architectures allow VLMs to perform complex tasks such as image/video captioning, visual question answering, content moderation, and multimodal search with high accuracy. AI is also enabling innovations like real-time video-text understanding, cross-modal retrieval, and enhanced scene comprehension, expanding the range of applications across healthcare, e-commerce, autonomous vehicles, robotics, and entertainment. Additionally, improvements in AI model training, data efficiency, and multimodal fusion techniques are accelerating adoption and driving investment in VLM technologies globally.

What are the Major Trends in the Market?

- Surging demand for multimodal AI across industries such as healthcare (medical imaging), e-commerce & retail, automotive (autonomous vehicles), robotics, and content moderation is anticipated to accelerate the growth of the market during the forecast period.

- The emergence of Augmented Reality (AR) and Virtual Reality (VR) is expected to contribute to the overall growth of the market. Vision-language models (VLMs) enhance AR and VR experiences by integrating real-world visuals with contextual information.

- The rising application of VLMs in healthcare diagnostics is expected to promote the market's growth during the forecast period. Vision-language models (VLMs) assist healthcare professionals in diagnosing conditions by accurately analyzing medical images in conjunction with patient data.

- The rapid pace of digitalization in emerging nations and rising government AI initiatives are anticipated to bolster the market's expansion in the coming years.

- The rising focus of businesses in several industries on enhancing the decision-making process is expected to drive the market's expansion during the forecast period. Businesses widely use VLMs to turn vast visual data into actionable insights for improving operational efficiency.

Market Scope

| Report Coverage | Details |
| --- | --- |
| Market Size in 2025 | USD 3.74 Billion |
| Market Size in 2026 | USD 4.69 Billion |
| Market Size by 2035 | USD 35.96 Billion |
| Market Growth Rate from 2026 to 2035 | CAGR of 25.41% |
| Dominating Region | North America |
| Fastest Growing Region | Asia Pacific |
| Base Year | 2025 |
| Forecast Period | 2026 to 2035 |
| Segments Covered | Model Type, Deployment Mode, Industry Vertical, and Region |
| Regions Covered | North America, Europe, Asia-Pacific, Latin America, and Middle East & Africa |

Segment Insights

Model Type Insights

What made image-text vision-language models the dominant segment in the vision-language models market?

The image-text vision-language models segment dominated the market by holding the largest share of 46% in 2025. The segment's dominance is primarily driven by its ability to bridge the gap between visual perception (pixels) and linguistic understanding (text). These models are specifically designed to analyze complex scenes, interpret charts and documents, and derive relationships between various elements. Other contributing factors include the wide availability of paired image-text datasets, advancements in deep learning architectures like transformers, and strong adoption in AI-powered platforms for improving accessibility, automation, and user engagement.

The video-text vision-language models segment is expected to grow at the fastest CAGR between 2026 and 2035 because these models enable more advanced understanding of, and interaction with, dynamic visual content. With the rapid growth of the AI industry, video-text models are increasingly favored for real-time analytics in applications such as surveillance, entertainment, and autonomous vehicles. In addition, the rapid growth of video content, especially on social media and surveillance platforms, is creating substantial demand for automated analysis tools, contributing to segmental growth.

Deployment Mode Insights

Why did the cloud-based segment dominate the vision-language models market?

The cloud-based segment dominated the market with the highest share of 64% in 2025 because it offers organizations scalable, cost-effective, and flexible access to powerful AI models without the need for heavy upfront investment in hardware. Cloud deployment enables rapid experimentation, seamless updates, and integration with existing AI and data infrastructure, making it easier for enterprises of all sizes to leverage vision-language capabilities. Additionally, the cloud facilitates collaborative development, access to large datasets, and the high-performance computing resources required for training and running complex models, driving its widespread adoption across industries.

The hybrid segment is expected to expand at the fastest rate in the vision-language models market. This is because it combines the benefits of both on-premises and cloud deployments, offering flexibility, scalability, and control. Hybrid models offer superior performance and higher adaptability for complex, real-world, and edge-computing applications. This approach is particularly attractive for industries like healthcare, finance, and government, where data security is critical but advanced AI capabilities are needed. Additionally, hybrid deployment supports seamless integration with existing IT infrastructure and enables cost optimization, which is driving its increasing adoption.

Industry Vertical Insights

Why did the IT & telecom segment lead the vision-language models market?

The IT & telecom segment led the market, holding a market share of approximately 16% in 2025. This is because IT & telecom enterprises are significant end users of vision-language models (VLMs), relying on them to automate network monitoring, analyze complex and unstructured data, enhance security, improve fraud detection, and deliver superior customer service. Telecom companies are widely leveraging AI-powered chatbots and virtual assistant tools to automate customer support and enhance network reliability. Moreover, the growing shift toward edge-based AI for real-time visual analysis and on-device processing without depending on the cloud is sustaining the growth of the segment.

The retail & e-commerce segment is expected to grow at the fastest CAGR over the forecast period, as these enterprises are extensively utilizing vision-language models (VLMs) to transform how digital platforms understand product content and interact with customers. VLMs enhance product discovery and personalized recommendations, improve customer support, and boost overall automated shopping experiences. Through advanced visual search, VLMs allow customers to upload photos of items to find similar products or receive recommendations. The ability to understand and generate multimodal content enables retailers and e-commerce platforms to deliver more engaging and personalized shopping experiences, driving higher conversion rates and boosting customer satisfaction.

Regional Insights

How Big is the North America Vision-Language Models Market Size?

The North America vision-language models market size is estimated at USD 1.57 billion in 2025 and is projected to reach approximately USD 15.28 billion by 2035, with a 25.55% CAGR from 2026 to 2035.

What made North America the dominant region in the vision-language models market?

North America dominated the vision-language models market by holding the largest share of 42% in 2025. The region's dominance in the market is attributed to its established technological infrastructure and early adoption of AI technology. This leadership position is also attributed to the high demand for multimodal AI, increasing focus on complex reasoning over simple recognition, increasing popularity of Augmented Reality (AR) and Virtual Reality (VR), and supportive government AI initiatives. Moreover, the increasing adoption of vision-language models (VLMs) across various industries, such as autonomous vehicles, healthcare (diagnosis and telemedicine), retail (personalized experiences), robotics, security, and content moderation, is anticipated to contribute to the overall growth of the market in the region.

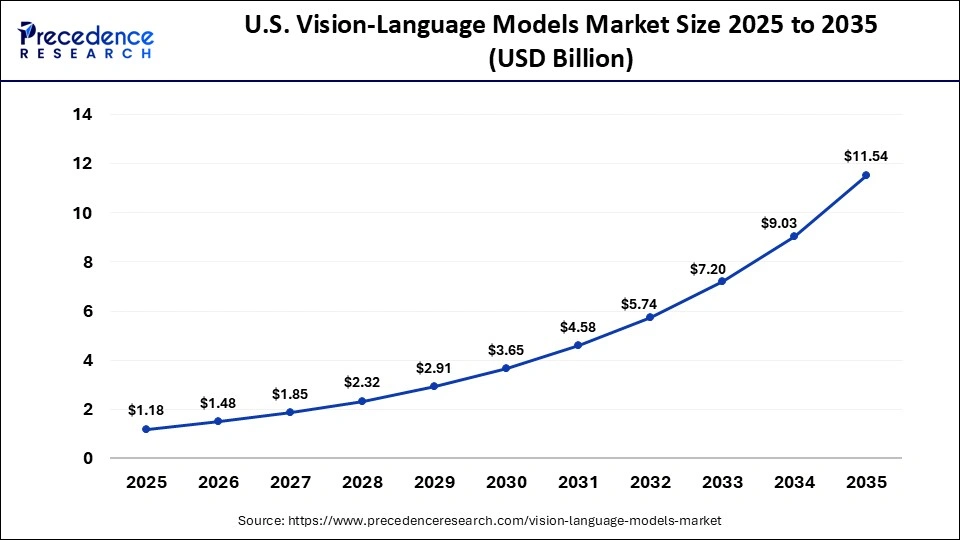

What is the Size of the U.S. Vision-Language Models Market?

The U.S. vision-language models market size is calculated at USD 1.18 billion in 2025 and is expected to reach nearly USD 11.54 billion in 2035, accelerating at a strong CAGR of 25.61% between 2026 and 2035.
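The regional figures can be cross-checked against the global ones with simple arithmetic. The sketch below is illustrative only: the variable names are hypothetical, and it assumes the regional growth rates compound over the ten years from the 2025 base value to 2035, which is an interpretation rather than something the report states.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Return the compound annual growth rate as a fraction."""
    return (end_value / start_value) ** (1 / periods) - 1


# Figures from the report, in USD billion.
global_2025 = 3.74
north_america_2025, north_america_2035 = 1.57, 15.28
us_2025, us_2035 = 1.18, 11.54

# Share of the global market held by North America in 2025.
na_share = north_america_2025 / global_2025

# Share of the North American market held by the U.S. in 2025.
us_share_of_na = us_2025 / north_america_2025

# Assumption: ten compounding periods from the 2025 base to 2035.
na_rate = cagr(north_america_2025, north_america_2035, periods=10)
us_rate = cagr(us_2025, us_2035, periods=10)

print(f"North America share of global (2025): {na_share:.1%}")
print(f"U.S. share of North America (2025):   {us_share_of_na:.1%}")
print(f"Implied North America CAGR:           {na_rate:.2%}")
print(f"Implied U.S. CAGR:                    {us_rate:.2%}")
```

Under these assumptions, the North American share and both implied growth rates come out close to the 42%, 25.55%, and 25.61% quoted in the report.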

U.S. Vision-Language Models Market Analysis

The U.S. leads the North American vision-language models market due to its strong ecosystem of major technology companies, a growing number of AI research institutions and startups, and rapid adoption of AI technologies. The country hosts leading tech giants like Apple, Meta, Oracle, Microsoft, Amazon, NVIDIA, SAP AI, and Google, which drive innovation and commercialization of vision-language models. Supportive government policies and frameworks have further accelerated the deployment of VLMs across diverse applications, including medical image analysis, telemedicine, personalized marketing, visual question answering, image/video description, and enhanced intrusion detection.

In June 2025, NVIDIA announced Llama Nemotron Nano VL, a new multimodal vision-language model (VLM) that leads the OCRBench v2 benchmark, highlighting its accuracy in document analysis across enterprise use cases. It is specifically designed for intelligent document processing. The model reads and extracts data from complex layouts such as invoices, tables, graphs, and dashboards. It combines visual and textual reasoning capabilities, enabling it to parse diverse file types using just a single GPU.

What makes Asia Pacific the fastest-growing area in the market?

Asia Pacific is the fastest-growing region in the market. The region's growth is primarily driven by the vibrant startup ecosystem, rapid pace of digital transformation, growing demand for systems capable of understanding and reasoning across both visual and textual data, increasing investment in AI models from both public and private sectors, and a favorable regulatory environment. Countries such as China, Japan, India, and South Korea are widely adopting vision-language models (VLMs) across various fields like healthcare, education, robotics, retail & e-commerce, and automotive, which is expected to propel the region's growth during the forecast period.

China Vision-Language Models Market Analysis

The market in China is growing, driven by a robust technology ecosystem and the rapid scaling of AI-driven businesses. Key factors include accelerating digitalization, rising demand for multimodal AI, advancements in model compression, increased investment in technological innovation, and government-led AI initiatives. Chinese AI companies and research institutions are focusing on developing models capable of complex, multi-granular, and long-video understanding. The expanding adoption of VLMs across applications such as autonomous driving (advanced driver-assistance systems), smart surveillance, medical imaging analysis, robotics, personalized marketing, and gaming is further fueling the market's growth during the forecast period.

- In December 2025, Chinese AI startup Zhipu AI announced the release of its GLM-4.6V series, a new generation of open-source vision-language models (VLMs) optimized for multimodal reasoning, frontend automation, and high-efficiency deployment. The release includes two models in large and small sizes.

Who are the Major Players in the Global Vision-language Models Market?

The major players in the vision-language models market include NVIDIA, OpenAI, Google DeepMind, Meta, Microsoft, Amazon Web Services (AWS), ByteDance AI Lab, Salesforce Research, SAP AI, Oracle, IBM Research, Apple, Alibaba DAMO Academy, Baidu, Tencent AI Lab, Huawei Cloud AI, Samsung Research, and Adobe Research.

Recent Developments

- In July 2025, Apple researchers, in collaboration with Aalto University in Finland, introduced ILuvUI, a vision-language model trained to understand mobile app interfaces from screenshots and from natural language conversations.

- In July 2025, Apple announced its own vision-language model (VLM), FastVLM, which maintains high accuracy while running efficiently, making it suitable for on-device, real-time visual query processing.

- In May 2024, researchers at Meta presented "An Introduction to Vision-Language Modeling" to help people better understand the mechanics behind mapping vision to language. The paper covers how VLMs work, how to train them, and approaches to evaluating them. The vision-language modeling approach is more effective than traditional methods such as CNN-based image captioning, RNN and LSTM networks, encoder-decoder models, and object detection techniques.

Segments Covered in the Report

By Model Type

- Image-Text Vision-Language Models

  - Image captioning models

  - Visual question answering

- Video-Text Vision-Language Models

  - Video understanding

  - Video summarization

- Document Vision-Language Models (DocVLMs)

  - OCR + reasoning

  - Layout understanding

- Other Multimodal VLM Types

By Deployment Mode

- Cloud-based

- On-premise

- Hybrid

By Industry Vertical

- IT & Telecom

- BFSI

- Retail & E-commerce

- Healthcare & Life Sciences

- Media & Entertainment

- Manufacturing

- Automotive & Mobility

- Government & Defense

- Other Industries

By Region

- North America

- Europe

- Asia-Pacific

- Latin America

- Middle East & Africa

For inquiries regarding discounts, bulk purchases, or customization requests, please contact us at sales@precedenceresearch.com
