What is the High-Bandwidth Memory Market Size?

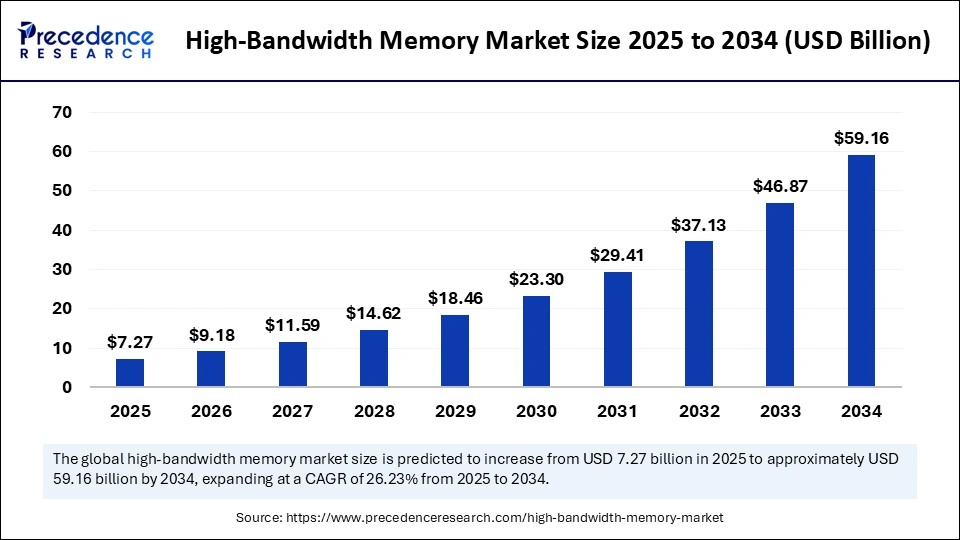

The global high-bandwidth memory market size accounted for USD 7.27 billion in 2025 and is predicted to increase from USD 9.18 billion in 2026 to approximately USD 59.16 billion by 2034, expanding at a CAGR of 26.23% from 2025 to 2034. The high-bandwidth memory market has experienced significant growth due to increasing demand for advanced computing technologies. This type of memory is essential for applications that require rapid data processing and enhanced performance, making it a critical component in various sectors such as artificial intelligence and graphics processing. As innovation continues to drive advancements, the market is expected to evolve further in the coming years.

Market Highlights

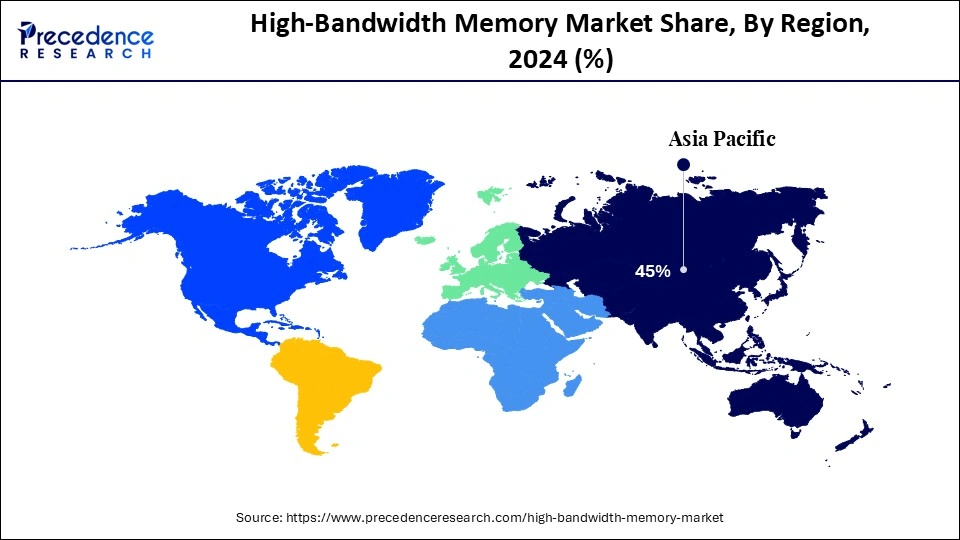

- Asia-Pacific dominated the market, holding the largest market share of 45% in 2024.

- North America is expected to expand at the fastest CAGR in the high-bandwidth memory market between 2025 and 2034.

- By application, the graphics processing units segment held the largest market share, accounting for 40% in 2024.

- By application, the artificial intelligence & machine learning segment is expected to grow at a significant CAGR between 2025 and 2034.

- By memory type, the HBM2 segment held the largest market share, at 50%, in 2024.

- By memory type, the HBM3 segment is expected to grow at a remarkable CAGR between 2025 and 2034.

- By end-user type, the semiconductors segment held the largest market share of 60% in 2024.

- By end-user type, the automotive segment is set to grow at a remarkable CAGR between 2025 and 2034.

Market Size and Forecast

- Market Size in 2025: USD 7.27 Billion

- Market Size in 2026: USD 9.18 Billion

- Forecasted Market Size by 2034: USD 59.16 Billion

- CAGR (2025-2034): 26.23%

- Largest Market in 2024: Asia Pacific

- Fastest Growing Market: North America
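The headline figures can be cross-checked with the standard CAGR formula, CAGR = (end / start)^(1/n) - 1. The sketch below is a minimal illustration, assuming the 2025 to 2034 window compounds over n = 9 annual periods; `cagr` and `project` are hypothetical helper names, not part of any published model. With the report's values it reproduces the stated 26.23% CAGR and the USD 9.18 billion estimate for 2026 to two decimal places.

```python
# Minimal CAGR cross-check for the headline forecast (illustrative sketch).
# Assumption: the 2025-2034 CAGR compounds over 9 annual periods.

def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate as a fraction (0.2623 means 26.23%)."""
    return (end_value / start_value) ** (1.0 / periods) - 1.0


def project(start_value: float, rate: float, periods: int) -> float:
    """Value after compounding start_value at `rate` for `periods` years."""
    return start_value * (1.0 + rate) ** periods


if __name__ == "__main__":
    size_2025 = 7.27   # USD billion, from the report
    size_2034 = 59.16  # USD billion, from the report
    rate = cagr(size_2025, size_2034, periods=2034 - 2025)
    print(f"Implied CAGR 2025-2034: {rate:.2%}")
    print(f"Implied 2026 size: USD {project(size_2025, rate, 1):.2f} billion")
```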

What is the High-Bandwidth Memory Market?

The high-bandwidth memory market encompasses advanced memory technologies that offer higher data transfer rates and greater bandwidth compared to traditional DRAM. HBM achieves this by vertically stacking memory chips and using through-silicon vias (TSVs) to interconnect them, resulting in reduced power consumption and improved performance. HBM is primarily utilized in applications requiring intensive data processing, such as high-performance computing (HPC), artificial intelligence (AI), machine learning (ML), and graphics processing units (GPUs).

The high-bandwidth memory market is evolving rapidly, driven by increasing demand for high-speed data processing in advanced computing applications. The market posted strong revenues in 2024 and is expected to continue its upward trajectory in 2025, underscoring its strong position in memory technology. Notably, the leading share held by the graphics processing units segment highlights the critical role of HBM in gaming and graphics-intensive applications. Looking ahead, artificial intelligence and machine learning are predicted to drive notable growth in high-bandwidth memory demand. As innovation continues, the emergence of HBM3 technology is set to further enhance performance and efficiency across sectors.

Key Technological Shifts in the High-Bandwidth Memory Market

The principal technological innovation in the high-bandwidth memory market centers on the maturing convergence of 3D-stacked DRAM, advanced interposer/bridge technologies, and heterogeneous integration, which places memory as close to compute as electrical constraints allow. Die-to-die connections with thousands of vertical vias and wide I/O fabrics dramatically increase effective bandwidth while lowering energy per bit. Simultaneously, advances in silicon interposers, organic substrates, and embedded bridge materials are reducing signal loss and improving thermal conduction. Co-design methodologies, in which memory, logic, and packaging are architected in tandem, are supplanting historical siloed development models. Advanced cooling solutions, such as microfluidic cold plates and vapor chambers, are becoming essential for sustaining higher stack densities. Together, these shifts transform high-bandwidth memory from an exotic performance option into an integrated system enabler for future compute platforms.
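Because interface power scales with the rate of data movement, energy per bit is the lever these packaging shifts act on: power equals the bits moved per second multiplied by the energy each bit costs. The minimal sketch below illustrates that relationship; the function name is a hypothetical helper and the pJ/bit inputs are placeholders, not measured HBM or vendor figures. At a fixed 2 TB/s, halving energy per bit from 7 to 3.5 pJ halves interface power from 112 W to 56 W; equivalently, the same power budget could sustain twice the bandwidth.

```python
# Illustrative sketch: memory interface power = bit rate x energy per bit.
# The pJ/bit figures below are HYPOTHETICAL placeholders, not vendor data.

def interface_power_watts(bandwidth_tb_per_s: float, energy_pj_per_bit: float) -> float:
    """Power drawn moving data at the given bandwidth (decimal TB/s)."""
    bits_per_second = bandwidth_tb_per_s * 1e12 * 8   # bytes/s -> bits/s
    joules_per_bit = energy_pj_per_bit * 1e-12        # pJ -> J
    return bits_per_second * joules_per_bit


if __name__ == "__main__":
    # The same 2 TB/s of traffic at two hypothetical efficiency levels.
    for pj in (7.0, 3.5):
        print(f"{pj} pJ/bit at 2 TB/s -> {interface_power_watts(2.0, pj):.0f} W")
```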

High-Bandwidth Memory Market Outlook

- Industry Growth Overview: Industry expansion is driven by the increasing deployment of AI accelerators in cloud and edge data centers, the adoption of high-fidelity graphics in gaming and visualization, and the growing demand for line-rate packet processing in networking. Vendors are racing to deliver higher stack counts, finer nodes, and tighter interposer integration to maximize performance per watt. Capital investment in advanced packaging and test capability is a gating factor that concentrates production among a few vertically capable players. At the same time, system OEMs are co-designing modules with memory manufacturers to achieve optimal thermal and electrical performance. This co-evolution of memory and compute is creating a virtuous cycle of demand and innovation. Consequently, the industry is characterised by high technical barriers, premium pricing, and a relentless cadence of generational upgrades.

- Sustainability Trends: Sustainability discourse focuses on improving energy efficiency per bit transferred, reducing scrap/yield losses in complex 3D assembly, and optimizing thermal management to lower data center cooling burdens. Suppliers are exploring greener packaging materials, recycled substrate options, and process steps that reduce chemical and water use. Lifecycle assessments are becoming part of procurement dialogues in hyperscalers as they seek to lower their Scope 3 emissions. Energy-aware memory designs that trade marginal bandwidth for substantial power savings are gaining attention. As adoption expands, manufacturers will need to strike a balance between performance aspirations and environmental stewardship.

- Major Investors: Investment flows originate from strategic corporate R&D budgets within semiconductor incumbents, infrastructure-oriented private equity for advanced packaging facilities, and venture capital backing ecosystem enablers such as interposer designers and thermal-management innovators. Hyperscale and system OEMs also make minority equity bets to secure preferential access to roadmap capacity. Public funding in semiconductor sovereignty initiatives occasionally underwrites capacity expansions for critical nodes. Overall, investor interest reflects the long-term strategic value of high-bandwidth memory in enabling future AI and HPC workloads.

- Startup Economy: A modest but catalytic startup ecosystem is emerging around interposer technologies, advanced cooling solutions, test automation, and AI-driven yield enhancement for stacked memories. These nimble firms often partner with larger foundries and OSATs (outsourced assembly/test houses) to pilot novel approaches before scale adoption. While the capital intensity is high, startups that solve discrete packaging or thermal problems can quickly become acquisition targets. Consequently, the startup cohort functions as a rapid-innovation layer that complements the slower cadence of major memory manufacturers.

Key Trends in the High-Bandwidth Memory Market

- Generational progression to higher stack counts and finer process nodes.

- Closer co-design between memory vendors and AI accelerator architects.

- Growth of heterogeneous integration (chiplet + HBM stacks) for modularity.

- Emphasis on power efficiency and thermal management at the module level.

- Vertical consolidation and strategic partnerships to secure packaging capacity.

Market Scope

| Report Coverage | Details |
| --- | --- |
| Market Size in 2025 | USD 7.27 Billion |
| Market Size in 2026 | USD 9.18 Billion |
| Market Size by 2034 | USD 59.16 Billion |
| Market Growth Rate from 2025 to 2034 | CAGR of 26.23% |
| Dominating Region | Asia Pacific |
| Fastest Growing Region | North America |
| Base Year | 2025 |
| Forecast Period | 2025 to 2034 |
| Segments Covered | Application, Memory Type, End-User Industry, and Region |
| Regions Covered | North America, Europe, Asia-Pacific, Latin America, and Middle East & Africa |

Market Dynamics

Drivers

Bandwidth as the Currency of Computers and Communication

A decisive driver in the high-bandwidth memory market is the axiom that memory bandwidth, not merely compute FLOPS, determines real-world AI and HPC throughput; thus, architectures starved for bandwidth cannot realize their full processor potential. High-bandwidth memory supplies the multi-terabyte/sec conduits that allow accelerators to feed vast models and datasets without crippling stalls. As model sizes and data rates balloon, the premium placed on high-bandwidth local memory escalates correspondingly. This drives OEMs and hyperscalers to prioritize HBM-enabled designs despite cost and supply constraints. The attendant performance uplift justifies investment in specialised packaging and system redesign. In short, bandwidth has become the critical currency of modern computation, and HBM is the primary mint.
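The bandwidth argument above is commonly formalized with the roofline model, in which attainable throughput is the lesser of peak compute and memory bandwidth multiplied by a kernel's arithmetic intensity (FLOPs per byte moved). The sketch assumes a hypothetical accelerator with 100 TFLOP/s of peak compute and 2 TB/s of memory bandwidth; these are illustrative numbers, not the specifications of any product, and the function names are hypothetical.

```python
# Minimal roofline-model sketch: a standard way to reason about
# bandwidth-bound vs compute-bound kernels. Hardware numbers are HYPOTHETICAL.

def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float,
                      intensity_flop_per_byte: float) -> float:
    """Attainable throughput = min(compute roof, bandwidth x arithmetic intensity)."""
    memory_roof = bandwidth_tb_s * intensity_flop_per_byte  # TB/s * FLOP/B = TFLOP/s
    return min(peak_tflops, memory_roof)


def ridge_point(peak_tflops: float, bandwidth_tb_s: float) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel becomes compute-bound."""
    return peak_tflops / bandwidth_tb_s


if __name__ == "__main__":
    peak, bw = 100.0, 2.0  # hypothetical accelerator: 100 TFLOP/s, 2 TB/s
    for ai in (10.0, 50.0, 200.0):
        print(f"intensity {ai:>5} FLOP/B -> {attainable_tflops(peak, bw, ai):.0f} TFLOP/s")
    print(f"ridge point: {ridge_point(peak, bw):.0f} FLOP/B")
```

In this hypothetical, a kernel performing 10 FLOPs per byte reaches only 20 TFLOP/s, a fifth of peak; doubling bandwidth to 4 TB/s would double that to 40 TFLOP/s without adding any compute, which is the sense in which bandwidth, rather than FLOPS, sets real-world throughput for low-intensity workloads.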

Restraint

Cost and Capacity: The Twin Impediments

A primary restraint arises from the high cost of HBM modules, driven by the complexity of 3D stacking, the expense of interposers, and stringent test flows, as well as from the limited global capacity for advanced packaging. These economic and industrial bottlenecks restrict diffusion into cost-sensitive segments despite compelling performance advantages. Yield sensitivity in multi-die assemblies increases scrap risk and elevates unit costs, deterring lower-volume OEMs. Supply concentration among a handful of suppliers introduces geopolitical and procurement risks. Additionally, thermal dissipation challenges at scale necessitate investment in novel cooling methods, further increasing system costs. Thus, while technically alluring, HBM adoption faces significant cost and capacity headwinds that slow growth in the space.
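The yield sensitivity of multi-die assemblies compounds with stack height. A simplified model, assuming independent defects, identical per-die yield, and an independent success probability for each die-to-die bond, multiplies these probabilities across the whole assembly. The `stack_yield` helper and the yields used below are hypothetical, chosen only to show the trend.

```python
# Simplified compound-yield model for a stacked memory assembly.
# Assumptions (illustrative only): defects are independent, every die has the
# same probability of being good, and each die-to-die bond succeeds independently.

def stack_yield(n_dies: int, die_yield: float, bond_yield: float) -> float:
    """Probability that all n_dies and all (n_dies - 1) bonds are good."""
    if n_dies < 1:
        raise ValueError("a stack needs at least one die")
    return die_yield ** n_dies * bond_yield ** (n_dies - 1)


if __name__ == "__main__":
    # Hypothetical per-die and per-bond yields, not measured figures.
    for n in (4, 8, 12, 16):
        print(f"{n:>2}-high stack: {stack_yield(n, 0.99, 0.995):.1%} good")
```

Even with 99% per-die and 99.5% per-bond yields in this toy model, the share of good stacks falls from roughly 95% at 4 dies to roughly 79% at 16 dies, which is one reason known-good-die testing before stacking matters.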

Opportunity

Democratizing High-Performance Memory

The brightest commercial opportunity lies in reducing the cost curve through process yield improvements, modular high-bandwidth memory architectures, and ecosystem scaling, thereby enabling mid-tier accelerators and edge devices to benefit from elevated bandwidth. Innovations in organic interposers, stacked TSV yield enhancement, and standardized chiplet interfaces can lower entry barriers. Offering lower-cost, lower-stack HBM variants for broad classes of AI inference and graphics workloads can significantly expand the addressable market. Services such as thermal retrofit kits, module rework, and certified test labs also represent adjacent revenue pools. Partnerships that embed HBM into platform roadmaps will capture disproportionate value. In sum, lowering complexity and cost while preserving meaningful bandwidth is the market's commercial nirvana.

Segment Insights

Application Insights

Why are Graphics Processing Units (GPUs) Dominating the High-Bandwidth Memory Market?

Graphics Processing Units (GPUs) are dominating the high-bandwidth memory market, holding a 40% share. The symbiotic relationship between GPUs and high-bandwidth memory has redefined computational efficiency, enabling faster rendering and enhanced throughput in gaming, 3D design, and high-performance computing. The insatiable appetite for immersive visual experiences, coupled with the rise of ray tracing and real-time simulation, has cemented HBM's indispensability in GPU architectures. Semiconductor giants continue to embed high-bandwidth memory modules to meet escalating memory bandwidth requirements that conventional GDDR systems cannot support. The convergence of GPU acceleration with AI and data analytics further amplifies the need for high-speed, low-latency memory systems. This dynamic synergy ensures that high-bandwidth memory remains the heartbeat of modern GPU innovation.

Beyond graphics rendering, the evolution of GPUs toward heterogeneous computing models continues to expand the role of high-bandwidth memory. The adoption of multi-die packaging and 3D stacking has enabled GPUs to achieve unprecedented levels of memory proximity and data throughput. The growing influence of GPU-driven data centers, particularly for cloud gaming and visualization workloads, has further entrenched high-bandwidth memory as a mission-critical component. Manufacturers are focusing on power efficiency and scalability, ensuring that GPUs equipped with high-bandwidth memory deliver superior performance per watt. This alignment of speed, density, and energy consciousness makes GPUs the undisputed stronghold of the HBM landscape.

The artificial intelligence & machine learning segment is the fastest-growing application in the high-bandwidth memory market, with demand expanding at an exponential pace. As models grow in complexity, the limitations of traditional DRAM architecture become evident, creating a natural pivot toward high-bandwidth, low-latency memory solutions. High-bandwidth memory enables faster model training, lower energy consumption, and seamless data movement across neural network layers, capabilities critical to modern AI infrastructure. The technology's parallel data access mechanism allows GPUs and AI accelerators to handle vast datasets with remarkable efficiency. This has made high-bandwidth memory a cornerstone of AI chip design, from hyperscale deployments to autonomous systems.

The escalating investment in generative AI, edge inference, and deep learning frameworks is further intensifying the adoption of high-bandwidth memory. Chipmakers are integrating advanced memory controllers to optimize throughput while minimizing bottlenecks in AI workloads. Companies focusing on AI accelerators such as custom tensor cores and neuromorphic processors view high-bandwidth memory as essential to achieving computational supremacy. The cascading effect of AI adoption across industries ensures that HBM will continue to be a catalyst for innovation, powering the next generation of intelligent computing systems.

Memory Type Insights

Why Is HBM2 Dominating the High-Bandwidth Memory Market?

HBM2 dominates the high-bandwidth memory market, holding a 50% share, due to its balance of speed, capacity, and cost efficiency. Building on the architecture introduced with first-generation HBM, in which vertically stacked DRAM dies are connected via through-silicon vias (TSVs), HBM2 substantially raised per-stack bandwidth and capacity while keeping power consumption low. This made HBM2 the default choice for high-performance applications, from GPUs and FPGAs to AI training systems. Its architectural maturity and ecosystem support have positioned it as the preferred standard among semiconductor manufacturers. Moreover, HBM2's compatibility with diverse chip platforms ensures its continued relevance across computing paradigms.

HBM2's sustained dominance also stems from its proven performance-to-cost ratio and widespread commercial validation. When several stacks are placed alongside a single processor, HBM2-class memory delivers aggregate throughput above one terabyte per second, enabling processors to tackle workloads that were previously deemed intractable. Furthermore, continual enhancements in manufacturing yield and integration techniques have reduced overall production costs, driving broader accessibility. While HBM3 represents the future of ultra-high-speed memory, HBM2 continues to underpin the bulk of today's performance computing hardware, acting as a bridge between established systems and next-generation architectures.

HBM3 is the fastest-growing high-bandwidth memory type, thanks to its unmatched data transfer speeds, scalability, and energy efficiency. With bandwidths exceeding 800 GB/s per stack, it marks a quantum leap in computational throughput, making it ideal for AI, HPC, and exascale computing environments. Its enhanced signaling architecture and thermal optimization enable superior performance even under the most data-intensive conditions. The proliferation of AI supercomputers and advanced data analytics platforms has accelerated its adoption among chipmakers and hyperscalers alike.
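The per-stack figure quoted above follows from interface width multiplied by per-pin data rate. The sketch below applies that arithmetic to a 1024-bit stack interface, assuming commonly cited per-pin rates of 2.0 Gb/s (HBM2), 3.2 Gb/s (HBM2E), and 6.4 Gb/s (HBM3); treat these as illustrative inputs rather than the rating of any particular part, and the helper names as hypothetical. At 6.4 Gb/s, a 1024-bit stack peaks at 819.2 GB/s, consistent with the "exceeding 800 GB/s per stack" figure, while HBM2 systems reach terabyte-per-second totals only by placing several stacks beside one processor.

```python
# Peak bandwidth of an HBM stack = interface width x per-pin data rate.
# Pin rates below are commonly cited per-generation figures, used here only
# as illustrative inputs; shipping parts may run faster or slower.

HBM_GENERATIONS = {
    # name: (interface width in bits, per-pin data rate in Gb/s)
    "HBM2": (1024, 2.0),
    "HBM2E": (1024, 3.2),
    "HBM3": (1024, 6.4),
}


def stack_bandwidth_gb_s(width_bits: int, pin_rate_gbps: float) -> float:
    """Peak per-stack bandwidth in GB/s (decimal gigabytes)."""
    return width_bits * pin_rate_gbps / 8  # bits -> bytes


def package_bandwidth_tb_s(n_stacks: int, width_bits: int, pin_rate_gbps: float) -> float:
    """Aggregate bandwidth when n_stacks identical stacks sit beside one processor."""
    return n_stacks * stack_bandwidth_gb_s(width_bits, pin_rate_gbps) / 1000


if __name__ == "__main__":
    for name, (width, rate) in HBM_GENERATIONS.items():
        print(f"{name}: {stack_bandwidth_gb_s(width, rate):.1f} GB/s per stack")
    # Hypothetical 4-stack HBM2 package.
    print(f"4 x HBM2: {package_bandwidth_tb_s(4, 1024, 2.0):.3f} TB/s")
```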

As enterprises embrace digital transformation, HBM3's ability to minimize latency and maximize efficiency has made it the de facto choice for next-generation processors. Its integration with chiplet-based architectures and advanced 2.5D/3D packaging techniques underscores the industry's march toward compact, high-performance systems. While still at a premium cost point, the cascading adoption of HBM3 across AI, quantum simulation, and autonomous computing applications signals its growing dominance. In essence, HBM3 embodies the industry's pursuit of performance without compromise.

End-User Industry Insights

Why Are Semiconductors Dominating the High-Bandwidth Memory Market?

The semiconductor industry remains the bedrock of the high-bandwidth memory market, accounting for roughly 60% of total demand. As chip architectures evolve toward parallel processing and heterogeneous integration, high-bandwidth memory has become a linchpin for performance optimization. Semiconductor giants leverage high-bandwidth memory to power high-end processors, GPUs, and AI accelerators that define next-generation computing paradigms. Its ability to enable faster data communication between logic and memory has redefined efficiency benchmarks across the ecosystem. Moreover, the integration of high-bandwidth memory into SoCs and multi-chip modules exemplifies the shift toward unified, high-density compute environments.

Semiconductor manufacturers are not merely adopting high-bandwidth memory; they are co-engineering it into their product roadmaps. This deep integration ensures tighter coupling between logic and memory, reducing energy leakage and improving thermal stability. As transistor scaling approaches physical limits, the industry's reliance on advanced memory innovation grows even stronger. With continued advancements in packaging technologies, such as TSVs and interposers, the semiconductor sector's role as the primary driver of HBM growth remains unchallenged.

The automotive sector is rapidly emerging as the fastest-growing end user in the high-bandwidth memory market, driven by the electrification and digitalization of vehicles. Advanced driver-assistance systems (ADAS), in-vehicle AI, and real-time sensor fusion demand immense computational bandwidth, a requirement tailor-made for high-bandwidth memory architecture. The integration of HBM enables faster processing of radar, lidar, and camera inputs, ensuring split-second decision-making essential for autonomous mobility. As electric and connected vehicles evolve into computers on wheels, memory performance becomes a key differentiator.

Automotive OEMs and Tier 1 suppliers are increasingly collaborating with chipmakers to integrate HBM-enabled processors into vehicle systems. The technology's low latency and high efficiency ensure reliable performance even in extreme environmental conditions. Moreover, HBM's scalability aligns with the growing need for centralized vehicle architectures, where data from multiple subsystems must be processed in real time. The convergence of AI, connectivity, and sustainability in automotive innovation ensures that high-bandwidth memory will remain at the forefront of the future of mobility intelligence.

Regional Insights

Asia Pacific High-Bandwidth Memory Market Size and Growth 2025 to 2034

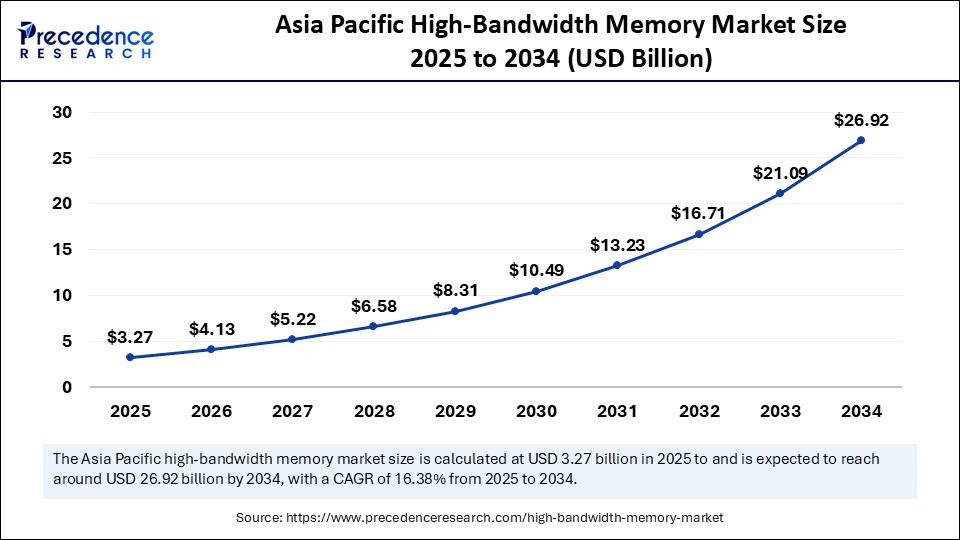

The Asia Pacific high-bandwidth memory market size is evaluated at USD 3.27 billion in 2025 and is projected to be worth around USD 26.92 billion by 2034, growing at a CAGR of 26.38% from 2025 to 2034.
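The regional figures can be checked against the global totals with the same arithmetic. The sketch below uses only values stated in this report and assumes nine annual compounding periods; `share` and `cagr` are hypothetical helper names. It gives an Asia Pacific share of about 45.0% in 2025 rising to about 45.5% in 2034, consistent with the region's slightly higher CAGR, and a recomputed regional CAGR within rounding of the stated 26.38%, a gap attributable to rounding in the published dollar values.

```python
# Cross-checking the regional figures against the global totals.
# USD billion values are copied from this report; 9 compounding periods assumed.

GLOBAL = {2025: 7.27, 2034: 59.16}
ASIA_PACIFIC = {2025: 3.27, 2034: 26.92}


def share(region: float, total: float) -> float:
    """Regional share of the global market as a fraction."""
    return region / total


def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate as a fraction."""
    return (end_value / start_value) ** (1.0 / periods) - 1.0


if __name__ == "__main__":
    for year in (2025, 2034):
        print(f"{year}: Asia Pacific share {share(ASIA_PACIFIC[year], GLOBAL[year]):.1%}")
    print(f"Asia Pacific CAGR: {cagr(ASIA_PACIFIC[2025], ASIA_PACIFIC[2034], 9):.2%}")
```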

How is Asia Pacific Dominating the High-Bandwidth Memory Market?

Asia Pacific is dominating the high-bandwidth memory market, driven by its deep semiconductor manufacturing base, agile OSAT ecosystem, and substantial capital commitments to advanced packaging capacity. The region's foundries and assembly houses are rapidly scaling wafer-level stacking and interposer production capabilities to meet global demand. Moreover, Asia Pacific's growing cadre of AI hardware start-ups and system integrators is broadening local adoption and accelerating co-design efforts. Cost advantages in fabrication and assembly, combined with a coordinated industrial policy, create fertile ground for rapid capability improvement. As a result, Asia Pacific is not merely a manufacturing hinterland but an increasingly strategic locus of packaging innovation and scale.

Country Analysis: China

China's expansive semiconductor ambitions and manufacturing scale make it a focal point for HBM capacity growth and vertical integration. Investments in OSATs, interposer fabs, and domestic DRAM capability are accelerating, aimed at reducing reliance on external supply chains. With strong domestic demand from cloud providers and AI hardware firms, China is positioning itself to become both a major consumer and producer of high-bandwidth memory solutions, contingent on continued technological catch-up and ecosystem partnerships.

How is North America Growing the Fastest in the High-Bandwidth Memory Market?

North America is the fastest-growing region in the high-bandwidth memory market, driven by strong demand and leadership in system integration, anchored by hyperscale cloud providers, AI accelerator designers, and leading GPU/IP houses. The region's strength lies in end-user demand for the highest-bandwidth memory solutions, which are essential for cutting-edge AI training and inference platforms, combined with deep design capabilities for co-optimized memory-compute stacks. North American firms frequently secure early access to roadmap modules through strategic partnerships, and the concentration of data center demand incentivizes domestic investments in advanced packaging.

Country Analysis: U.S.

The U.S. dominates the region, supported by an ecosystem of EDA tool vendors, high-speed signaling experts, and thermal specialists that accelerates design cycles. However, manufacturing and packaging capacity often reside elsewhere, prompting strategic supply agreements and capital deployment to shore up local capability. Consequently, North America leads in demand and system architecture while actively seeking to strengthen upstream fabrication and packaging resilience.

High-Bandwidth Memory Market Value Chain Analysis

- Raw Material Sources: Primary inputs include DRAM-grade silicon wafers, high-reliability bonding materials, copper and tungsten for TSVs, and specialized substrate/interposer materials (silicon or organic). Supply chains also require high-purity chemicals and precision equipment for die thinning and wafer bonding steps.

- Technology Used: Core technologies include TSV formation, wafer-level bonding, micro bumps, silicon interposers, and advanced substrate engineering for signal routing. Complementary tools encompass thermal interface materials and microfluidic cooling integration. Test and verification employ high-speed electrical characterization, built-in self-test protocols, and environmental stress screening.

- Investment by Investors: Investors channel capital into OSAT expansions, interposer fabs, and specialized packaging start-ups, often via strategic corporate partnerships and long-term offtake agreements with hyperscalers. Infrastructure and industrial funds occasionally co-finance high-capex facilities where sovereign or supply-security objectives align.

- AI Advancements: AI accelerates yield and design optimization through predictive models that forecast TSV failures, optimize thermal paths, and simulate signal integrity across complex interconnect topologies. Machine learning also speeds test-data analytics to triage marginal dies and improve overall assembly yields.

Company-wise Investments in the High-Bandwidth Memory Market

| Company | Approximate Investment Size | Nature / Purpose of Investment |
| --- | --- | --- |
| SK Hynix | US$74.5 billion | A broad semiconductor investment through 2028, ~80% of which is earmarked for AI/HBM-related areas (capacity expansion, R&D) |
| SK Hynix | US$14.6 billion | Investment in a new fab in South Korea, targeted at boosting HBM output and related memory production capacity |
| Micron Technology | US$200 billion | Nationwide U.S. initiative combining memory manufacturing and R&D, including advanced HBM packaging capabilities |
| Micron Technology | US$7 billion | Investment in Singapore for memory chip manufacturing, including a new HBM advanced packaging facility |

High-Bandwidth Memory Market Companies

- SK Hynix Inc.: SK Hynix pioneered High-Bandwidth Memory technology and remains the dominant supplier of HBM3, powering AI and HPC systems worldwide. Its HBM3E chips are widely integrated into NVIDIA and AMD GPUs, with ongoing innovation focused on next-gen HBM4 and 3D-stacked architectures to enhance data throughput and energy efficiency.

- Samsung Electronics Co., Ltd.: Samsung is a major HBM producer, supplying HBM2E (Flashbolt) and HBM3 (Icebolt) memory for high-performance GPUs and AI accelerators. With deep expertise in DRAM fabrication and advanced 3D packaging, Samsung continues investing in HBM technologies for exascale computing and AI workloads.

- Micron Technology, Inc.: Micron is developing next-generation HBM and GDDR7 memory technologies for AI, machine learning, and high-performance graphics systems. Its HBM3 Gen2 development roadmap aims to achieve higher bandwidth and power efficiency for data center and supercomputing applications.

- Advanced Micro Devices, Inc. (AMD): AMD has used HBM in Radeon™ GPUs and integrates it into its Instinct™ data center accelerators to reduce latency and improve energy efficiency. The company's collaboration with SK Hynix and Samsung has positioned it as a key innovator in GPU-memory integration for AI and HPC systems.

- NVIDIA Corporation: NVIDIA is the largest consumer of HBM, using it extensively across its A100, H100, and Blackwell GPUs. Through close partnerships with SK Hynix, Samsung, and Micron, NVIDIA drives innovation in HBM technology for AI training, inference, and advanced computing.

Recent Developments

- In October 2025, an investment bank raised its price target for Micron's stock to $220, up from $160, citing stronger-than-expected momentum in core DRAM, a key memory chip segment. Analysts noted that Micron is likely to see several consecutive quarters of double-digit price hikes, which they believe will significantly enhance the company's earnings performance. (Source: https://finance.yahoo.com)

- In October 2025, Micron announced sampling of its next-generation HBM4 memory, achieving a record 2.8 TB/s of bandwidth, surpassing JEDEC's earlier 2 TB/s target and outperforming competing HBM offerings from Samsung and SK Hynix. Micron also introduced a plan for a customizable “base logic die” (an HBM4E variant) that would allow customers such as Nvidia and AMD to tailor memory stacks, which is expected to improve margins and increase design-win stickiness. (Source: https://www.msn.com)
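Micron's 2.8 TB/s figure can be related to per-pin signaling with width-times-rate arithmetic. The sketch assumes the 2048-bit per-stack interface that JEDEC defines for HBM4; the per-pin rate it derives for Micron's sample is an inference from the stated bandwidth, not a number taken from the announcement, and the helper names are hypothetical. At 8 Gb/s per pin, 2048 bits yield about 2.05 TB/s, in line with the JEDEC 2 TB/s figure, while 2.8 TB/s implies roughly 10.9 Gb/s per pin.

```python
# Relating HBM4 stack bandwidth to per-pin data rate.
# Assumption: a 2048-bit interface per stack (the width JEDEC specifies for HBM4);
# the per-pin rate for Micron's sample is inferred here, not quoted from Micron.

HBM4_WIDTH_BITS = 2048


def stack_bandwidth_tb_s(pin_rate_gbps: float, width_bits: int = HBM4_WIDTH_BITS) -> float:
    """Peak per-stack bandwidth in TB/s (decimal) for a given per-pin rate."""
    return width_bits * pin_rate_gbps / 8 / 1000


def implied_pin_rate_gbps(bandwidth_tb_s: float, width_bits: int = HBM4_WIDTH_BITS) -> float:
    """Per-pin data rate (Gb/s) needed to reach a target stack bandwidth."""
    return bandwidth_tb_s * 1000 * 8 / width_bits


if __name__ == "__main__":
    print(f"8 Gb/s per pin -> {stack_bandwidth_tb_s(8.0):.3f} TB/s")
    print(f"2.8 TB/s needs ~{implied_pin_rate_gbps(2.8):.2f} Gb/s per pin")
```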

Segments Covered in the Report

By Application

- Graphics Processing Units (GPUs)

- High-Performance Computing (HPC)

- Artificial Intelligence (AI) & Machine Learning (ML)

- Networking & Data Centers

- Automotive (ADAS & Autonomous Driving)

- Consumer Electronics (Gaming Consoles, VR/AR)

- Others (e.g., Medical Imaging, Aerospace)

By Memory Type

- HBM1

- HBM2

- HBM2E

- HBM3

- HBM4

By End-User Industry

- Semiconductors

- Automotive

- Healthcare

- Telecommunications

- Consumer Electronics

- Others

By Region

- North America

- Europe

- Asia-Pacific

- Latin America

- Middle East & Africa

For inquiries regarding discounts, bulk purchases, or customization requests, please contact us at sales@precedenceresearch.com
