What is the AI Chips for Data Center and Cloud Market Size?

AI chips for data centers and cloud environments enable faster data processing and scalable AI deployment by bringing specialized semiconductors to enterprise and cloud infrastructure. The market is driven by rapid advances in parallel processing architectures and specialized AI accelerators such as NPUs, TPUs, and GPUs. Advanced semiconductor manufacturing processes and high-bandwidth memory are enabling faster, more power-efficient, and more scalable processors.

Market Highlights

- North America accounted for the largest market share in 2025.

- Asia Pacific is expected to grow at the fastest CAGR between 2026 and 2035.

- By chip type, the GPUs segment held a major market share in 2025.

- By chip type, the ASICs/custom AI accelerators segment is expected to grow at the fastest CAGR from 2026 to 2035.

- By processing type, the training chips segment led the market with the largest share in 2025.

- By processing type, the inference-optimized accelerators segment is poised to grow at a solid CAGR between 2026 and 2035.

- By data center type, the hyperscale data centers segment led the market with a major share in 2025.

- By data center type, the cloud service provider data centers segment is growing at the fastest CAGR between 2026 and 2035.

- By application, the AI model training (LLMs, deep learning) segment led the market with the largest share in 2025.

- By application, the generative AI workloads segment is expanding at the fastest CAGR between 2026 and 2035.

What is the AI Chips for Data Center and Cloud Market?

The AI chips for data center and cloud market encompasses specialized hardware components, known as AI accelerators, designed to efficiently handle the vast and complex computational demands of artificial intelligence and machine learning workloads. AI chips use parallel processing architectures, such as those in GPUs and ASICs, to perform large numbers of calculations simultaneously, which is essential for training and running large AI models. These chips are deployed in large-scale facilities, particularly hyperscale and cloud data centers, to power services such as natural language processing, computer vision, real-time analytics, and generative AI applications.

Key Technological Shift in the AI Chips for Data Center and Cloud Market

The AI chips for data center and cloud market is shifting toward specialized, high-performance silicon, including NVIDIA's Blackwell and Rubin GPU platforms and hyperscalers' custom ASICs, which pair massive memory capacity and bandwidth with faster training and inference. This shift beyond general-purpose processors is driven by demand for advanced AI and large models and is backed by heavy investment in new architectures, advanced packaging, and ecosystem support. Platforms that combine CPUs, GPUs, and NPUs aim to optimize performance across diverse AI workloads, while chiplet designs, advanced packaging, and high-speed interconnects are essential for scaling performance and efficiency.

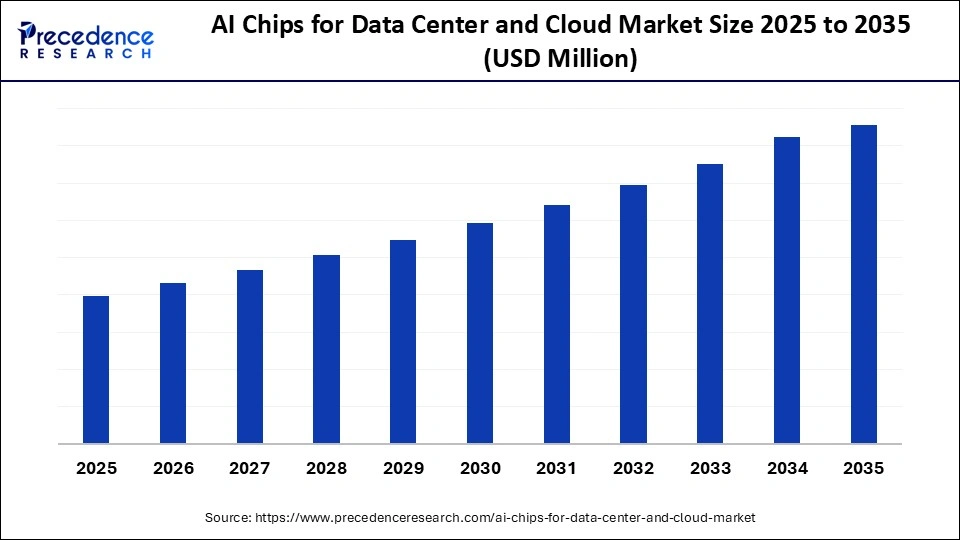

AI Chips for Data Center and Cloud Market Outlook

- Industry Growth Overview: The market is poised for rapid growth from 2026 to 2035, driven primarily by increasing demand for high-performance computing, AI-driven applications, and cloud services. The need for faster data processing, enhanced machine learning capabilities, and the proliferation of AI applications across industries further support growth.

- Global Expansion: The market is growing worldwide due to the increasing demand for faster data processing, AI-driven applications, and the expansion of cloud services across industries. Emerging regions, particularly in Asia-Pacific and Latin America, offer opportunities driven by expanding digital infrastructure, rising AI adoption, and the growing need for efficient computing solutions across sectors such as healthcare, manufacturing, and finance.

- Major Investors: Cloud giants, including Google, AWS, Microsoft, and Meta, are investing heavily in custom ASICs and CPUs to optimize their cloud infrastructure and reduce reliance on external suppliers. Investment firms such as SoftBank Vision Fund, Andreessen Horowitz, and Sequoia Capital are backing both third-party and custom silicon solutions.

- Startup Ecosystem: The startup ecosystem is active: Cerebras Systems builds wafer-scale processors for massive AI model training, Groq develops Language Processing Units (LPUs) for ultra-low-latency inference, and other startups are exploring in-memory computation for edge applications.

Market Scope

| Report Coverage | Details |
| --- | --- |
| Dominating Region | North America |
| Fastest Growing Region | Asia Pacific |
| Base Year | 2025 |
| Forecast Period | 2026 to 2035 |
| Segments Covered | Chip Type, Processing Type, Data Center Type, Application, and Region |
| Regions Covered | North America, Europe, Asia-Pacific, Latin America, and Middle East & Africa |

Segment Insights

Chip Type Insights

What Made GPUs the Leading Segment in the AI Chips for Data Center and Cloud Market?

The GPUs (graphics processing units) segment led the market with the largest share in 2025. This is mainly due to their parallel processing architecture, strong software ecosystems such as NVIDIA's CUDA, and their ability to meet the growing computational demands of complex AI models. GPUs can be scaled into supercomputers using high-speed interconnects and advanced networking, enabling massive AI systems capable of exaFLOPS performance. Large AI models, especially large language models, now contain billions or even trillions of parameters and require enormous memory and computing power, which GPUs provide.

The ASICs/custom AI accelerators segment is expected to grow at the fastest rate during the projection period due to their superior energy efficiency, performance optimization for specific AI tasks, and cost benefits at scale compared to general-purpose GPUs. With fixed, optimized logic, ASICs provide lower latency and higher throughput for stable AI algorithms. Hyperscalers are motivated to develop their own custom silicon to better integrate with proprietary software, maintain confidentiality, and reduce reliance on external vendors and their pricing structures.

Processing Type Insights

How Does the Training Chips Segment Dominate the AI Chips for Data Center and Cloud Market?

The training chips segment dominated the market in 2025. This is mainly because developing and refining advanced AI models requires immense computational power. Training involves processing large datasets through neural networks that perform billions of calculations simultaneously. As AI models become larger and more complex, they also need more powerful, specialized high-performance training chips to enhance the efficiency and speed of training workloads.

The inference-optimized accelerators segment is expected to grow at the fastest CAGR during the forecast period. As trained models move into production, real-time processing at deployment scale can collectively demand more computational power than training, driving the need for energy-efficient, cost-effective hardware. Growing pressure to process AI tasks closer to data sources, in order to reduce latency and bandwidth consumption and to improve data privacy, further contributes to segmental growth.

Data Center Type Insights

Why Did the Hyperscale Data Centers Segment Lead the AI Chips for Data Center and Cloud Market?

The hyperscale data centers segment led the market in 2025 due to their ability to handle vast amounts of data and deliver high-performance computing at scale. These data centers, designed for massive processing power and efficiency, are critical to supporting AI workloads, machine learning, and large-scale cloud applications. The increasing demand for data-driven services, such as AI-driven analytics and real-time processing, has fueled the growth of hyperscale facilities.

The cloud service provider data centers segment is expected to grow at the fastest CAGR in the coming years. This is mainly due to the rapid expansion of cloud-based AI services and increasing demand for scalable, on-demand computing resources. These facilities support AI and generative AI workloads that require significant computational power and scalability. Major providers such as AWS, Google Cloud, and Microsoft Azure are continually expanding their capacity, investing in high-performance GPUs, TPUs, and custom accelerators to meet rising demand for AI-as-a-Service and cloud-based AI solutions.

Application Insights

How Does the AI Model Training (LLMs, Deep Learning) Segment Dominate the AI Chips for Data Center and Cloud Market?

The AI model training (LLMs, deep learning) segment dominated the market in 2025. This is mainly due to the growing demand for the processing power required to train complex AI models, such as large language models (LLMs) and deep learning networks. These models require massive computational resources, including high-performance AI chips that accelerate training and improve efficiency. As industries continue to adopt AI for tasks such as natural language processing, computer vision, and autonomous systems, the need for specialized hardware such as GPUs, TPUs, and other AI chips has surged.

The generative AI workloads segment is expected to grow at the fastest CAGR during the forecast period. These workloads require unprecedented computational power and advanced hardware that traditional data centers are unable to efficiently provide. The application of GenAI is rapidly expanding into sectors such as healthcare, finance, retail, and entertainment, driven by the growing need to train and deploy large language models and other complex AI systems to meet increasingly stringent performance requirements.

Regional Insights

What Made North America the Dominant Region in the AI Chips for Data Center and Cloud Market?

North America led the market with the largest share in 2025, fueled by technological innovation, extensive infrastructure investment, a deep talent pool, and supportive government policies. The region is home to major hyperscale operators such as AWS, Google Cloud, Microsoft Azure, and Meta, all of which are key investors in data center infrastructure. The U.S. and Canada host multi-billion-dollar data center expansions, with leading tech firms investing billions each year in AI infrastructure. Initiatives such as the U.S. CHIPS Act provide significant federal subsidies to strengthen domestic semiconductor supply chains, including those for AI chips.

U.S. AI Chips for Data Center and Cloud Market Analysis

The U.S. holds a dominant position in the global market, housing leading AI chip designers such as NVIDIA, Intel, and AMD, which create advanced GPUs and custom processors essential for AI workloads in data centers. Major U.S.-based cloud service providers like AWS, Microsoft Azure, and Google Cloud also fuel global demand and innovation in data center architecture, often designing their own tailored AI hardware and making significant investments in data centers worldwide.

Why is Asia Pacific Considered the Fastest-Growing Region in the AI Chips for Data Center and Cloud Market?

Asia Pacific is poised for the fastest growth in the market, driven by substantial investments from hyperscalers, a large and rapidly digitizing population, and supportive government policies promoting high-performance, AI-ready infrastructure. Data residency and sovereignty laws in countries such as India, Indonesia, and Malaysia that mandate local data storage are fueling the construction of regional data centers. Additionally, investments in submarine cable networks are enhancing both regional and international connectivity, positioning Asia Pacific as a key hub for data transfer and making it a critical gateway to global markets.

India AI Chips for Data Center and Cloud Market Analysis

The market in India is growing due to several key factors, including the country's rapidly expanding digital infrastructure, the rise of AI-driven applications across industries, and strong government initiatives promoting technology adoption. India's growing tech ecosystem, fueled by the rise of cloud computing and data analytics, demands high-performance AI chips to support tasks such as machine learning, big-data processing, and real-time decision-making. Additionally, government policies focused on digital transformation, such as the Digital India initiative, are encouraging investment in AI-ready infrastructure and data centers.

What Makes Europe a Notably Growing Area in the AI Chips for Data Center and Cloud Market?

Europe is a notably growing region in the market due to strong investments in AI research, advanced digital infrastructure, and the presence of major technology and cloud service providers. Supportive government initiatives and funding programs encourage the adoption of AI-ready data centers, while strict data privacy and sovereignty regulations drive the development of local infrastructure. Europe's focus on industrial automation, smart cities, and AI-driven services across sectors such as healthcare, finance, and manufacturing is boosting the demand for high-performance AI chips in data centers and cloud environments. Moreover, the region is home to many world-class AI research institutions and a growing pool of skilled talent, essential for driving innovation in the AI and data center sectors.

Germany AI Chips for Data Center and Cloud Market Analysis

Germany leads the market in Europe, driven by its focus on industrial AI and IoT applications, along with a strong emphasis on sustainability and data sovereignty. Frankfurt, the country's largest data center hub, plays a central role, supported by robust industrial demand for digital transformation. Major companies such as Siemens, Bosch, and Infineon leverage AI chips for applications including predictive maintenance and smart manufacturing, reinforcing Germany's leadership in the region.

What Drives the AI Chips for Data Center and Cloud Market in South America?

The market in South America is fueled by rapid cloud adoption, increasing demand for AI-powered services, significant investments in digital infrastructure by global technology giants, and supportive government policies. The region has a large, digitally engaged population, a strategic location for nearshoring, and plentiful renewable energy resources. Major global cloud providers, including AWS, Microsoft Azure, and Google Cloud, are actively expanding their presence by establishing new cloud regions and hyperscale data centers to deliver low-latency, local services. Additionally, the region's focus on improving connectivity and modernizing IT infrastructure creates opportunities for AI chip adoption in both enterprise and cloud environments.

Brazil AI Chips for Data Center and Cloud Market Analysis

Brazil is emerging as the leading data center hub in Latin America, hosting nearly half of all planned and existing data centers in the region. It is attracting investments from global companies because of its strong connectivity through many submarine cables and internet exchange points. Government initiatives to improve digital infrastructure, coupled with investments in local data centers to meet data sovereignty requirements, are further fueling the market. Additionally, Brazil's expanding tech ecosystem and growing need for high-performance computing to support AI workloads are driving the adoption of advanced AI chips in data centers and cloud environments.

What Opportunities Exist in the Middle East & Africa for the AI Chips for Data Center and Cloud Market?

The Middle East & Africa (MEA) offers significant opportunities in the market. These opportunities arise from strategic government initiatives for economic diversification, abundant capital and energy resources, a strategic geographic location, and growing local demand for AI-driven solutions. Countries like the UAE and Saudi Arabia have launched national AI strategies and smart city programs to diversify their economies beyond oil dependence. High internet penetration rates and the adoption of 5G networks are fueling demand for local data storage and processing.

UAE AI Chips for Data Center and Cloud Market Trends

The market is growing in the UAE due to the country's strong focus on digital transformation, cloud adoption, and smart city initiatives. Government-led programs, such as the UAE AI Strategy, are promoting AI integration across sectors like healthcare, finance, and transportation, driving demand for high-performance AI chips. Additionally, investments in state-of-the-art data centers, hyperscale infrastructure, and advanced connectivity solutions are fueling the adoption of AI-ready hardware to support cloud services and enterprise applications.

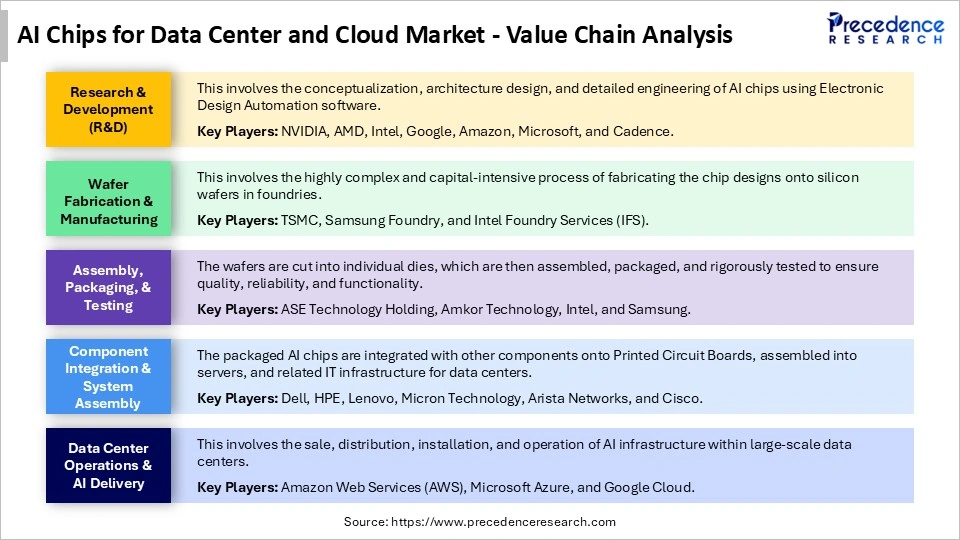

AI Chips for Data Center and Cloud Market Value Chain

Top Companies in the AI Chips for Data Center and Cloud Market & Their Offerings

- NVIDIA: Offers high-performance GPUs like the H100/H200 and the newer Blackwell B200/GB200 series, which are the foundational hardware for most AI training and inference.

- AMD: Offers the Instinct MI300X and the upcoming MI400 series accelerators, designed for high-performance computing (HPC) and large language model (LLM) training.

- Intel: Focuses on end-to-end AI solutions, leverages its extensive manufacturing capabilities, and offers Gaudi 3 AI accelerators for deep learning.

- Google: Offers Tensor Processing Units (TPUs), custom-designed ASICs optimized for machine learning workloads and supported by frameworks such as TensorFlow, JAX, and PyTorch.

- Amazon Web Services (AWS): The largest cloud provider, AWS offers Trainium chips for AI model training and Inferentia chips for high-efficiency, low-latency inference workloads.

Other Key Players

- Microsoft (Azure)

- Meta Platforms

- Cerebras Systems

- Groq

- Huawei

Recent Developments

- In December 2025, Marvell Technology announced its acquisition of Celestial AI to strengthen its connectivity strategy for AI and cloud data centers. The acquisition aims to advance multi-rack configurations with high-bandwidth, low-latency architectures. According to Matt Murphy, Chairman and CEO of Marvell, the deal positions Marvell as a leader in AI connectivity, which he described as the next frontier in AI infrastructure. (Source: https://investor.marvell.com)

- In June 2025, AMD unveiled its integrated AI platform and scalable rack-scale AI infrastructure at its Advancing AI event. AMD CEO Dr. Lisa Su highlighted the company's rapid pace of AI innovation, marked by the launch of the AMD Instinct MI350 series accelerators, which AMD says deliver a 4x generational increase in AI compute and a 35x generational leap in inferencing, enabling transformative solutions in generative AI and high-performance computing. (Source: https://ir.amd.com)

- In October 2025, Qualcomm Technologies launched its next-generation AI inference solutions, the Qualcomm AI200 and AI250 accelerator cards. These products offer rack-scale performance, high memory capacity, and efficiency for AI workloads, with the AI250 featuring a near-memory computing architecture that boosts effective memory bandwidth and reduces power consumption. (Source: https://www.qualcomm.com)

Segments Covered in the Report

By Chip Type

- GPUs (Graphics Processing Units)

- CPUs with AI acceleration

- FPGAs (Field Programmable Gate Arrays)

- ASICs/Custom AI Accelerators

- NPUs/TPUs

By Processing Type

- Training chips

- Inference chips

- Inference-optimized accelerators

By Data Center Type

- Hyperscale data centers

- Enterprise data centers

- Colocation data centers

- Cloud service provider data centers

By Application

- AI model training (LLMs, deep learning)

- Natural language processing

- Computer vision

- Recommendation engines

- Speech recognition

- Generative AI workloads

By Region

- North America

- Europe

- Asia-Pacific

- Latin America

- Middle East and Africa

For inquiries regarding discounts, bulk purchases, or customization requests, please contact us at sales@precedenceresearch.com
