What is the Artificial Intelligence (AI) Chipsets Market Size?

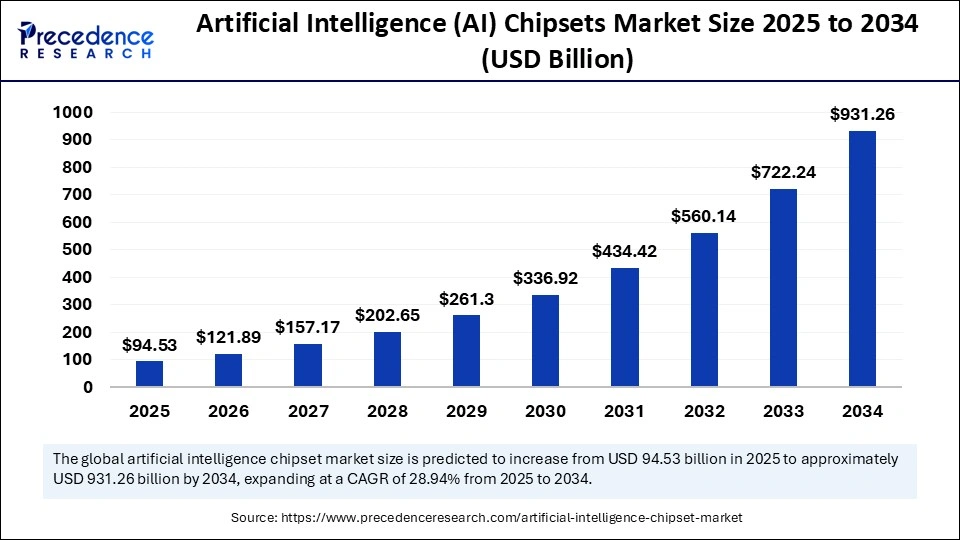

The global artificial intelligence (AI) chipsets market size accounted for USD 94.53 billion in 2025 and is predicted to increase from USD 121.89 billion in 2026 to approximately USD 931.26 billion by 2034, expanding at a CAGR of 28.94% from 2025 to 2034. The market growth is attributed to surging demand for energy-efficient, high-performance processors to support advanced AI applications across cloud, edge, and embedded systems.

Market Highlights

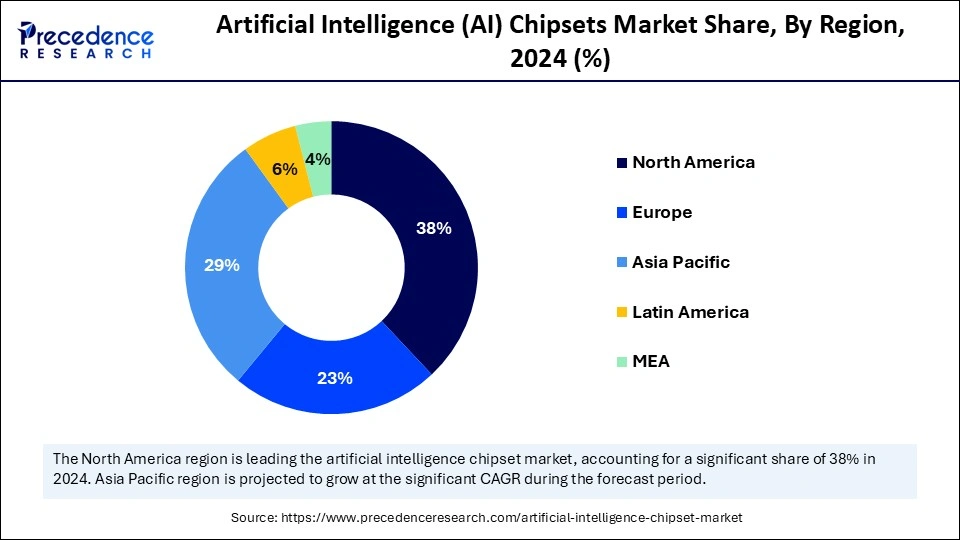

- North America dominated the artificial intelligence (AI) chipsets market with the largest share of 38% in 2024.

- Asia Pacific is expected to grow at the fastest CAGR between 2025 and 2034.

- By chipset type, the GPU (graphics processing unit) segment held the major market share of 36% in 2024.

- By chipset type, the ASIC (application specific integrated circuit) segment is expected to grow at a significant CAGR between 2025 and 2034.

- By technology node, the 7-10nm segment contributed the biggest market share in 2024, accounting for 40% share.

- By technology node, the <7nm segment is expected to expand at a significant CAGR between 2025 and 2034.

- By processing type, the cloud AI processing segment captured the highest market share of 52% in 2024.

- By processing type, the edge AI processing segment is expected to grow at a significant CAGR over the projected period.

- By functionality, inference chipsets held the largest market share of 58% in 2024.

- By functionality, the training chipsets segment is expected to grow at a notable CAGR from 2025 to 2034.

- By deployment mode, the cloud-based segment generated the major market share of 63% in 2024.

- By deployment mode, the on-premises segment is expected to grow at a notable CAGR from 2025 to 2034.

- By end-use industry, the consumer electronics segment accounted for a significant market share of 29% in 2024.

- By end-use industry, the automotive segment is projected to grow at the highest CAGR between 2025 and 2034.

How Are Artificial Intelligence (AI) Chipsets Transforming the Industry?

The artificial intelligence (AI) chipsets market refers to the industry focused on designing, manufacturing, and deploying specialized semiconductor hardware optimized for processing AI workloads. These chipsets are engineered to accelerate tasks such as machine learning, deep learning, natural language processing, and computer vision. AI chipsets, which include GPUs, TPUs, FPGAs, and ASICs, offer greater parallel processing capability and lower latency than traditional CPUs, enabling faster and more efficient execution of complex AI algorithms across end-use sectors such as automotive, healthcare, consumer electronics, industrial automation, BFSI, and data centers.

Increasing demand for high-performance computing, driven by generative AI, autonomous systems, and large language models, is leading to the swift adoption of artificial intelligence (AI) chipsets worldwide. A 2024 U.S. Department of Commerce semiconductor innovation brief also underscored the importance of AI chip development under the CHIPS and Science Act as a way to address national competitiveness and supply-chain bottlenecks around emerging technologies. Furthermore, the deployment of AI chipsets at the edge is growing, and organizations like IEEE are prioritizing on-device intelligence for autonomous vehicles and industrial robotics.

Artificial Intelligence (AI) Chipsets Market Growth Factors

- Rising Adoption of AI in Drug Discovery: AI-driven predictive modeling and bioinformatics are fueling the need for high-performance chipsets in pharmaceutical R&D pipelines.

- Growing Emphasis on Autonomous Mobility: Expanding investments in autonomous vehicles and ADAS systems are driving demand for edge-optimized AI chipsets with real-time processing capabilities.

- Growing Demand from Language and Vision Models: The widespread deployment of large language models (LLMs) and computer vision platforms is propelling the development of specialized AI accelerators.

- Expanding AI Integration in Industrial Robotics: The automation of manufacturing workflows and smart factories is boosting the adoption of AI chips for precision control and decision-making.

- Increasing Use of AI in Cybersecurity Infrastructure: Rising cyber threats are increasing the demand for AI chipsets capable of enabling real-time threat detection and adaptive defense systems.

Artificial Intelligence (AI) Chipsets Market Outlook

- Global Expansion: An accelerating need for high-speed processing for machine learning, the growth of autonomous systems, and expanded investments in AI research and development are promoting market expansion.

- Major Investor: In September 2025, Nvidia invested $5 billion in Intel to co-develop AI infrastructure and PC chips.

- Startup Ecosystem: SambaNova Systems is a startup that develops Reconfigurable Dataflow Units (RDUs) and the DataScale platform.

Market Scope

| Report Coverage | Details |
| --- | --- |
| Market Size in 2025 | USD 94.53 Billion |
| Market Size in 2026 | USD 121.89 Billion |
| Market Size by 2034 | USD 931.26 Billion |
| Market Growth Rate from 2025 to 2034 | CAGR of 28.94% |
| Dominating Region | North America |
| Fastest Growing Region | Asia Pacific |
| Base Year | 2024 |
| Forecast Period | 2025 to 2034 |
| Segments Covered | Chipset Type, Technology Node, Processing Type, Functionality, Deployment Mode, End-Use Industry, and Region |
| Regions Covered | North America, Europe, Asia-Pacific, Latin America, and Middle East & Africa |
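The headline figures above can be cross-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / periods) - 1. The following is a minimal Python sketch, not part of the report's methodology; the function name `cagr` and the variable names are illustrative, and it assumes the 2025 to 2034 window is treated as nine annual compounding periods.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Return the compound annual growth rate as a fraction (0.25 means 25%)."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Figures taken from the report (USD billion).
market_2025 = 94.53
market_2034 = 931.26

# Assumption: 2025 -> 2034 counts as nine compounding periods.
years = 2034 - 2025

implied = cagr(market_2025, market_2034, years)
print(f"Implied CAGR 2025-2034: {implied:.2%}")
```

Under that nine-period assumption, the implied rate should land close to the reported 28.94%. The same function can be applied to the regional figures to check whether their stated growth rates are consistent with their start and end values.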

Market Dynamics

Drivers

How Does the Growing Demand for AI Applications Drive Market Growth?

The increasing adoption of AI-powered applications across industries is expected to drive the growth of the artificial intelligence (AI) chipsets market. Industries including automotive, healthcare, finance, and logistics increasingly use AI for real-time analytics, decision-making, and automation. This trend is intensifying the need for hardware that can process large quantities of data with minimal latency and optimized energy consumption. Manufacturers are prioritizing chipsets optimized for inference and training tasks, particularly in mission-critical settings.

As enterprise use cases grow in number and complexity, demand for scalable, application-specific chips continues to rise. According to 2024 Bloomberg reports, automotive companies such as Tesla and BMW significantly advanced their use of AI in self-driving and driver-assistance technology, creating demand for new, reliable automotive-grade chipsets. In 2024, MIT Technology Review noted that healthcare startups applying AI to diagnostics and drug discovery depended heavily on capable AI hardware, particularly for on-premise and real-time imaging. Furthermore, the deep integration of AI into consumer electronics is likely to increase volume requirements for versatile AI chipsets, further fueling the market.

Restraint

Supply Chain Disruptions and Geopolitical Tensions

Supply chain disruptions and geopolitical tensions hamper the consistent production and delivery of AI chipsets, restraining the growth of the artificial intelligence (AI) chipsets market. Trade restrictions, export controls, and strained relations between major economies, such as the U.S. and China, create uncertainty across semiconductor supply chains. Such tensions limit access to vital raw materials, advanced fabrication equipment, and key intellectual property. Furthermore, high development and fabrication costs are anticipated to limit participation from smaller players in the market.

Opportunity

Why are Edge AI Innovations Becoming Critical for the Growth of the Artificial Intelligence (AI) Chipsets Market?

Surging investment in edge computing technologies is projected to create immense opportunities for market players and is likely to increase demand for low-power AI chipsets. By processing data near its source, edge AI reduces latency, eases bandwidth constraints, and improves data privacy. Enterprises are developing edge-optimized hardware that supports fast inference and thermally efficient operation. The rise of Industry 4.0, smart cities, and ubiquitous computing underscores the relevance of local AI computation.

- In August 2025, South Korean AI chip startup FuriosaAI joined the ranks of the country's unicorn companies after securing approximately KRW 170 billion (~$130 million) in a Series C bridge funding round. Supported by more than 40 institutional and private investors, including Korea Development Bank and Kakao Investment, the funding represents a significant milestone for Korea's deep tech industry. FuriosaAI aims to scale up mass production of its AI processor, RNGD, while fast-tracking the development of next-generation semiconductor technologies. Moreover, rising competition among semiconductor companies is expected to stimulate innovation and diversification in AI chipset development, further propelling the market in the coming years.

Segment Insights

Chipset Type Insights

Why Did the GPU Segment Dominate the Market in 2024?

The graphics processing unit (GPU) segment dominated the artificial intelligence (AI) chipsets market, accounting for an estimated 36% share in 2024, primarily due to its parallel processing capabilities. This makes GPUs ideal for extensive training and inference tasks in data centers and cloud environments. Key AI applications, including generative models, computer vision, and natural language processing, depend heavily on GPUs for their ability to run numerous threads concurrently. Companies like NVIDIA, AMD, and Intel have enhanced GPU architectures to meet the demands of deep learning frameworks, further solidifying the GPU's critical role in the AI landscape.

NVIDIA's H100 Tensor Core GPU gained significant traction in 2024, especially for transformer model training, which is crucial for industries using generative AI. Cloud providers like AWS, Microsoft Azure, and Google Cloud strengthened their positions by expanding their GPU-accelerated infrastructure. Data centers also increased their use of GPUs to support large language models from companies like OpenAI and Meta, further driving the segment's growth. This widespread adoption underscores the GPU's importance in advancing AI technologies.

The ASIC (application specific integrated circuit) segment is expected to grow at the fastest CAGR in the coming years due to its superior efficiency and performance in specific AI functions, especially in inference-heavy environments. These chipsets offer better power efficiency and smaller sizes compared to general-purpose hardware, making them ideal for edge devices and enterprise AI applications. Major players like Google (TPUs), Amazon (Inferentia), and Huawei are investing heavily in ASIC development to enhance cloud-native applications, further driving demand for this segment. This strategic focus highlights the ASIC's growing importance in the AI landscape.

Technology Node Insights

How Did the 7-10nm Segment Lead the Market in 2024?

The 7-10nm segment led the artificial intelligence (AI) chipsets market with a 40% share in 2024, driven by its balance of performance, power efficiency, and manufacturing scalability. Accelerators based on 7-10nm designs offer good thermal characteristics and sufficient transistor density for complex training and inference tasks. Cloud providers favored these components for upgrading their hyperscale infrastructure due to their good yields and compatibility with existing server platforms. This widespread adoption highlights the segment's critical role in the market.

The <7nm segment is expected to grow at the fastest CAGR in the coming years due to its high transistor density, lower power consumption, and increased performance in AI models. New process technologies, such as 5nm and 3nm, offer much faster data throughput, which is crucial for large-scale training and inference.

Companies like TSMC, Intel, and Samsung are increasing their production of <7nm chips to meet the rising demand. In 2024, NVIDIA and Apple adopted 5nm and under-5nm chipsets for high-end AI platforms and next-generation consumer products. This rapid shift to ultra-fine nodes highlights the market's focus on reducing latency, increasing model capacity, and improving compute efficiency.

Processing Type Insights

What Made Cloud AI Processing the Dominant Segment in the Artificial Intelligence (AI) Chipsets Market?

The cloud AI processing segment dominated the market with about 52% share in 2024. High-performance AI infrastructure in the cloud enables scaling in training and deployment of models across applications like natural language processing and computer vision. Hyperscale cloud vendors, including AWS, Google Cloud, and Microsoft Azure, have been investing heavily in AI-optimized data centers.

- In 2024, Bloomberg noted that producers of large language models like OpenAI and Meta heavily utilize cloud AI processing, driven by the need to train and use massive, multi-trillion-parameter models. Google announced its AI Hypercomputer, described by NBC as blending fast TPUs with a rapid interconnect structure to improve the multi-node training process. Furthermore, prominent chipmakers, including NVIDIA and AMD, ramped up the production of data center-grade accelerators, such as the H100 and MI300, further solidifying the segment's dominance.

The edge AI processing segment is expected to grow at the fastest rate in the coming years, driven by the need for low-latency, on-device intelligence for real-time applications. On-edge processing of AI systems in autonomous vehicles, industrial robots, wearables, and surveillance systems requires power efficiency and independence from external servers. Investments in smart city infrastructure have rapidly increased edge deployments in traffic monitoring and energy management systems, further boosting the market.

Functionality Insights

Why Did the Inference Chipsets Segment Lead the Market in 2024?

The inference chipsets segment led the market, holding the largest share of about 58% in 2024, due to their low-latency processing and energy-efficient execution of AI models. Chipmakers focused on creating AI accelerators that reduce response times by minimizing the need to contact cloud servers. Meta and Amazon deployed custom inference silicon in their data centers to handle billions of low-latency queries daily, further supporting the adoption within functional AI systems.

The training chipsets segment is expected to grow at the fastest CAGR over the forecast period, owing to increasing investments by enterprises and research institutes in developing large models. Training chipsets are optimized for the matrix operations needed to train foundation models, including large language models (LLMs), diffusion models, and multimodal AI solutions. Moreover, sovereign AI projects in Europe, China, and the Middle East have sparked demand for domestic training clusters built on high-performance chipsets.

Deployment Insights

What Made Cloud-Based the Primary Deployment Mode for AI Chipsets?

The cloud-based segment dominated the artificial intelligence (AI) chipsets market in 2024, holding a market share of approximately 63%, as it supports flexible AI workloads, global access, and the ability to scale and pool compute resources. Major cloud service providers expanded the infrastructure they offer to customers by provisioning AI chipsets, including GPUs, TPUs, and custom ASICs. Furthermore, corporations such as Meta, OpenAI, and Salesforce ran models with trillions of parameters in cloud computing environments to support high-end model training and on-demand inference, further fueling the segment.

The on-premise segment is expected to grow at the fastest CAGR during the projection period due to rising concerns about data security, bandwidth limitations, and AI regulatory compliance. This deployment model is anticipated to gain traction among government agencies, healthcare providers, and financial institutions that require secure, localized AI processing. Furthermore, sectors like biotech, law enforcement, and high-frequency trading are seeing significant demand for the secure, low-latency AI inference capabilities offered by on-premise computing.

End-Use Industry Insights

How Does the Consumer Electronics Segment Lead the Artificial Intelligence (AI) Chipsets Market?

The consumer electronics segment held the largest revenue share of the artificial intelligence (AI) chipsets market in 2024. This is mainly due to the increased proliferation of AI-enabled smartphones, smart speakers, tablets, and wearables, which significantly boosted the demand for smaller, energy-efficient chipsets capable of real-time inferences. Leading device manufacturers integrated custom neural processing units (NPUs) into their premium devices to manage functions like voice recognition, facial unlocking, and smart image processing.

In September 2024, Intel (INTC) introduced two new AI chips, a strategic move to bolster its data center business and more aggressively compete with AMD (AMD) and Nvidia (NVDA). The Xeon 6 CPU and the Gaudi 3 AI accelerator are designed for enhanced performance and greater power efficiency, as Intel aims to become a key player in the expanding AI sector.

NBC reported that the integration of artificial intelligence, including virtual assistants and context-aware functionalities, has become standard in mid-range and high-end devices. The growing demand for intelligent consumer devices, driven by continuous innovation, is expected to further solidify the segment's market position in the coming years.

The automotive segment is expected to grow at the fastest rate in the coming years, driven by the quick adoption of AI in electric and autonomous vehicles. AI applications in vehicles, particularly those focused on safety, are a priority for OEMs and Tier 1 suppliers, with adaptive cruise control and driver behavior monitoring being key. Furthermore, autonomous driving software requires substantial processing power, leading some automakers to integrate specialized AI-processing hardware like NVIDIA Drive Thor and Qualcomm Snapdragon Ride into their vehicles.

Regional Insights

U.S. Artificial Intelligence (AI) Chipsets Market Size and Growth 2025 to 2034

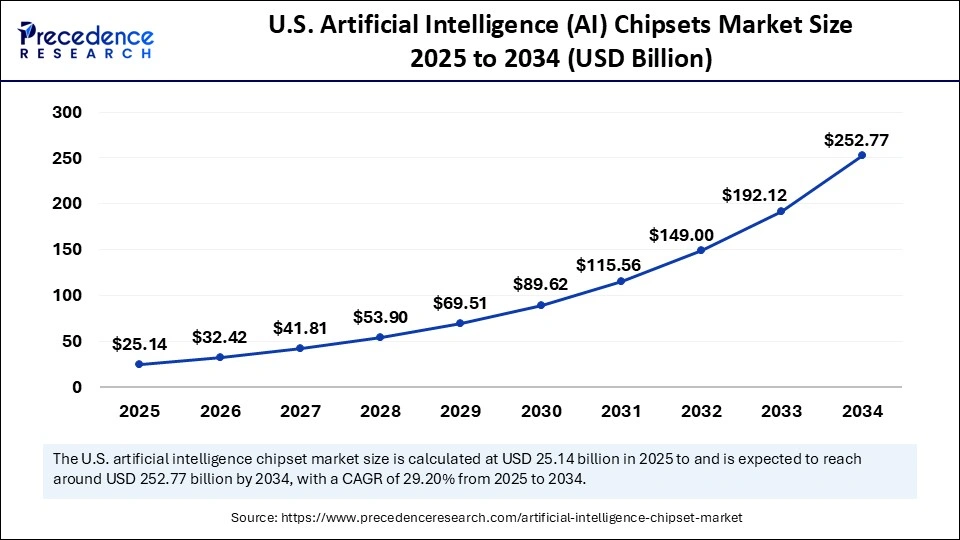

The U.S. artificial intelligence (AI) chipsets market size is estimated at USD 25.14 billion in 2025 and is projected to be worth around USD 252.77 billion by 2034, growing at a CAGR of 29.20% from 2025 to 2034.

What Made North America the Dominant Region in the Artificial Intelligence (AI) Chipsets Market?

North America dominated the artificial intelligence (AI) chipsets market, capturing the largest revenue share in 2024. This is mainly due to the high concentration of AI research in the region, which drives innovation and attracts significant venture capital. Furthermore, the presence of major semiconductor and cloud service enterprises, such as NVIDIA, Intel, and Google, has fostered a robust ecosystem for AI technology. These companies have also significantly scaled their AI infrastructure, solidifying North America's position as a leader in the field.

In 2024, Bloomberg reported that companies like OpenAI, Meta Platforms, and Tesla were significantly increasing their purchases of high-performance chipsets to support large model training, autonomous systems, and generative AI workloads. The U.S. Department of Commerce also boosted funding through the CHIPS and Science Act to bolster domestic semiconductor production, benefiting these companies. Additionally, the rising demand from defense and aerospace sectors for custom chipsets to power AI-driven simulations and edge computing systems further fueled market growth.

Advanced Packaging Investments Are Driving Growth in Asia Pacific

Asia Pacific is expected to experience the fastest growth in the market during the forecast period. The growth of the market in the region is driven by national semiconductor policies, increasing technology adoption, and rapid industrial automation. In 2024, Reuters highlighted that China, South Korea, and Japan are significantly investing in AI chipset design and advanced packaging technologies. The diverse applications of AI in autonomous manufacturing and smart city projects are also expected to fuel growth, positioning Asia Pacific as a global AI chipset hub.

Ongoing Technological Breakthroughs Support the U.S. Market

The expansion of the U.S. market has been driven by recent technological advances, such as Intel's new Xeon 6 CPUs and Gaudi 3 accelerators, Qualcomm's upcoming data center chips, and the start of U.S. manufacturing for NVIDIA's advanced chips.

Alliances and Facility Expansion Shape the South Korean Market

Recent progress has been fueled by the collaboration of South Korea's government, Samsung, SK Group, and Hyundai with Nvidia to secure nearly 260,000 GPUs for AI infrastructure. At the same time, Samsung developed a new facility with over 50,000 Nvidia GPUs to integrate AI across its manufacturing processes, employing CUDA-X and cuLitho for digital twins and yield improvement.

Exploration of Diversification is Driving Europe

Europe is experiencing notable growth in the artificial intelligence (AI) chipsets market, supported by developments such as the specialised Intelligence Processing Units (IPUs) designed by the UK's Graphcore and France's Intel/BMW alliance on AI chips for self-driving cars. Moreover, European companies, including Unbabel, are advancing large language models (LLMs) for the region's various languages.

Wider Funding and New Data Centers Elevate the French Market

The French government plans to devote €1.5 billion to AI research and development through 2025. In addition, Core42, a G42 company, invested in France with DataOne to develop a new AI data center in Grenoble.

Key Players in the Artificial Intelligence (AI) Chipsets Market and Their Offerings

- Tenstorrent Inc.: It primarily provides robust solutions for data centers, automotive, and robotics applications.

- SiFive, Inc.: It offers various RISC-V processor IP (intellectual property) cores for artificial intelligence (AI) applications, mainly within its SiFive Intelligence family.

- Samsung Electronics Co., Ltd.: It emphasizes Exynos application processors (APs) for mobile and automotive devices and high-bandwidth memory (HBM) chips for data centers.

- Qualcomm Technologies, Inc.: It offers AI chips for a range of edge devices (smartphones, PCs, IoT, and automotive) and for data centers.

- NVIDIA Corporation: It offers diverse AI chips based on its Ampere, Hopper, Ada Lovelace, and newest Blackwell architectures.

Recent Developments

- In August 2024, AMD unveiled its new AI accelerator, the Instinct MI325X, designed to directly compete with NVIDIA's data center GPUs. Production is expected to begin by the end of 2024. The company aims to offer a compelling alternative for hyperscalers and enterprise customers, potentially pressuring NVIDIA's pricing strategy, which has supported gross margins of approximately 75%, according to company financials. (Source: https://www.cnbc.com)

- In May 2025, Reuters reported that NVIDIA, in response to U.S. export restrictions, was preparing to release a lower-cost, China-specific AI chipset based on its Blackwell architecture. Set to launch in May 2025 and enter mass production by June, the GPU will be priced between $6,500 and $8,000, significantly lower than the $10,000–$12,000 of the restricted H20 model. This move enables NVIDIA to retain market share in China while complying with export regulations. (Source: https://www.livemint.com)

- In August 2025, Intel introduced its first AI-enabled system-on-chip (SoC) for software-defined vehicles (SDVs), marking its entry into the automotive AI space. Through a partnership with Zeekr, Intel's hardware will debut in a production vehicle by the end of the year. The chip focuses on improving voice assistants, in-car safety, and dynamic driving features, supporting the broader shift to AI-driven mobility ecosystems. (Source: https://news.aibase.com)

Latest Announcement by Industry Leader

- In June 2025, Advanced Micro Devices CEO Lisa Su introduced a new artificial intelligence server set for 2026, designed to compete with Nvidia's (NVDA.O) leading products, as OpenAI's CEO announced the ChatGPT developer would begin using AMD's latest chips. Su spoke at the "Advancing AI" developer conference in San Jose, California, highlighting the MI350 series and MI400 series AI chips, which she said will rival Nvidia's Blackwell line of processors. “The future of AI is not going to be built by any one company or in a closed ecosystem. It's going to be shaped by open collaboration across the industry,” Su stated.

(Source: https://www.reuters.com)

Segments Covered in the Report

By Chipset Type

- GPU (Graphics Processing Unit)

- ASIC (Application Specific Integrated Circuit)

- FPGA (Field Programmable Gate Array)

- CPU (Central Processing Unit)

- SoC (System on Chip)

- NPU (Neural Processing Unit)

- TPU (Tensor Processing Unit)

- Others (e.g., analog chips optimized for edge inference)

By Technology Node

- <7nm

- 7–10nm

- 11–28nm

- >28nm

By Processing Type

- Edge AI Processing

- Cloud AI Processing

- Hybrid AI Processing

By Functionality

- Training Chipsets

- Inference Chipsets

By Deployment Mode

- On-Premise

- Cloud-Based

By End-Use Industry

- Consumer Electronics

  - Smartphones

  - Smart Speakers

  - Smart TVs

  - Wearables

- Automotive

  - Advanced Driver-Assistance Systems (ADAS)

  - Autonomous Vehicles

- Healthcare

  - Imaging & Diagnostics

  - Robotics Surgery

  - Virtual Nursing Assistants

- BFSI

  - Fraud Detection

  - Automated Trading

  - Customer Experience

- Industrial

  - Predictive Maintenance

  - Quality Control

- Retail

  - Inventory Management

  - Recommendation Engines

- Telecommunication

- Defense & Aerospace

- Data Center

- Others (Education, Agriculture, Logistics)

By Region

- North America

- Europe

- Asia Pacific

- Latin America

- Middle East & Africa
